Need help with: [Microsoft][ODBC SQL Server Driver]Timeout expired

Hello.

I have an MS Access 2K application with a split FE/BE configuration.

The workstation runs XP Pro, and MS SQL Server 2008 R2 is installed on a computer on the LAN.

Other databases are Access 2K on a Windows 2K server.

This is a Windows 2003 Server environment.

I'm running a very long routine on 10,000+ records. The routine loops through each record and creates HTML code that can be uploaded to a website.

The routine fails after looping through approximately 10,000 records. I get the following error:

[Microsoft][ODBC SQL Server Driver]Timeout expired

Can anyone help?


I don't like my server to get flatlined because it makes users unhappy, so when my app starts, it runs a pass-through query which has this SQL in it:

exec spTimeout15

(Now, it wasn't until later that I discovered you shouldn't give your own stored procedures an 'sp' prefix, lest they conflict with the system's.)

That stored procedure contains:

SET LOCK_TIMEOUT 15000

SELECT @@LOCK_TIMEOUT

That sets the maximum time a query is allowed to take to 15000 milliseconds.

Which is reasonable almost all the time.

BUT, you have those times when a bitch of a query needs to run and complete...

So I also have a stored procedure with

SET LOCK_TIMEOUT 120000

SELECT @@LOCK_TIMEOUT

I run that one when I KNOW I need to run something heavy.

Sounds like you need something similar.

Or you need to create a better query!


ASKER

Dale,

My code looks like this:

Set oRS = oDB.OpenRecordset(strSQLte

With oRS

'oRS is all parts in SelectPartsTable with the ComponentMaster info joined

.MoveFirst

Do While Not .EOF

DoEvents

<<about 200 lines of code that calculate values, prices, and create HTML>>

.MoveNext

Loop

End With


ASKER

Oops... forgot this:

strSQLtext = "SELECT SelectedParts.*, ComponentMaster.Notes,Comp

strSQLtext = strSQLtext & "PackageMaster.PackageName

strSQLtext = strSQLtext & "ComponentMaster.PackageLe

strSQLtext = strSQLtext & "ComponentMaster.Title255 , ComponentMaster.SeriesID, ComponentMaster.PartName, ComponentMaster.ClassFamil

strSQLtext = strSQLtext & "ComponentMaster.Alternate

strSQLtext = strSQLtext & "FROM ((SelectedParts INNER JOIN ComponentMaster ON (SelectedParts.SearchNumbe


1) I don't see any VBA variables, controls, or anything else in there, so why don't you save this query so the engine can optimize it?

And then access it in code through QueryDefs("TheQueryName")

Are you pulling too many fields for that?

2) With a Select * in there, Access is likely to request the whole shebang from the server

3) Are there indexes on all the fields on both sides of the joins (SearchNumber, LineID, PartClass, PackageC

--a missing index can be a performance killer--

4) If this really is heavy duty, why not do it as a view or stored procedure on the SQL Server?

Do you have the permission to create views or stored procedures?

Because this is fairly straightforward SQL and wouldn't be a bear to do up.
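Point 3 can be checked rather than guessed. SQL Server and Access each have their own plan tools; as a self-contained stand-in, here is a Python sketch using the standard-library sqlite3 module (an assumption, not the thread's stack) showing a lookup on ComponentMaster.SearchNumber switch from a full table scan to an index search once an index exists. The sample rows and the index name idx_cm_search are made up for illustration.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return SQLite's EXPLAIN QUERY PLAN detail strings for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the readable detail

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ComponentMaster (SearchNumber TEXT, PartName TEXT, Notes TEXT)")
conn.executemany(
    "INSERT INTO ComponentMaster VALUES (?, ?, ?)",
    [(f"SN{i}", f"Part {i}", "") for i in range(1000)],
)
lookup = "SELECT PartName FROM ComponentMaster WHERE SearchNumber = ?"

before = query_plan(conn, lookup, ("SN42",))  # no index yet: a full scan
conn.execute("CREATE INDEX idx_cm_search ON ComponentMaster (SearchNumber)")
after = query_plan(conn, lookup, ("SN42",))   # now a search using the new index
print(before)
print(after)
```

The same habit applies on the SQL Server side: look at the plan before and after adding an index instead of waiting hours for the full run.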


ASKER

Hello Nick, thanks for helping once again. Hello Dale, too, same to you.

The ComponentMaster, ManufacturerMaster, and PackageMaster tables are still MS Access tables, which run on a Windows 2K server connected to our LAN. This is basically all the info we have about parts that we sell.

The SelectedParts table is on the MS SQL Server 2008 R2, which is also connected to our LAN, but it runs from a workstation. We cycle through the records one at a time and create lots of intelligent HTML, which is saved in the [*Description] and [*Title] fields. It calculates a few more things, just numbers, so not much horsepower is needed for that stuff. The [*Description] field does cause the table to get very large... many gigabytes.

1) Nick, I'm not sure what you meant about the QueryDefs and optimizing...

2) I can get rid of the SelectedParts.* line. I was wondering if that was worth the effort.

I can also break up the routine into two or three passes, but it seems silly that I would have to do that.

3) Indexes, yes!

4) Not sure if I can do this because of the Access tables... can I?


ASKER

Nick, should I be trying SET LOCK_TIMEOUT 120000?

The only way I can tell if it works will be to run a procedure and wait several hours to see if it fails.


SET LOCK_TIMEOUT 120000 is 2 minutes.

If you really need several hours, then something else is severely wrong, either in the networking, the server, or the query.

Even old OSes and hardware shouldn't take hours to do things, short of billion-row ETLs.

If it were me, the first thing I would find out is whether the query or the code is the bottleneck.

Try doing this to your code:

Set oRS = oDB.OpenRecordset(strSQLte

With oRS

'oRS is all parts in SelectPartsTable with the ComponentMaster info joined

.MoveFirst

Do While Not .EOF

'I'm gonna comment it all out and see how long it takes to walk down the recordset

'DoEvents

'all 200 lines commented out

'<<about 200 lines of code that calculate values, prices, and create HTML>>

'not processing anything, just seeing how the query performs

.MoveNext

Loop

End With

How long does it take?

If the answer is hours, you have a query and server problem.

If the answer is seconds or minutes, well, then you have a code problem.

One thing at a time!
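The "comment it all out" test can be scripted so both timings come from one run. This Python sketch uses an in-memory sqlite3 database as a stand-in for the linked tables; fake_html is a hypothetical placeholder for the ~200 lines of per-record work, and the table contents are invented.

```python
import sqlite3
import time

def walk(conn, sql, process=None):
    """Step through every row of a query, optionally running per-row work.

    Returns (row_count, elapsed_seconds)."""
    start = time.perf_counter()
    count = 0
    for row in conn.execute(sql):
        if process is not None:
            process(row)
        count += 1
    return count, time.perf_counter() - start

def fake_html(row):
    # Hypothetical stand-in for the real per-record HTML building.
    return "".join(f"<li>{value}</li>" for value in row) * 50

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE SelectedParts (PartID INTEGER PRIMARY KEY, Title TEXT)")
conn.executemany("INSERT INTO SelectedParts (Title) VALUES (?)",
                 [(f"Part {i}",) for i in range(5000)])

sql = "SELECT PartID, Title FROM SelectedParts"
rows_only, query_seconds = walk(conn, sql)             # the "comment it all out" run
rows_done, total_seconds = walk(conn, sql, fake_html)  # the run with per-row work
print(f"query only: {query_seconds:.3f}s, with processing: {total_seconds:.3f}s")
```

If the walk-only time is already close to the total, the query side is the bottleneck; if it is a small fraction of the total, the per-row code is.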


One option could be to import SelectedParts into an empty local Access database, link it, and modify the query to use it. It will probably run much faster as well.

/gustav


Gustav has a good point. When you are doing queries that include tables from both Access and SQL Server, the query can run slowly. If you could either move SelectedParts to Access (as a temp table, perhaps) or physically move the other 3 tables to SQL Server, the query would probably run much quicker.

ASKER

I am so sorry, I did not mention something that will clear up a few things.

The process I am running consists of 35 different routines. Once initiated, the routines run in order, one by one, until all 35 routines have run.

The objective is to create a table that can be exported to eBay in the format that eBay requires to programmatically list the inventory for sale.

Some of the routines are simple and only take seconds to run; others are complex and take much longer. For example, my pricing routine (routine #22) queries 50K sales records, 200K quotes, and 5 million competitor prices to establish an asking price. This routine runs fine but may take 30 minutes to complete when I'm processing 100,000 rows.

Routine #33 is the problem, and it can take my code a couple of hours just to begin this routine. This routine creates an HTML description. It's simple code: it pulls stuff from the database and includes labels as well as HTML code to make them easy on the eyes. The thing that makes this routine unique is that every 250 records or so expand the file size by 30MB, so 100,000 records, if stored in an Access table, would cause the table to grow by 12GB.

The reason I moved SelectedParts to the SQL server was to get away from the 2GB limitation of MS Access, so this table must be on the server.

Of course, I can rewrite the routine that fails so it can be run as a stand-alone procedure to see what happens, but it would be so much simpler to just solve the timeout expired problem, since the existing code otherwise performs very well. (Functionally, at least!)
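The size figures for routine #33 can be sanity-checked with a little arithmetic. This Python sketch takes the reported rate of about 30 MB per 250 records at face value and assumes 2 GB means 2048 MB; Access's 2 GB file limit also has to cover system objects, so the usable figure is somewhat lower.

```python
def projected_growth_mb(records, records_per_step=250, mb_per_step=30):
    """Extrapolate table growth from the observed rate of ~30 MB per ~250 records."""
    return records * mb_per_step / records_per_step

def records_until_limit(limit_mb=2048, records_per_step=250, mb_per_step=30):
    """Whole records that fit before the file reaches limit_mb at that rate."""
    return limit_mb * records_per_step // mb_per_step

print(projected_growth_mb(100_000))  # 12000.0 MB, i.e. about 12 GB
print(projected_growth_mb(250_000))  # 30000.0 MB for the full inventory
print(records_until_limit())         # 17066 records before a 2048 MB file
```

At that rate a local Access table would hit the 2 GB ceiling after roughly 17,000 records, which is consistent with keeping SelectedParts on the server.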


ASKER

Anyone who can help, please! :-)

I'd like to try Nick's suggestion to create a stored procedure, but I'm an Access guy; I don't remember ever doing a stored procedure before.

I used the following T-SQL code:

CREATE PROCEDURE uspFixedTimeoutProblem

AS

SET LOCK_TIMEOUT 120000

SELECT @@LOCK_TIMEOUT

GO

I think this is what Nick suggested.

Now I think I need to create a pass-through query to execute this as part of my application?

When I create a pass through query, with the following code:

exec uspFixedTimeoutProblem

Access jumps to the Select Data Source screen. When I choose the File DSN that I use and enter my password, a query screen shows up with EXPR1000 and one row, 120000. I don't understand this.

I need to add a line of code to my routine that will run the stored procedure. Can anyone help?


I think you've got it. Your procedure works, runs and sets the max query timeout to 2 minutes. EXPR1000 is the given default name for the column. 120000 is the new value set. This is global to the server and will remain that way until you issue a new Set Lock_Timeout command.

Now, even though you've got the timeout tweaking query in place, I don't think that it will be your solution.

DoEvents is a nice idea, right up until you really understand it.

It basically makes Access wait -- but Access consumes 100% of the processor core time that its thread is on while it does so. On a single-core machine, this essentially makes the machine unresponsive. On a quad-core machine, 25% of the processor time is consumed, and so on.

You want to be very careful about using DoEvents. It has its place when you shell out to some external process that needs to complete before your VBA carries on, but you do need to carefully check and construct the code that uses it so that an error doesn't lead to an infinite loop.

Anyway.

My code looks like this:

Set oRS = oDB.OpenRecordset(strSQLte

With oRS

'oRS is all parts in SelectPartsTable with the ComponentMaster info joined

.MoveFirst

Do While Not .EOF

DoEvents

<<about 200 lines of code that calculate values, prices, and create HTML>>

.MoveNext

Loop

End With

strSQLText creates a big, huge, ugly recordset that times out.

What I would suggest is this:

Dim OuterLoopSQL As String ' SQL that gets only enough PK fields to identify each record of the set you want

OuterLoopSQL = "You've got to build this"

Dim InnerSQLSelect As String ' the SELECT and FROM clauses of the big ugly query

InnerSQLSelect = "And build this"

Set outerRS = oDB.OpenRecordset(OuterLoo

With outerRS

.MoveFirst

Do While Not .EOF

DoEvents

strSQLtext = InnerSQLSelect & " An appropriate WHERE clause constructed with values from outerRS"

Set oRS = oDB.OpenRecordset(strSQLte

'oRS is one record of all parts in SelectPartsTable with the ComponentMaster info joined

<<about 200 lines of code that calculate values, prices, and create HTML for this one record>>

oRS.Close

Set oRS = Nothing

.MoveNext 'now we'll build the next SQL statement and do it

Loop

End With

So your outer recordset used in the loop would be a lot lighter, and inside the loop, you would create the big, ugly recordset for just a single record at a time.

This would have a significantly smaller chance of timing out because each recordset would be much lighter than your present one.
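Here is a minimal sketch of the same two-level pattern in Python, with the standard-library sqlite3 module standing in for DAO recordsets. The table and column names come from the thread, but PartID (a surrogate key on SelectedParts), the sample rows, and the HTML are hypothetical. Passing the key as a ? parameter instead of splicing it into the WHERE string is a choice made here, not something from the original code.

```python
import sqlite3
from html import escape

def build_descriptions(conn):
    """Outer loop reads only keys; the wide joined row is fetched one key at a time."""
    # Outer "recordset": just the primary key, so it stays light.
    keys = [row[0] for row in conn.execute("SELECT PartID FROM SelectedParts ORDER BY PartID")]

    # Inner query: the wide SELECT/FROM, narrowed to one record by a WHERE on the key.
    inner_sql = (
        "SELECT c.PartName, c.Notes "
        "FROM SelectedParts AS s "
        "INNER JOIN ComponentMaster AS c ON s.SearchNumber = c.SearchNumber "
        "WHERE s.PartID = ?"
    )
    descriptions = {}
    for part_id in keys:
        row = conn.execute(inner_sql, (part_id,)).fetchone()
        if row is None:
            continue  # no matching ComponentMaster row for this part
        name, notes = row
        descriptions[part_id] = f"<h2>{escape(name)}</h2><p>{escape(notes)}</p>"
    return descriptions

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE ComponentMaster (SearchNumber TEXT PRIMARY KEY, PartName TEXT, Notes TEXT);
    CREATE TABLE SelectedParts (PartID INTEGER PRIMARY KEY, SearchNumber TEXT);
    INSERT INTO ComponentMaster VALUES ('SN1', 'Resistor', '1/4 W'), ('SN2', 'Cap & Diode', 'kit');
    INSERT INTO SelectedParts (SearchNumber) VALUES ('SN1'), ('SN2'), ('SN9');
""")
print(build_descriptions(conn))
```

Each inner query returns at most one joined row, so no single result set has to hold the whole wide join at once.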


ASKER

Ugh... I've been working with this all weekend and still do not have a solution. I just finished adding the "exec uspFixedTimeoutProblem" line after each line that opened a new recordset... I guess I didn't have to do this?

I've modified my code so that the routine that creates the html does nothing but create the code.

I now call it as a subroutine from the main routine.

The query is nothing more than a select query to extract the data we need from three tables to build a webpage.

It's not the query eating up the time; it's the code that is executing in the loop.

This code is doing nothing more than creating HTML.

As near as I can tell, the code is processing about 20 records per second. We have 250,000 records in our inventory, and our objective is to cycle through every record once; at 20 records/second, one pass through the table would take about 3.5 hours.

Up until now, I've only been processing groups of 10K to 50K records. I did try this today with all 250,000 records, and a routine that runs before the troublesome routine failed with a similar error (System resource exceeded, error 3035).

Is there a way to turn the timeout process off, or set it for six hours or so?

Thanks


I guess I didn't have to do this?


Nope. It's a one-off.

Is there a way to turn the timeout process off, or set it for six hours or so?

1000 milliseconds per second × 60 seconds per minute × 60 minutes per hour × 6 hours = 21,600,000 milliseconds, so:

Alter PROCEDURE uspFixedTimeoutProblem

AS

SET LOCK_TIMEOUT 21600000

SELECT @@LOCK_TIMEOUT

GO
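The same conversion as a small Python helper, handy for generating the SET LOCK_TIMEOUT line for whatever duration you pick. The function name is hypothetical; the constants are the ones listed above.

```python
MS_PER_SECOND = 1000
SECONDS_PER_MINUTE = 60
MINUTES_PER_HOUR = 60

def to_milliseconds(hours=0, minutes=0, seconds=0):
    """Convert a duration to the millisecond value SET LOCK_TIMEOUT expects."""
    total_seconds = (hours * MINUTES_PER_HOUR + minutes) * SECONDS_PER_MINUTE + seconds
    return total_seconds * MS_PER_SECOND

print(f"SET LOCK_TIMEOUT {to_milliseconds(minutes=2)}")  # SET LOCK_TIMEOUT 120000
print(f"SET LOCK_TIMEOUT {to_milliseconds(hours=6)}")    # SET LOCK_TIMEOUT 21600000
```

Units differ between the settings discussed in this thread: SET LOCK_TIMEOUT takes milliseconds (with -1 meaning wait indefinitely), whereas DAO's Database.QueryTimeout property is in seconds.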

is the code that is executing in the loop.

Post it if you'd like. It can't hurt.

200 lines is more than a guy'd like to wrap his brain around, but you're in the weeds.

And getting Access VBA to build HTML is something I do, too.

Hopefully you've got it reasonably commented so it makes good sense.

'assumes fs is a Scripting.FileSystemObject and BuiltPath is an existing folder path

Set BatFile = fs.CreateTextFile(BuiltPath & "\homeview.htm", True)

'write the html

BatFile.WriteLine ("<html>")

BatFile.WriteLine ("<head>")

BatFile.WriteLine (" <title>In The Weeds</title>")

BatFile.WriteLine (" <style type='text/css'>")

BatFile.WriteLine (" .style9")

BatFile.WriteLine (" {")

BatFile.WriteLine (" font-family: Tahoma;")

BatFile.WriteLine (" font-size: small;")

BatFile.WriteLine (" font-weight: normal;")

BatFile.WriteLine (" color: #1E279C;")

BatFile.WriteLine (" }")

BatFile.WriteLine (" Table")

BatFile.WriteLine (" {")

BatFile.WriteLine (" border-collapse:collapse;")

BatFile.WriteLine (" }")

BatFile.WriteLine (" </style>")

BatFile.WriteLine ("</head>")

BatFile.WriteLine ("<Body>")

BatFile.WriteLine ("<p align = 'left'>")

BatFile.WriteLine ("pcalabria is in the weeds")

BatFile.WriteLine ("</body>")

BatFile.WriteLine ("</html>")

BatFile.WriteLine vbCrLf

BatFile.Close

Set BatFile = Nothing

More fun things include knocking stuff into Excel, and then walking rows and columns to create <tr> and <td>:

Private Function CreateSummaryHTML(oSheet As Excel.Worksheet, NumCols, NumRows)

'ok I want to write the summary.htm out because Excel is unreliable to stream it directly to the Outlook page

'or to the web page

'create an html page in the correct location

Dim fs As Object

Dim BatFile As TextStream

Dim x As Integer

Dim y As Integer

Dim LineToWrite As String

Set fs = CreateObject("Scripting.FileSystemObject")

Set BatFile = fs.CreateTextFile("c:\tempPDF\Summary.htm", True)

'write the html for the page

'create the table

BatFile.WriteLine ("<table border = 1>")

For x = 1 To NumRows + 1

'start the row

BatFile.WriteLine ("<tr>")

'If x = 1 Then LineToWrite = LineToWrite & "<strong>"

For y = 1 To NumCols

LineToWrite = ""

'start the detail

LineToWrite = LineToWrite & "<td>"

If x = 1 Then LineToWrite = LineToWrite & "<strong>"

'we are writing oSheet.Range(letter & number).Value

'Chr(y + 64) turns 1..26 into A..Z, so this only handles up to 26 columns

If IsDate(oSheet.Range(Chr(y + 64) & x).Value) = True Then

LineToWrite = LineToWrite & Format(oSheet.Range(Chr(y + 64) & x).Value, "dd-mmm-yyyy")

Else

LineToWrite = LineToWrite & oSheet.Range(Chr(y + 64) & x).Value

End If

If x = 1 Then LineToWrite = LineToWrite & "</strong>"

'end the detail

LineToWrite = LineToWrite & "</td>"

BatFile.WriteLine (LineToWrite)

Next y

'end the row

BatFile.WriteLine ("</tr>")

Next x

BatFile.WriteLine ("</table>")

BatFile.WriteLine vbCrLf

BatFile.WriteLine vbCrLf

BatFile.Close

Set BatFile = Nothing

End Function

You've got something unwieldy to conquer.

My last suggestion was to modularize the SQL in the loops.

Next would be to modularize the html creation to one textfile per record in the recordset.

To open them one-by-one and concatenate them together is easily codable.
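One way to sketch the one-file-per-record idea, in Python rather than VBA: each record's HTML goes into its own fragment file, and a second pass concatenates the fragments in order. The part_NNNNNN.htm naming scheme, the temporary folder, and the sample fragments are all hypothetical.

```python
import tempfile
from pathlib import Path

def write_fragments(folder, records):
    """Write one HTML fragment file per (part_id, html) record; return paths in order."""
    paths = []
    for part_id, html in records:
        path = Path(folder) / f"part_{part_id:06d}.htm"  # hypothetical naming scheme
        path.write_text(html, encoding="utf-8")
        paths.append(path)
    return paths

def concatenate(paths, target):
    """Join the fragments, in the given order, into a single output file."""
    with open(target, "w", encoding="utf-8") as out:
        for path in paths:
            out.write(path.read_text(encoding="utf-8"))
            out.write("\n")

folder = tempfile.mkdtemp()
fragments = write_fragments(folder, [(1, "<p>Resistor</p>"), (2, "<p>Capacitor</p>")])
combined = Path(folder) / "all_parts.htm"
concatenate(fragments, combined)
print(combined.read_text(encoding="utf-8"))
```

Zero-padding the number in the file name keeps the fragments in record order even if they are later listed alphabetically.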

Here, though, is another thing regarding your 3035 error:

http://answers.microsoft.com/en-us/office/forum/office_2010-customize/how-do-i-prevent-run-time-error-3035-system/49b66089-4486-4a83-94e2-13eced66d686

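This, too, seems to be a one-off thing to run, although SetOption only lasts until the database engine is closed, so it needs to run once per session: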

DAO.DBEngine.SetOption dbMaxLocksPerFile, 1000000

Nick67
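For comparison, here is the same row-and-column walk as CreateSummaryHTML, sketched in Python over a plain list of rows instead of an Excel worksheet (an assumption made so the example is self-contained). Unlike the VBA version, it escapes cell text with html.escape and treats only real date objects as dates, whereas IsDate also accepts date-like strings.

```python
from datetime import date
from html import escape

def rows_to_html_table(rows):
    """Render rows as an HTML table; the first row is the header, wrapped in <strong>."""
    lines = ["<table border=1>"]
    for row_index, row in enumerate(rows):
        lines.append("<tr>")
        for value in row:
            if isinstance(value, date):
                # Like Format(..., "dd-mmm-yyyy"); %b gives English month names in the C locale.
                text = value.strftime("%d-%b-%Y")
            else:
                text = escape(str(value))
            if row_index == 0:
                text = f"<strong>{text}</strong>"
            lines.append(f"<td>{text}</td>")
        lines.append("</tr>")
    lines.append("</table>")
    return "\n".join(lines)

print(rows_to_html_table([["Part", "Listed"], ["A&B", date(2013, 3, 5)]]))
```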

The thing that makes this routine unique is that every 250 records or so, expands the filesize by 30MB, so 100,000 records, if stored in an Access table, would cause the table to grow by 12GB.

Why?

You aren't storing images in the tables, or files, are you?


Nick67,

Your procedure works, runs and sets the max query timeout to 2 minutes. EXPR1000 is the given default name for the column. 120000 is the new value set. This is global to the server and will remain that way until you issue a new Set Lock_Timeout command.

Just for the record, and assuming you are talking about SQL Server, SET LOCK_TIMEOUT has nothing to do with a query timeout. It is certainly not global to the server and will only remain in effect until the session completes.

Incidentally, the default is -1, which means it will wait forever.
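Anthony's distinction is easy to see in a small experiment. SQL Server can't be run in a self-contained snippet, so this Python sketch uses the standard-library sqlite3 module as a loose analogy: its timeout= argument plays roughly the role of SET LOCK_TIMEOUT, bounding how long a statement waits for a lock that another connection holds. It illustrates only the lock-wait side; the file and table are made up.

```python
import os
import sqlite3
import tempfile
import time

def wait_for_lock(wait_seconds):
    """Try to start a write while another connection holds the write lock.

    Returns (error_message_or_None, elapsed_seconds)."""
    path = os.path.join(tempfile.mkdtemp(), "locks.db")

    # Autocommit mode (isolation_level=None) so BEGIN/ROLLBACK are under our control.
    holder = sqlite3.connect(path, isolation_level=None)
    holder.execute("CREATE TABLE SelectedParts (PartID INTEGER PRIMARY KEY, Title TEXT)")
    holder.execute("BEGIN IMMEDIATE")  # take the write lock and keep holding it
    holder.execute("INSERT INTO SelectedParts (Title) VALUES ('held')")

    # timeout= bounds how long THIS connection waits for a lock; it is not a
    # limit on how long a statement may run once it has the locks it needs.
    waiter = sqlite3.connect(path, timeout=wait_seconds, isolation_level=None)
    start = time.monotonic()
    try:
        waiter.execute("BEGIN IMMEDIATE")
        waiter.execute("ROLLBACK")
        message = None
    except sqlite3.OperationalError as exc:
        message = str(exc)
    elapsed = time.monotonic() - start

    holder.execute("ROLLBACK")
    holder.close()
    waiter.close()
    return message, elapsed

message, elapsed = wait_for_lock(0.25)
print(message, round(elapsed, 2))  # the message should be "database is locked"
```

The waiting connection gives up after about the configured wait, no matter how fast its own statement would have been.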


ASKER

Nick,

No, I'm not storing any images in the table, just links to images.

I'm surprised at that file size too; I guess it's just a lot of characters.

I am, however, storing the code in the table, not in a file as I'm sure you understand.

I have made the code changes as per your posting, and am testing now. Just logged in to report back and saw Anthony's note...

Anthony,

Are you suggesting the Set Lock Timeout is not causing the problem? If so, what do you think is?

It does look like the process times out. It does not encounter an error; it just stops running with the "Timeout expired" message (more than an hour after the routine starts).


ASKER CERTIFIED SOLUTION


ASKER

Nick,

I did experience the timeout error again, so I look forward to trying this new line of code:

oDB.QueryTimeout = qq

I added the line after about 15 of the 35 or more queries that I use in this routine.

I'll set qq in the initialization setup, so I can try different delays.

It's going to tie up the machine for several hours before I get any results. With that said, are you still feeling good that this is the line to add? My concern is that I'm not actually running queries, am I?

i.e.:

Set oRS = oDB.OpenRecordset(strsq

Do While Not oRS.EOF

<<Does this count as a query?>>

oRS.MoveNext

Loop


are you still feeling good that this is the line to add?

All I can do is give you suggestions.

You haven't posted anything that would permit anything else.

I don't think you are addressing the root cause, but again, I can only guess.

You have something causing a query timeout.

Generally, those occur when something seizes a lock on a table or row and can't/won't release it.

Then the next item that wants to access it won't be permitted, and a timeout will occur.

You have a mix of technologies on the go.

There has been a suggestion that you should move all the data to SQL Server.

I'd concur with that.

I've suggested making your logic more atomistic, breaking your looping into two components.

An outer loop that pulls only enough fields to permit the inner workings to create their own, smaller SQL statements.

Other things to look at are read-only recordsets versus dynasets.

Anytime you ask for a dynaset, you are asking for a read-write dataset.

There's nothing wrong with that, but if two separate bits of your code try to alter the same row of the same table, things may collide and deadlock.

Breaking apart a monolithic procedure into numerous smaller functions can make the result more readable, but also more fault-tolerant as objects get closed out as their function ends.

So am I feeling good? I'm afraid that, from my end, it's a coin toss.

<<Does this count as a query?>>

Yes.

We call our saved query definitions 'queries', but in reality a query is any SQL statement that you execute, whether it is saved as a QueryDef or is just raw text executed to open a recordset.

Dim db As DAO.Database
Dim qdf As DAO.QueryDef
Dim rs As DAO.Recordset
Dim SQL As String

Set db = CurrentDb
SQL = "SELECT SomethingNice FROM TheGoodTable;"
Set qdf = db.QueryDefs("SomeS…")
qdf.SQL = SQL

It's pretty much immaterial whether you do this:

Set rs = qdf.OpenRecordset(dbOpenDy…)

or this:

Set rs = db.OpenRecordset(SQL, dbOpe…)

They're both queries.
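The same point in a small, runnable form, using Python and SQLite, where a view plays the role of a saved QueryDef. The table and column names come from the example above; the view name SavedQueryExample and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE TheGoodTable (SomethingNice TEXT);
INSERT INTO TheGoodTable VALUES ('a'), ('b');
-- A saved, named query: the rough equivalent of an Access QueryDef.
CREATE VIEW SavedQueryExample AS SELECT SomethingNice FROM TheGoodTable;
""")

# Running the saved query...
via_saved = conn.execute("SELECT * FROM SavedQueryExample ORDER BY SomethingNice").fetchall()
# ...or sending the same SQL as raw text: either way the engine executes a query.
via_text = conn.execute("SELECT SomethingNice FROM TheGoodTable ORDER BY SomethingNice").fetchall()

print(via_saved == via_text)  # True
```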

ASKER

Great. That sounds good. I will try it again tonight.

BTW... <are you still feeling good that this is the line to day? >

should have been <are you still feeling good that this is the line to add? >

We can thank auto-correct for that sloppy correction.

BTW, if you would like to see the end product of this process:

http://www.ebay.com/itm/15-PCS-LINEAR-IC-LF444CN-OP-AMP-QUAD-GP-18V-14-PIN-MDIP-RAIL-NATIONAL-444-/281647691811

I'm not sure if you can see it without logging into eBay. A good portion of the code is repeated from listing to listing; ultimately, to upload the data we need one row per item.

Bumping up the query timeout limit definitely won't hurt a machine that isn't doing end-user work.

Bumping the query timeout to 2 minutes would be agony to an end user waiting for something to error out.

But it may be what you need, because you WANT to wait long enough for the query to try to complete.

Of course, if the cause of the timeout doesn't resolve itself within the course of the period you select (say because the issue is a lock of some sort that doesn't free itself) it won't help, either.
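A small simulation of that trade-off in Python, with a threading.Lock standing in for a database row lock and acquire(timeout=...) standing in for the query timeout. This is an analogy, not how SQL Server implements locking: a longer wait succeeds only if the blocker lets go within the window, and when it never lets go, no timeout value helps.

```python
import threading


def wait_for_row(row_lock: threading.Lock, timeout_s: float) -> bool:
    """Wait up to timeout_s for the 'row'; True if we got it (and released it again)."""
    if row_lock.acquire(timeout=timeout_s):
        row_lock.release()  # pretend to do the work, then let go
        return True
    return False  # the analogue of "Timeout expired"


# Case 1: another job holds the row and finishes about 0.3 s later.
row_lock = threading.Lock()
row_lock.acquire()
releaser = threading.Timer(0.3, row_lock.release)  # a plain Lock may be released by another thread
releaser.start()
short_wait = wait_for_row(row_lock, timeout_s=0.05)  # gives up before the blocker is done
long_wait = wait_for_row(row_lock, timeout_s=5.0)    # outlasts the blocker
releaser.join()

# Case 2: the blocker never lets go, so waiting longer changes nothing.
stuck_lock = threading.Lock()
stuck_lock.acquire()
stuck_wait = wait_for_row(stuck_lock, timeout_s=0.5)

print(short_wait, long_wait, stuck_wait)  # False True False
```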

We are treating symptoms and not causes.

I went to the page, viewed the source, and saved it.

428 KB, so ~0.5 MB

So your size statements make sense.
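The arithmetic behind that, as a quick check. It assumes each listing's HTML is about the size of the saved page (an overestimate, since the saved page also includes eBay's own markup) and roughly 10,000 listings, as in the original question.

```python
PAGE_KB = 428        # size of the saved listing page
LISTINGS = 10_000    # approximate number of records the routine processes
ACCESS_LIMIT_GB = 2  # maximum size of an Access database file

total_gb = PAGE_KB * LISTINGS / 1024 / 1024
print(f"about {total_gb:.1f} GB, over the Access limit: {total_gb > ACCESS_LIMIT_GB}")
# about 4.1 GB, over the Access limit: True
```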

How much is boilerplate that you could do a find and replace on?
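One way to act on that, sketched in Python: keep the shared boilerplate in a single template and store only the per-item fields, filling them in when the upload file is generated. The template text and field names are hypothetical; string.Template and html.escape are standard-library functions, and escaping guards against part descriptions that contain characters such as < or &.

```python
from html import escape
from string import Template

# Boilerplate shared by every listing, stored once; $name marks a per-item value.
LISTING_TEMPLATE = Template(
    "<div class='listing'>"
    "<h1>$title</h1>"
    "<p>Package: $package</p>"
    "<p>Quantity: $qty</p>"
    "</div>"
)


def render_listing(item: dict) -> str:
    """Fill the shared boilerplate with one item's HTML-escaped fields."""
    safe = {key: escape(str(value)) for key, value in item.items()}
    return LISTING_TEMPLATE.substitute(safe)


listing_html = render_listing({"title": "LF444CN quad op amp", "package": "14-pin MDIP", "qty": 15})
print(listing_html)
```

Stored this way, each row could hold a few short fields rather than roughly half a megabyte of finished HTML.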

Nick67,

@Anthony Perkins has forgotten more about SQL Server than I will ever know

Thank you. That was very kind of you, but you really should not underestimate your SQL Server knowledge that much. I am sure you know more than you say you do.

I don't want my app to wait for eternity for a lock to clear -- for the end user, that appears as an application hang.

I agree with you 100%. That is a lousy situation. Even the default CommandTimeout of 30 seconds is too long, don't you think?

@acperkins

Mea culpa on LOCK_TIMEOUT. How to lengthen the timeout for ADO or DAO is in ID: 40698632.

My bad.

I have a few heavy, ugly queries that lengthening it helps with...

But only because I knock it down to 15 seconds in the first place.

I am sure you know more than you say you do.

I've made a SQL Server Express 2005 and now 2008 R2 instance run the backend of the business very well for about a decade now.

But only because they were very good products to start with, and I haven't been an idiot with them. :)

So why don't we address the real problem as I see it.

Your query looks like this (using aliases for clarity):

SELECT s.*,
    c.Notes, c.TasPath1, p.PackageCode, m.IPSEname, m.ShortName, m.FullName, m.Abbreviation,
    p.Package, p.PackageImage, p.Leads, p.PackageMaterial, p.PackageName, p.MountingType,
    p.sellingQuantityIncrement, p.EnforceSellingQuantity,
    c.OrderNumber, c.DescriptionPrimary, c.title80, c.DescriptionRoot, c.Description,
    c.FamilyName, c.GenericName, c.PartClass, c.PartFamily, c.PartFamily2,
    c.FamilyDescription, c.Pb, c.DocName, c.DocName2, c.MSL, c.EURoHs, c.DataSheet,
    c.DateObsolete, c.ECCN, c.ScheduleB, c.PackageLength, c.PackageWidth, c.PackageHeight,
    c.SeatedPlaneHeight, c.PinPitch, c.PackageDiameter, c.MaximumReflow, c.OrderNoVerified,
    c.SEdate, c.SEautoscan, c.DistiVersion, c.OriginalCost, c.RecPrice, c.AskPrice,
    c.Value, c.tolerance, c.FullReelQty, c.TapeWidth, c.caseSize, c.TCR, c.Power,
    c.Voltage, c.OperatingRange, c.ReelSize, c.WebImage, c.SeriesName,
    c.Title255, c.SeriesID, c.PartName, c.ClassFamily,
    c.AlternateOrderNumber, c.AlternateOrderNumber2, c.AlternateOrderNumber3,
    c.AlternateOrderNumber4, c.AlternateOrderNumber5, c.TapePitch
FROM SelectedParts s
INNER JOIN ComponentMaster c ON s.SearchNumber = c.SearchNumber AND s.LineID = c.LineID
LEFT JOIN ManufacturerMaster m ON c.LineID = m.LineID
LEFT JOIN PackageMaster p ON c.PartClass = p.PartClass AND c.PackageCode = p.PackageCode

Step 1 would be to run that in SSMS and look at the Actual Execution Plan. If you are not comfortable doing that, or don't want to post it here, then we will have to address it the old-fashioned way: a sort of Q&A, if you will.

1. How long did it take to run in SSMS?

2. How many rows did it return?

3. Why are you still using *? Do you really need all the columns in SelectedParts? This is very typical of sloppy MS Access code, where you could get away with it. It is never a good idea in SQL Server (or Sybase, Oracle, PostgreSQL, or even MySQL).

4. Can you post the schema for all the tables involved (SelectedParts, ComponentMaster, ManufacturerMaster and PackageMaster), including all the indexes defined?

@acperkins

Part of the evil is that it's coming from three separate datasources.

Only one of them is SQL Server -- and that one only because @pcalabria hit the 2 GB file size limit for Access.

The other two remain in Access.

The suggestion has been put on the table that SQL Server Migration Assistant for MS Access should be used to move ALL the data to SQL Server. It's easy to say ... but ensuring nothing breaks and pushing out new frontends is ... a bit time-consuming.

What's the upper limit for columns returned in SQL Server? Part of why @pcalabria has things structured the way he does (Select *) is that he's got more than 255 'needed' fields.

Of course, I can rewrite the routine that fails so it could be run as a standalone procedure to see what happens, but it would be so much simpler to just solve the timeout expired problem, since the existing code otherwise performs very well. (Functionally, at least!)

I think, and I think you'd agree, that he really does need to refactor this.

It's always sad when you hit the day that things will no longer scale to what you need :)

On the other hand, it means you built something successful.

Nick67

Part of the evil is that it's coming from three separate datasources.

Then I am afraid I cannot help much. My knowledge of MS Access is even less than what I have forgotten about SQL Server.

What's the upper limit for columns returned in SQL Server?

You can return far more columns than that (SQL Server allows up to 4,096 in a SELECT list), so that is not the problem. The problem is that there is a penalty for each extra byte that needs to travel over the network.

he does (Select *) is that he's got more than 255 'needed' fields.

That is unfortunate. But if they have tables with that many columns they have bigger problems than a pesky timeout that can be handled any number of ways.

I think, and I think you'd agree, that he really does need to refactor this.

Absolutely. As it stands now, the very best you can do is return all the rows needed from SQL Server using a firehose (forward only, read only) cursor and then deal with them in MS Access.
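What a firehose read looks like, sketched in Python with SQLite: the result set is read once, front to back, in batches, and never updated through the cursor. In DAO the nearest equivalent would be opening the recordset with dbOpenForwardOnly; the table contents and batch size below are illustrative.

```python
import sqlite3


def stream_rows(conn: sqlite3.Connection, sql: str, params=(), batch_size: int = 500):
    """Yield rows from a query one batch at a time, reading forward only."""
    cur = conn.execute(sql, params)
    try:
        while True:
            batch = cur.fetchmany(batch_size)
            if not batch:
                break
            yield from batch
    finally:
        cur.close()  # runs when the generator finishes or is closed


# Hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE SelectedParts (SearchNumber TEXT)")
conn.executemany("INSERT INTO SelectedParts VALUES (?)", [(f"P{i}",) for i in range(1234)])

count = sum(1 for _ in stream_rows(conn, "SELECT SearchNumber FROM SelectedParts"))
print(count)  # 1234
```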

ASKER

Good morning.

I would again like to thank both of you for your ongoing help.

Here is some background, answers and comments:

The ComponentMaster, ManufacturerMaster, and PackageMaster databases are MS Access databases. They are populated and read by an application I first developed in 2000 and have continually updated since, with more than a thousand forms, queries, reports, and procedures. It's used each day by my staff for everything they need to do. Moving these tables to SQL Server is a huge project, as the SQL statements developed for Access are not interchangeable with the statements required by SQL Server. As a result, I migrate tables to SQL Server selectively, when size becomes the problem.

The SelectedParts table started as an MS Access table but was migrated to SQL Server (as Nick explained) because of the size restriction in MS Access. This table is used by a brand-new routine developed to list our inventory in e-commerce channels. It collects and creates the fields each channel needs, and organizes them into a table with one row per inventory item.

We don't expect to run this new routine very often. Once it generates a listing for us, we can leave the listing in place until the item sells, so performance is not critical. The routine can be run after hours, so it doesn't matter whether it takes three hours or six, as long as when we come back to work the "Process Completed" message is on the screen and not an error message.

<Select *> I can get rid of Select * fairly easily, if you believe it will help.

<firehose> I can use a forward-only cursor if it makes a difference.

<Actual Execution Plan> I've read a bit about this, but never created one or looked into this. I'm willing to try, but I will resist publishing, in a public forum, any information that could help our competitors mimic what I've done.

Moving these tables to SQL Server is a huge project as the SQL statements developed for Access are not interchangeable with the statements required by SQL Server.

The suggestion has been put on the table that SQL Server Migration Assistant for MS Access should be used to move ALL the data to SQL Server.

If you are not using this tool, you should be. Unless you have done unhappy things like attachments, BLOBs and hyperlink fields (and I don't think you have), this tool pretty much seamlessly moves Access data to SQL Server.

The SQL syntax for using a linked table is exactly the same as using the local table was.

The creation of views and stored procedures does require some syntax changes, but really not many, and for the most part they are straightforward. Single quotes in place of double quotes, percent signs in place of asterisks, and passing in dates as properly formatted string literals instead of between hashes are most of it. Our friend Nz() is replaced by IsNull(), and IIF() by CASE, but generally I've found that I can create a query in Access, copy the SQL, paste it into SSMS, and sort out its issues in relatively short order for views and simple stored procedures.
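A deliberately naive sketch of the mechanical part of those changes, in Python with regular expressions: #m/d/yyyy# dates become 'yyyymmdd' string literals, LIKE patterns swap * for %, double-quoted strings become single-quoted, and Nz( becomes IsNull(. It is an illustration, not a converter. It ignores quotes embedded inside strings, assumes US-style dates, leaves IIF() alone, and a one-argument Nz() still needs a second argument before IsNull() will accept it. The sample query is hypothetical.

```python
import re
from datetime import datetime


def access_to_tsql(sql: str) -> str:
    """Apply a few simple Access-to-T-SQL rewrites (illustrative only)."""

    # 1. #m/d/yyyy# date literals -> 'yyyymmdd' string literals
    def _date(match):
        d = datetime.strptime(match.group(1), "%m/%d/%Y")
        return "'" + d.strftime("%Y%m%d") + "'"

    sql = re.sub(r"#(\d{1,2}/\d{1,2}/\d{4})#", _date, sql)

    # 2. LIKE "abc*" -> LIKE 'abc%'  (only inside LIKE patterns, so SELECT * is untouched)
    def _like(match):
        return "LIKE '" + match.group(1).replace("*", "%") + "'"

    sql = re.sub(r'LIKE\s+"([^"]*)"', _like, sql, flags=re.IGNORECASE)

    # 3. Any remaining "text" literals -> 'text'
    sql = re.sub(r'"([^"]*)"', r"'\1'", sql)

    # 4. Nz( -> IsNull(
    sql = re.sub(r"\bNz\(", "IsNull(", sql, flags=re.IGNORECASE)
    return sql


before = ('SELECT PartName FROM ComponentMaster WHERE PartName LIKE "LF444*" '
          'AND SEdate > #1/15/2015# AND Nz(Notes, "") <> ""')
print(access_to_tsql(before))
# SELECT PartName FROM ComponentMaster WHERE PartName LIKE 'LF444%' AND SEdate > '20150115' AND IsNull(Notes, '') <> ''
```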

The biggest adjustment is that in SQL Server you want to use set logic, not looping logic. You have the tools to do so, but your looping tools are pretty much gone (cursors are generally an evil crutch, to be used as a last resort).

12 GB of data when it's done, yes?

That isn't happening in Access.

I take it that is going into SQL Server, so you will need something read-write on that end.

The tool creates new tables prefixed with dbo_

I renamed those all to the old table names and then NOTHING needed to change in code or in queries.
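A sketch of that renaming step as a plan you could review before applying it (for example with DoCmd.Rename in Access), written in Python. It assumes the linked tables arrive as dbo_<name> and flags any target name that is still taken, for instance by an old local table kept as a safeguard. The table names in the example come from this thread; the function name and the set of existing names are hypothetical.

```python
def plan_renames(linked_names, existing_names, prefix="dbo_"):
    """Map each 'dbo_X' linked table to 'X'; collect those whose target name is taken."""
    renames, conflicts = {}, []
    taken = set(existing_names)
    for name in linked_names:
        if not name.startswith(prefix):
            continue  # not created by the migration; leave it alone
        target = name[len(prefix):]
        if target in taken:
            conflicts.append(name)
        else:
            renames[name] = target
            taken.add(target)
    return renames, conflicts


renames, conflicts = plan_renames(
    ["dbo_SelectedParts", "dbo_ComponentMaster", "PackageMaster"],
    existing_names=["ComponentMaster", "PackageMaster"],
)
print(renames)    # {'dbo_SelectedParts': 'SelectedParts'}
print(conflicts)  # ['dbo_ComponentMaster']
```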

<Actual Execution Plan>I've read a bit about this, but never created one or looked into this.

Unfortunately that is not a valid option, as there is only one table in SQL Server.

ASKER

Nick, you make it sound so easy! :-)

I would love to find a reasonable way to migrate to SQL Server, and I have used the SQL Server Migration Assistant for MS Access to move six of my estimated 1000 tables. Most of the time it has worked very well, but not all the time; I have run into numerous problems. In fact, it's one of my nightmares!

Imagine you have more than 1000 routines, some of which you haven't looked at in 10+ years. Some of the routines use up to 30 different SQL statements, and of course some use none.

You mentioned a few problems I haven't encountered yet, so it's worse than I thought! LOL

Seriously, replacing * with % is annoying, but forgetting to remove the # around dates simply causes the query to return no results, without an error message that I can act on! Some query somewhere just doesn't do what it was supposed to do, and I don't remember what that was, or whether I still need it to do it, but it probably took me a day to get it working in the first place, and that's only one routine. Ouch!

With this said, there are a few more tables I will need to migrate, so I'll be here looking for you and the other experts to help throw some water on the next fire.

BTW, I think the last change, objDB.QueryTimeout = qq, fixed the current problem.

The code's been running 5 hours without an error message... so far!

I'll report back when the process finishes!

Seriously, replacing * with % is annoying, but forgetting to remove the # around dates simply causes the query to return no results, without an error message that I can act on! Some query somewhere just doesn't do what it was supposed to do, and I don't remember what that was, or whether I still need it to do it, but it probably took me a day to get it working in the first place, and that's only one routine. Ouch!

And you are focusing on the easy catches; what about the fact that T-SQL is not the same as the SQL dialect used in MS Access? So unless you are prepared to re-design and re-write your app, you had best stay with MS Access.

Access on the front-end, certainly.

But on the back-end SQL Server.

UNLESS you are moving data operation into SQL Server via views and stored procedures, very little on a working Access app needs to change.

You run SSMA; it keeps your old local tables as a safeguard and pushes the schema and data to SQL Server.

The one real complaint I had was that the new tables were all prefixed 'dbo_'.

Strip that off and it was pretty much good to go.

Back in the day, there was grief with bit/Boolean fields, but that's been fixed.

In code, so long as you referred to TRUE and not -1, throwing dbSeeChanges into each Set rs = db.OpenRecordset statement was the major PITA.

I've flanged three subsidiary backends into the main application's SQL Server database when keeping them separate really no longer served any purpose. There wasn't much grief to it.

@pcalabria's got me by about an order of magnitude.

I've got about 200 tables, 500 queries and 110K lines of code.

BUT

I DO NOT have much SQL in VBA code.

QueryDefs are my friend.

Hand-editing SQL in the VBA editor is NOT any fun, and I have few instances of hand-coded SQL more complex than a simple SELECT of perhaps 10 fields.

Most heavy lifting is done with recordsets, not SQL executed from VBA code.

very little on a working Access app needs to change.

Unless of course you are using SQL, in which case all bets are off and you have no recourse but to re-design and re-write.

ASKER

Thanks again for your help.

Nick, I have to agree completely with Anthony Perkins on this one:

<And you are focusing on the easy catches; what about the fact that T-SQL is not the same as the SQL dialect used in MS Access? So unless you are prepared to re-design and re-write your app, you had best stay with MS Access.>

Although, if I had ten dollars for each time an expert here has suggested I convert to MS SQL, I wouldn't need to write this software... OK, only kidding. But there is so much SQL in routines I haven't touched for so many years that my strategy is to move tables to SQL Server only when necessary, usually due to size limitations.

As my MS Access backend files grow to about 1 GB, I split them. That seems to work pretty well and is pretty easy, as long as it's not one specific table that is growing... which is a problem I will be facing very soon.

Two more things...

Anthony was correct: SET LOCK_TIMEOUT did not fix the problem. Nick, your suggestion to use oDB.QueryTimeOut=qq solved my problem completely! Thank you both very much. Your help has been incredible!

DOEVENTS COMMENT

@Nick

One more note: DoEvents is one of my best friends, and this is why. I use it when things are going to take a while and I want the user to see screen updates to know what's going on. I'll display a progress indicator, e.g. "Processing record 12,000 of 585,000", and then use DoEvents to keep the user from rebooting because the screen turned white. I'm not sure the downsides you mentioned apply to my apps, but the upside, nice-looking updated screens, is worth a slight performance penalty. BTW, I only display updates every 1000 records or so, as it seems the display slows things down.
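The pattern described here (do the work on every record, refresh the display only every N records) sketched in Python, with a report callback standing in for updating a label on the form and calling DoEvents. The function and parameter names are hypothetical.

```python
def process_all(records, handle, report, every=1000):
    """Call handle() on every record; call report() every `every` records and at the end."""
    total = len(records)
    for i, record in enumerate(records, start=1):
        handle(record)
        if i % every == 0 or i == total:
            report(f"Processing record {i:,} of {total:,}")


messages = []
processed = []
process_all(list(range(2500)), processed.append, messages.append)
print(messages)
# ['Processing record 1,000 of 2,500', 'Processing record 2,000 of 2,500', 'Processing record 2,500 of 2,500']
```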

ASKER

Great help, guys. Setting QueryTimeout worked.

oDB.QueryTimeOut=qq solved my problem completely!

Not to rain on your parade, but lengthening the timeout is not a solution, it is a workaround. See my previous comment.

The ODBC driver is timing out, so you either have to optimize your query (as it stands, it could do with some tuning) or, if you are unable to do that, as a last resort you have no choice but to lengthen the timeout. Here is why: unless you have set the timeout to infinite (not recommended), you may be revisiting this to increase the timeout again as the data grows. I believe in American parlance that is called "punting the ball". If you have to do this where there is a user interface, then this is a recipe for disaster.

ASKER

I agree Anthony, and thank you for your thoughts.

Just to be clear, however, the only thing this routine does is cycle through the records, start to finish, forward only, to create HTML code, which is stuffed into a single field in the SQL table.

I'm wondering whether there may be any benefit in trying a different cursor, but my thought is that it simply takes a long time to cycle through all the records. I don't believe it is hitting any record locks, because I was the only person logged into the system.

I used the variable qq so that I can set it via the user interface, with a default value and the option to increase it if the routine fails in the future.
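On the cursor question, one thing to keep in mind with DAO: forward-only and snapshot recordsets are generally read-only, so writing the HTML back needs an updatable dynaset (and, on a linked SQL Server table, a unique index/primary key plus dbSeeChanges when the table has an IDENTITY column). A minimal sketch, assuming a hypothetical table `tblItems` with fields `ItemName`, `Description` and `HtmlCode`; the `HtmlEncode` helper is also hypothetical and only escapes the three characters that break markup:

```vba
Option Compare Database
Option Explicit

' Hypothetical helper: escape characters that would break the HTML.
Private Function HtmlEncode(ByVal s As String) As String
    s = Replace(s, "&", "&amp;")   ' must come first
    s = Replace(s, "<", "&lt;")
    s = Replace(s, ">", "&gt;")
    HtmlEncode = s
End Function

' Hypothetical table/fields: tblItems(ItemName, Description, HtmlCode).
Public Sub BuildHtmlForAllItems(ByVal db As DAO.Database)
    Dim rs As DAO.Recordset

    ' Dynaset (not forward-only) so the recordset can be edited;
    ' dbSeeChanges is required for SQL Server tables with an IDENTITY column.
    Set rs = db.OpenRecordset("SELECT ItemName, Description, HtmlCode FROM tblItems", _
                              dbOpenDynaset, dbSeeChanges)
    Do While Not rs.EOF
        rs.Edit
        rs!HtmlCode = "<h2>" & HtmlEncode(Nz(rs!ItemName, "")) & "</h2>" & _
                      "<p>" & HtmlEncode(Nz(rs!Description, "")) & "</p>"
        rs.Update
        rs.MoveNext
    Loop

    rs.Close
    Set rs = Nothing
End Sub
```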
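A sketch of that UI-driven setting, assuming a hypothetical text box `txtTimeout` on the form; the 300-second default and the one-day cap are arbitrary illustrative values, not anything from the original code:

```vba
Option Compare Database
Option Explicit

Private Const DEFAULT_TIMEOUT_SECONDS As Long = 300   ' hypothetical default
Private Const MAX_TIMEOUT_SECONDS As Long = 86400     ' hypothetical cap (one day)

' Returns the timeout typed by the user, or the default when the entry
' is empty, non-numeric, negative or too large. 0 means "no timeout" in DAO.
Public Function GetQueryTimeout(ByVal vInput As Variant) As Long
    If IsNumeric(vInput) Then
        If CDbl(vInput) >= 0 And CDbl(vInput) <= MAX_TIMEOUT_SECONDS Then
            GetQueryTimeout = CLng(vInput)
            Exit Function
        End If
    End If
    GetQueryTimeout = DEFAULT_TIMEOUT_SECONDS
End Function
```

Usage from the form would be along the lines of `oDB.QueryTimeout = GetQueryTimeout(Me.txtTimeout)`.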

DoEvents vs Sleep

Sleep can be a much more efficient way of keeping the UI responsive depending upon why you need the VBA to pause.

<Unless of course you are using SQL, in which case all bets are off and you have no recourse but to re-design and re-write.>

You can use Access-flavor SQL within Access against ODBC-linked back-end tables without any alteration beyond adding dbSeeChanges and making sure you use True when you mean true rather than -1.
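A minimal sketch of both points, assuming a hypothetical ODBC-linked table `tblItems` with a bit column `Published` and an IDENTITY key. The SQL is ordinary Access SQL; the only additions are the `dbSeeChanges` option and writing `True` rather than `-1` (a SQL Server bit stores true as 1, not -1):

```vba
Option Compare Database
Option Explicit

' Hypothetical linked table tblItems with a bit column Published.
Public Sub MarkAllPublished()
    Dim db As DAO.Database
    Set db = CurrentDb

    ' Access-flavor SQL against the linked table; write True, not -1,
    ' and let Access translate it for the bit column on the server.
    db.Execute "UPDATE tblItems SET Published = True WHERE Published = False", _
               dbFailOnError Or dbSeeChanges

    Debug.Print db.RecordsAffected & " record(s) updated"
    Set db = Nothing
End Sub
```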

If you want to use views, stored procedures and functions via pass-throughs, then you need to know T-SQL, but you have to build those things in SQL Server Management Studio anyway, so Books Online and Google are there to help you knock them into shape. Until you REALLY move beyond what Access is capable of, the syntax changes aren't major.

<Although if I had ten dollars for each time an expert here has suggested I convert to MS SQL, I wouldn't need to write this software... OK, only kidding, but there is so much SQL in routines that I haven't touched for so many years that my strategy is to move tables to SQL Server only when necessary, usually because of size limitations.>

I would respectfully go the other direction. I'd move the small tables first. You can use Object Dependencies to see which queries and objects use them, and Ctrl+F to find where they are used in code. The smaller and less critical they are, the easier that will be. Cut your teeth on those. Comment and document the code as you go. Work your way toward the bigger tables. You will have been perusing the schema and code base as you work towards the more difficult cases, and that will be useful. Eventually, they'll all be in SQL Server and you'll be done. Your present approach is crisis management. That's not any fun. And if I recall correctly, you've had your own personal crises to deal with. Long-term maintainability should be moving to the forefront, and avoiding SQL Server except as a crutch is not going to make that easier.

ASKER

Sleep?

Nick, I feel like you have forgotten more Access VBA than I've ever known! I'd never heard of Sleep!

When I want Access to pause, I've been using a routine I wrote called Delay, where I pass the number of seconds and loop through DoEvents. Perhaps I'll try "sleeping"... (It would be nice to get some sleep with all of the crisis management I've been doing!)

With that said, I use DoEvents not when I want a delay so the user can read something on the screen, but when my video card is too slow to display something like Me.txtAnswer = "12345". On an XP machine with Dell 3000 MB video, if I run a query immediately after that statement, Access never gets a chance to display the answer before the query starts running, so the answer, and the user, must wait. DoEvents placed before the query gives Access, or at least my video card, a chance to display the result before the query starts.

I've never heard of "object dependency" either. Time to start researching...

BTW... Access queries on SQL tables also need the # removed and * changed to %... I'm not sure if there are other changes like those... still learning.
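A sketch of that situation in a form module, assuming a hypothetical text box `txtAnswer`, button `cmdRun` and saved action query `qryHeavy`. `Me.Repaint` asks just this form to redraw; `DoEvents` additionally lets Windows process any other pending messages before the long query ties up the thread:

```vba
Option Compare Database
Option Explicit

' Form-module code; txtAnswer, cmdRun and qryHeavy are hypothetical names.
Private Sub cmdRun_Click()
    Me.txtAnswer = "12345"

    ' Give Access a chance to paint the new value before the query starts.
    Me.Repaint
    DoEvents

    ' qryHeavy must be a saved action query for Execute to accept it.
    CurrentDb.Execute "qryHeavy", dbFailOnError Or dbSeeChanges
End Sub
```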

Sleep is a Windows API (WinAPI) function.

You can call the WinAPI from VBA, but the functions are documented with C/C++ signatures, so declaring them correctly is not for the faint of heart.

Highly useful, though.

For example, you can get the computer name and login name of the current Access user and machine.

Start here

http://access.mvps.org/access/index.html
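As a taste of what those declarations look like, a sketch that reads the computer name and Windows login name. `GetComputerNameA` (kernel32) and `GetUserNameA` (advapi32) are real Windows API functions; the wrapper names `fComputerName`, `fLoginName` and `TrimNull` are hypothetical. The `#If VBA7` branch adds `PtrSafe`, which 64-bit Office requires; Access 2000/2003 use the older branch:

```vba
Option Compare Database
Option Explicit

#If VBA7 Then
    Private Declare PtrSafe Function GetComputerName Lib "kernel32" Alias "GetComputerNameA" _
        (ByVal lpBuffer As String, nSize As Long) As Long
    Private Declare PtrSafe Function GetUserName Lib "advapi32.dll" Alias "GetUserNameA" _
        (ByVal lpBuffer As String, nSize As Long) As Long
#Else
    Private Declare Function GetComputerName Lib "kernel32" Alias "GetComputerNameA" _
        (ByVal lpBuffer As String, nSize As Long) As Long
    Private Declare Function GetUserName Lib "advapi32.dll" Alias "GetUserNameA" _
        (ByVal lpBuffer As String, nSize As Long) As Long
#End If

' Cut a C-style string at its terminating null character.
Private Function TrimNull(ByVal s As String) As String
    Dim p As Long
    p = InStr(s, vbNullChar)
    If p > 0 Then TrimNull = Left$(s, p - 1) Else TrimNull = s
End Function

' Returns the machine name, or "" if the call fails.
Public Function fComputerName() As String
    Dim buf As String, lngLen As Long
    lngLen = 256
    buf = String$(lngLen, vbNullChar)
    If GetComputerName(buf, lngLen) <> 0 Then fComputerName = TrimNull(buf)
End Function

' Returns the Windows login name, or "" if the call fails.
Public Function fLoginName() As String
    Dim buf As String, lngLen As Long
    lngLen = 256
    buf = String$(lngLen, vbNullChar)
    If GetUserName(buf, lngLen) <> 0 Then fLoginName = TrimNull(buf)
End Function
```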

Sleep is pretty straightforward.

Put this in a standard code module, not a form or report module:

Public Declare Sub Sleep Lib "kernel32" (ByVal dwMilliseconds As Long)

A pause built on DoEvents is usually a loop that keeps calling DoEvents until a Timer-based check says the wait is over.

That's flat-out useless busywork for the processor and thread, wasting CPU cycles.

Sleep tells the OS not to schedule the VBA thread again until x milliseconds have elapsed. If you need the VBA to pause while some external process completes (a file copy, PDF creation, etc.), Sleep is more efficient.
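A sketch of a Sleep-based replacement for a DoEvents-loop delay routine. `Pause` is a hypothetical name; the declaration is made `Private` so the block is self-contained, with a `PtrSafe` branch for 64-bit Office. Sleeping in 100 ms slices keeps the CPU idle, while the one DoEvents per slice still lets the Access window repaint during a long wait. Counting slices instead of comparing against `Timer` also avoids the midnight rollover of `Timer`:

```vba
Option Compare Database
Option Explicit

#If VBA7 Then
    Private Declare PtrSafe Sub Sleep Lib "kernel32" (ByVal dwMilliseconds As Long)
#Else
    Private Declare Sub Sleep Lib "kernel32" (ByVal dwMilliseconds As Long)
#End If

' Hypothetical replacement for a DoEvents-loop Delay routine.
Public Sub Pause(ByVal dblSeconds As Double)
    Dim lngSlices As Long
    Dim i As Long

    lngSlices = CLng(dblSeconds * 10)   ' number of 100 ms slices
    For i = 1 To lngSlices
        Sleep 100     ' thread is idle; no CPU spent spinning
        DoEvents      ' one chance per slice to repaint / handle messages
    Next i
End Sub
```

Usage: `Pause 2.5` waits roughly two and a half seconds.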

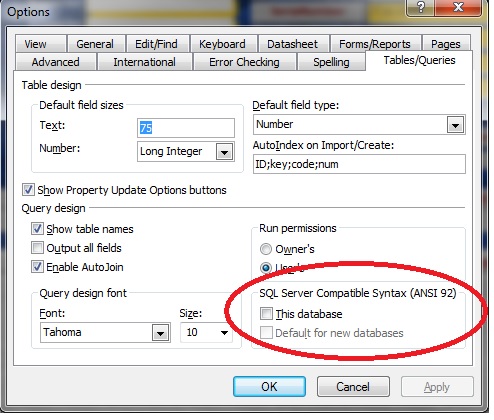

You have to have Name AutoCorrect tracking turned on for Object Dependencies to work. Most devs turn it off in production.

It's a database-wide option, stored in each mdb/accdb.

Once it is on, Access can trace which objects a given object depends on, and which objects depend on it.

Another VERY GOOD reason to keep your SQL in saved queries rather than as text in your VBA code: Object Dependencies cannot see SQL strings built in code.
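The same information can be read from code. An untested sketch, assuming Access 2003 or later (`GetDependencyInfo` does not exist in Access 2000) and that "Track name AutoCorrect info" is already switched on; `ListDependants` is a hypothetical name:

```vba
Option Compare Database
Option Explicit

' Lists the objects that depend on a table, e.g. ListDependants "tblinsDetails".
Public Sub ListDependants(ByVal strTable As String)
    Dim dep As AccessObject

    ' Dependency info is only available while name-map tracking is on.
    If Not Application.GetOption("Track Name AutoCorrect Info") Then
        Debug.Print "Turn on 'Track name AutoCorrect info' first."
        Exit Sub
    End If

    For Each dep In CurrentData.AllTables(strTable).GetDependencyInfo.Dependants
        Debug.Print dep.Type, dep.Name   ' Type is an AcObjectType value
    Next dep
End Sub
```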

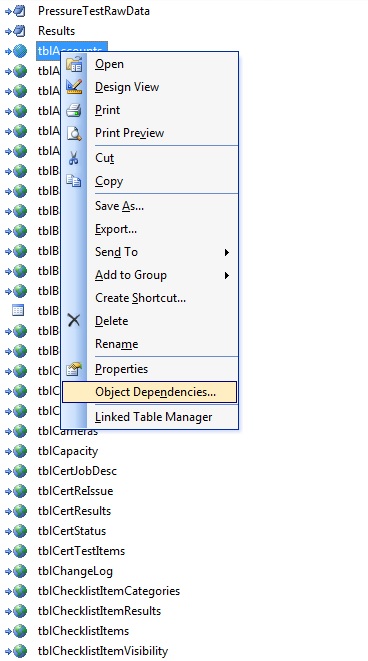

<BTW... Access queries on SQL tables also need the # removed and * changed to %... I'm not sure if there are other changes like those... still learning.>

As you can see in the image, almost everything is linked ODBC SQL Server tables (Access 2003), and no:

SELECT * from tblinsDetails where [Date] = #2-Jan-2015# works just fine in both queries and code.

Now, for a pass-through, which bypasses Access entirely, yes, then I'd need SELECT * from tblinsDetails where [Date] = '2-Jan-2015'. The same goes for wildcards: Access SQL uses * while T-SQL in a pass-through uses %.
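A sketch of a temporary pass-through query built in code, showing the T-SQL forms (a quoted date, % wildcards) and a per-query `ODBCTimeout`. The connection string (server `MyServer`, database `MyDb`) and the `Notes` column are hypothetical placeholders. The quoted 'yyyymmdd' date form is used because SQL Server reads it the same way regardless of language settings. `Connect` is set before `SQL` so Access treats the text as pass-through rather than trying to parse it as Access SQL:

```vba
Option Compare Database
Option Explicit

' Hypothetical connection string: substitute your own server and database.
Private Const CONNECT_STR As String = _
    "ODBC;DRIVER={SQL Server};SERVER=MyServer;DATABASE=MyDb;Trusted_Connection=Yes"

' The SQL goes to SQL Server verbatim, so it must be T-SQL.
Public Function OpenPassThrough() As DAO.Recordset
    Dim db As DAO.Database
    Dim qdf As DAO.QueryDef

    Set db = CurrentDb
    Set qdf = db.CreateQueryDef("")          ' "" = temporary, unsaved QueryDef
    qdf.Connect = CONNECT_STR                ' set before SQL
    qdf.SQL = "SELECT * FROM tblinsDetails " & _
              "WHERE [Date] = '20150102' AND Notes LIKE '%refund%'"
    qdf.ReturnsRecords = True
    qdf.ODBCTimeout = 120                    ' seconds; 0 = no timeout
    Set OpenPassThrough = qdf.OpenRecordset(dbOpenSnapshot)
End Function
```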
