bwhorton

asked on

LeftHand P4300 and VMware vSphere 4 Issue

Maybe some of you guys can weigh in on this:

Environment:

LeftHand P4300 starter array with two storage nodes (lefthand1 and lefthand2) set up with two-way replication. In the LeftHand management console, I can see lefthand1, but lefthand2 only shows as its private IP and is unreachable. Last night, at around 10 CST, it appears a double drive failure occurred on lefthand2. This is a RAID 5 array.
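Why a double drive failure is fatal on a RAID 5 node: each stripe stores one XOR parity block, so the array can rebuild exactly one missing drive and no more. The toy sketch below illustrates that; the helper names `make_stripe` and `rebuild` are hypothetical, and for simplicity parity sits on a fixed last drive, whereas real RAID 5 controllers rotate parity across drives.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR a list of equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return one stripe: the data blocks followed by their XOR parity block.

    Simplification: parity is always on the last drive (real RAID 5 rotates it).
    """
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe):
    """Rebuild a stripe in which failed drives are marked as None.

    XOR of all surviving blocks (data + parity) equals the single missing
    block. With two or more blocks missing there is not enough information.
    """
    missing = [i for i, block in enumerate(stripe) if block is None]
    if not missing:
        return list(stripe)
    if len(missing) > 1:
        raise ValueError(f"{len(missing)} drives lost; RAID 5 can rebuild at most one")
    survivors = [block for block in stripe if block is not None]
    repaired = list(stripe)
    repaired[missing[0]] = xor_blocks(survivors)
    return repaired
```

For example, with `stripe = make_stripe([b"AAAA", b"BBBB", b"CCCC"])`, setting `stripe[1] = None` and calling `rebuild(stripe)` recovers `b"BBBB"`, while knocking out two entries raises `ValueError`. That second case is the situation described for lefthand2, which is why the surviving copy has to come from the replica on lefthand1 rather than from the failed node's own RAID set.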

Three Dell R710s serve as hosts (vmhost1, vmhost2, vmhost3), and a fourth R710 serves as the vCenter server.

vSphere 4 is running on all servers in a cluster connected to the iSCSI array via a private network (192.168.1.x).

The issue is that one of the hosts (vmhost2) can't really see the array. I'm guessing it's because of the problem with lefthand2. I would think that since LH2 is unreachable, the cluster would use the replicated data on LH1 to provide storage to the VMs. However, this does not appear to be the case.

Doing anything in vCenter takes a long time. I've tried to rescan all storage adapters, but it is either locked or taking forever. I don't know whether I should break the two-node storage management group and see if I can access the datastores on the good array, or what. I've got a call in to HP support and a ticket opened, but have yet to hear anything.

Has anyone seen or heard of something similar before? Any insight would be greatly appreciated.

Thanks,

Ben

ASKER

The Lefthand is running software version 8.1

Working to get it escalated with HP...

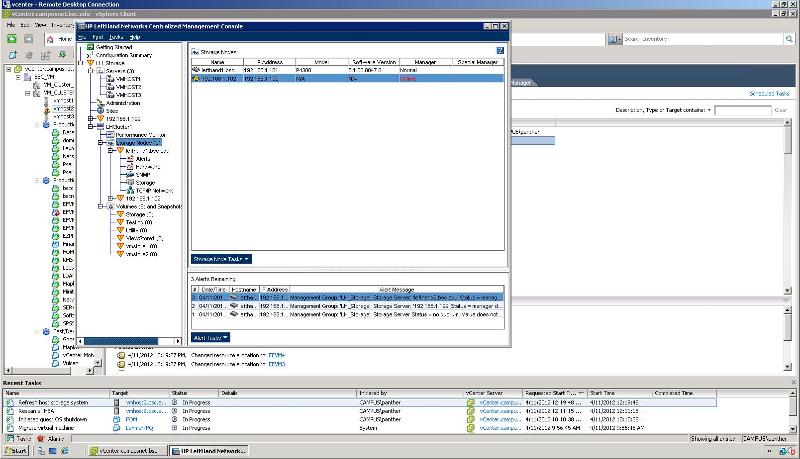

Can you post a screenshot of the HP Console?

When you look at the physical consoles of both LeftHand nodes, what do you see on the screens?

8.1 is ancient; 9.5 is the latest, and 9.5/9.6 have private fixes from HP for issues with VMware vSphere 4.x.

Also, how have you got the nodes bonded? And presumably both NICs are connected to the network?

Are you able to ping the bond IP from the working node using the network tools in the console?

ASKER

I can ping 192.168.1.101 (lefthand1) from vCenter.

I cannot ping 192.168.1.102 (lefthand2) from vCenter.

All private iSCSI traffic is connected through a Brocade FES switch.
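A complementary check to ICMP ping is to see whether each node actually accepts TCP connections on the iSCSI target port, since a node can answer ping but not serve iSCSI (or vice versa if ICMP is filtered). The sketch below assumes the standard iSCSI port 3260 and reuses the two node IPs from this thread; the `port_open` helper is a hypothetical name, and it only tests TCP reachability, not LeftHand cluster or volume health.

```python
import socket

ISCSI_PORT = 3260  # standard iSCSI target port


def port_open(host, port=ISCSI_PORT, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the address and connects; the context
        # manager closes the socket again as soon as we know it worked.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False


if __name__ == "__main__":
    # Node addresses as reported in this thread; adjust for your own setup.
    nodes = {"lefthand1": "192.168.1.101", "lefthand2": "192.168.1.102"}
    for name, ip in nodes.items():
        state = "reachable" if port_open(ip) else "UNREACHABLE"
        print(f"{name} ({ip}) iSCSI port {ISCSI_PORT}: {state}")
```

Run from the vCenter server (or any machine on the 192.168.1.x iSCSI network), this would be expected to mirror the ping results above: lefthand1 reachable, lefthand2 not. TCP connect is used rather than ICMP because sending raw ICMP packets usually requires administrator privileges.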

I will be in front of my LeftHand console in about half an hour.

What do you see on the physical consoles for the LeftHand boxes?

ASKER

They are in a different location. I'm remoted into the vCenter server where the LH CMC is running. Do I need to hook up a KVM to the LeftHand boxes and let you know what I see, or are you referring to the drive lights on the front of the devices? Sorry for my ignorance.

Well, if 2 drives "failed", it's possible that the RAID controller is paused waiting for a response.

I had this recently when it thought 3 drives had failed. The drive-failure issue is resolved in version 9.x. That's not to say yours haven't actually failed, but LeftHand falsely reporting drive failures is a known issue.

On 2 of my nodes I had around 12 disks fail in the space of 2 months. As I replace 1 from each RAID array every 4 months, it's unlikely it's down to "batches". After an upgrade, the issue seems to have slowed down.

ASKER

So are you suggesting a software upgrade as a first step to see if it resolves the issue?

Not yet. Let's get the other node back online first.

You are going to need to get a screen attached to see what's going on.

ASKER

There's a screenshot above, but I'm guessing you need a specific area captured. Just let me know which and I'll do that. Thanks.

Sorry, I mean the physical box: monitor/keyboard/mouse or KVM, depending on how you are set up.

ASKER

Working with an HP engineer on the phone now. The first obvious issue is that LH2's IP settings appear to be gone. Will post more when I learn more. Thanks.

ASKER CERTIFIED SOLUTION

No point split here, but don't worry about it!

ASKER

hancocka, I opened a ticket with the moderators when I saw that the points weren't split. They should be addressing it shortly. Thanks!

That's jolly good of you! Thanks.

ASKER

Self-supported with guidance from HP Support Engineers to diagnose and remedy the problem.

And get it escalated to HP for the private fixes.