httpd connection hangs

Hi

OS: CentOS 6.3 Kernel 2.6.32-279.14.1.el6.x86_64

HTTPD: httpd-2.2.15-15.el6.centos

On one of our webserver we have the problem that sometimes the connection stops. I have created a script that runs curl to the website and tries to open a 3 mb file.

Everytime it starts to download the first ~400kb of data and then it suddenly stops. The connection still stays ESTABLISHED but the transferrate goes down to 0.

This are the pings that were takenwhile running curl

The connection still stays ESTABLISHED even if I quit the script. They stay ESTABLISHED until it gets a timeout. (See very high Send-Q)

I tried to strace the httpd process. It hangs at that point

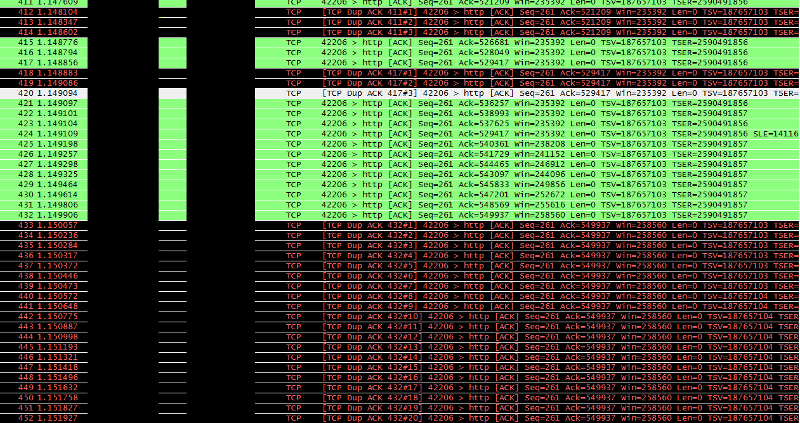

When looking with TCP dump inside Wireshark I get tonns of TCP Dup ACK when the connection drops

Any idea what this could cause?

OS: CentOS 6.3 Kernel 2.6.32-279.14.1.el6.x86_64

HTTPD: httpd-2.2.15-15.el6.centos

On one of our web servers, connections sometimes stall. I wrote a script that uses curl to fetch a 3 MB file from the website.

Every time, it downloads the first ~400 KB of data and then suddenly stops. The connection stays ESTABLISHED, but the transfer rate drops to 0.

These are the pings that were taken while curl was running:

64 bytes from webserver (web-ip): icmp_req=38 ttl=61 time=1.25 ms

64 bytes from webserver (web-ip): icmp_req=39 ttl=61 time=2.32 ms

64 bytes from webserver (web-ip): icmp_req=40 ttl=61 time=2.47 ms

64 bytes from webserver (web-ip): icmp_req=41 ttl=61 time=1.81 ms

64 bytes from webserver (web-ip): icmp_req=42 ttl=61 time=0.843 ms

64 bytes from webserver (web-ip): icmp_req=43 ttl=61 time=1.17 ms

64 bytes from webserver (web-ip): icmp_req=44 ttl=61 time=1.16 ms

64 bytes from webserver (web-ip): icmp_req=45 ttl=61 time=0.921 ms

64 bytes from webserver (web-ip): icmp_req=46 ttl=61 time=1.22 ms

64 bytes from webserver (web-ip): icmp_req=47 ttl=61 time=1.34 ms

64 bytes from webserver (web-ip): icmp_req=48 ttl=61 time=0.999 ms

64 bytes from webserver (web-ip): icmp_req=49 ttl=61 time=1.07 ms

64 bytes from webserver (web-ip): icmp_req=50 ttl=61 time=3.47 ms

64 bytes from webserver (web-ip): icmp_req=51 ttl=61 time=1.60 ms

64 bytes from webserver (web-ip): icmp_req=52 ttl=61 time=2.15 ms

64 bytes from webserver (web-ip): icmp_req=53 ttl=61 time=1.54 ms

--- webserver ping statistics ---

53 packets transmitted, 53 received, 0% packet loss, time 52086ms

rtt min/avg/max/mdev = 0.843/1.482/3.470/0.628 ms

The connection stays ESTABLISHED even after I quit the script, and it remains ESTABLISHED until it times out (note the very high Send-Q):

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 566352 160.85.187.61:80 160.85.85.47:41500 ESTABLISHED

I tried to strace the httpd process. It hangs at this point:

setitimer(ITIMER_PROF, {it_interval={0, 0}, it_value={0, 0}}, NULL) = 0

writev(11, [{"0\r\n\r\n", 5}], 1) = 5

write(9, "160.85.85.47 - - [10/Jan/2013:14"..., 120) = 120

shutdown(11, 1 /* send */) = 0

poll([{fd=11, events=POLLIN}], 1, 2000) = 1 ([{fd=11, revents=POLLIN|POLLHUP}])

read(11, "", 512) = 0

close(11) = 0

read(4, 0x7fffdf69eccf, 1) = -1 EAGAIN (Resource temporarily unavailable)

accept4(3, {sa_family=AF_INET, sin_port=htons(42178), sin_addr=inet_addr("160.85.85.47")}, [16], SOCK_CLOEXEC) = 11

fcntl(11, F_GETFL) = 0x2 (flags O_RDWR)

fcntl(11, F_SETFL, O_RDWR|O_NONBLOCK) = 0

read(11, "POST /bigfile HTTP/1.1\r\nUser-Age"..., 8000) = 260

stat("/var/www/moodledev/bigfile", {st_mode=S_IFREG|0644, st_size=76890786, ...}) = 0

open("/.htaccess", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory)

open("/var/.htaccess", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory)

open("/var/www/moodledev/bigfile", O_RDONLY|O_CLOEXEC) = 12

fcntl(12, F_GETFD) = 0x1 (flags FD_CLOEXEC)

fcntl(12, F_SETFD, FD_CLOEXEC) = 0

read(12, "160.85.104.32 - - [25/Nov/2012:0"..., 4096) = 4096

open("/var/www/moodledev/bigfile", O_RDONLY|O_CLOEXEC) = 13

fcntl(13, F_GETFD) = 0x1 (flags FD_CLOEXEC)

fcntl(13, F_SETFD, FD_CLOEXEC) = 0

setsockopt(11, SOL_TCP, TCP_CORK, [1], 4) = 0

writev(11, [{"HTTP/1.1 200 OK\r\nDate: Thu, 10 J"..., 250}], 1) = 250

sendfile(11, 13, [0], 76890786) = 20270

setsockopt(11, SOL_TCP, TCP_CORK, [0], 4) = 0

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [20270], 76870516) = 34200

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [54470], 76836316) = 46512

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [100982], 76789804) = 62928

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [163910], 76726876) = 69768

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [233678], 76657108) = 84816

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [318494], 76572292) = 114912

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [433406], 76457380) = 153216

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [586622], 76304164) = 218880

poll([{fd=11, events=POLLOUT}], 1, 240000) = 1 ([{fd=11, revents=POLLOUT}])

sendfile(11, 13, [805502], 76085284) = 257184

poll([{fd=11, events=POLLOUT}], 1, 240000

When I look at a tcpdump capture in Wireshark, I see tons of TCP Dup ACKs at the point where the connection stalls.

Any idea what could cause this?
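The two measurements above (the sendfile() offsets in the strace and the Send-Q column in netstat) can be read off by script rather than by eye. What follows is only a minimal sketch in Python, not something used in this thread: the function names and the 100,000-byte threshold are made up for illustration. It reports how far into the file the last completed sendfile() call got, and which ESTABLISHED connections have a large Send-Q, meaning bytes that sit in the kernel send buffer without having been acknowledged by the peer. Note that the netstat line and the strace above belong to different client ports, so their numbers should not be compared directly.

```python
import re

# Matches strace lines such as: sendfile(11, 13, [805502], 76085284) = 257184
SENDFILE_RE = re.compile(r"sendfile\(\d+,\s*\d+,\s*\[(\d+)\],\s*\d+\)\s*=\s*(\d+)")


def bytes_handed_to_kernel(strace_text):
    """Return the file offset reached by the last completed sendfile() call."""
    calls = SENDFILE_RE.findall(strace_text)
    if not calls:
        return 0
    offset, written = calls[-1]
    return int(offset) + int(written)


def stuck_connections(netstat_text, min_send_q=100_000):
    """List ESTABLISHED TCP connections whose Send-Q is at least min_send_q.

    min_send_q is an arbitrary illustrative threshold, not a recommended value.
    """
    stuck = []
    for line in netstat_text.splitlines():
        fields = line.split()
        # Expected columns: Proto Recv-Q Send-Q Local Foreign State
        if len(fields) < 6 or not fields[0].startswith("tcp"):
            continue
        send_q, local, remote, state = fields[2], fields[3], fields[4], fields[5]
        if state == "ESTABLISHED" and send_q.isdigit() and int(send_q) >= min_send_q:
            stuck.append((local, remote, int(send_q)))
    return stuck


if __name__ == "__main__":
    strace_tail = """
    sendfile(11, 13, [586622], 76304164) = 218880
    sendfile(11, 13, [805502], 76085284) = 257184
    """
    netstat_line = "tcp 0 566352 160.85.187.61:80 160.85.85.47:41500 ESTABLISHED"
    print(bytes_handed_to_kernel(strace_tail))
    print(stuck_connections(netstat_line))
```

If the offset stops growing while Send-Q stays high, the server has handed the data to the kernel and is waiting for the client to acknowledge it, which points at the network path rather than at httpd itself.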

In "httpd.conf", change 'Timeout' to a much higher number and reload.

ASKER

It looks better when I set the Timeout to 900. It was set to 240. With 900, it failed after about 17 tries.

How come this change affects the problem? Even 240 s should be sufficient for a 3 MB file.

Only if the 3M file can be downloaded in less than 240s.
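To put a number on that condition, here is a small worked calculation in Python. It takes the reply at face value and treats Timeout as a bound on the whole transfer, which is a simplification: the Apache 2.2 documentation describes Timeout as the wait on individual I/O events (for example, waiting for an acknowledgement when the send buffer is full), which matches the 240000 ms poll() timeout visible in the strace. The function name is made up for illustration.

```python
def min_average_rate(size_bytes, timeout_seconds):
    """Average bytes per second needed to move size_bytes within timeout_seconds."""
    if timeout_seconds <= 0:
        raise ValueError("timeout_seconds must be positive")
    return size_bytes / timeout_seconds


three_mb = 3 * 1024 * 1024  # assuming "3 MB" means 3 MiB
print(f"{min_average_rate(three_mb, 240) / 1024:.1f} KiB/s")  # prints "12.8 KiB/s"
```

Under that assumption, the download would only need to average about 12.8 KiB/s to finish inside 240 seconds.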

ASKER

Easily.

When I use a web browser, the website still fails to load. It actually tries to load over 45 MB.

Where is the 45M coming from?

ASKER

Well, curl tells me that it loaded 45 MB. It is a PHP file that generates a massive amount of information... some crap application.

If that app is generating a lot of data, then that would explain why you need a larger timeout. You may even need to go higher if the app takes a while to generate the download. That's typically when I increase it -- because of waiting on an app to process.

ASKER

Hi

I don't think that the Timeout variable itself will fix the problem. I have set the Timeout to something like 9000000 and it still fails.

I don't see the problem when I am on the same network as the web server, or when I run the script directly on the server itself.

Can you run 'mtr' remotely to find the latency?

ASKER

What options would I need to run? You mean on the client or on the server?

No, from a laptop, desktop or remote server.

mtr --report 1.2.3.4

Make sure that the latency is non-httpd related.

ASKER

Here is the mtr output. The xxx entries are just the hostnames of the hops.

HOST: xxxxx Loss% Snt Last Avg Best Wrst StDev

1.|-- xxxx 0.0% 10 2.4 0.7 0.4 2.4 0.6

2.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0

3.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0

4.|-- xxxxx 0.0% 10 0.9 1.0 0.9 1.1 0.0
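A report like this can also be checked by script. The sketch below is a minimal Python example; the names Hop, parse_mtr_report and destination_loss are invented for illustration. It pulls the loss and average latency per hop out of `mtr --report` text in the column layout shown above. Intermediate hops that show 100% loss while the final hop shows 0% commonly just mean those routers do not answer the probes, so the loss figure of the last hop is usually the one worth looking at.

```python
import re
from typing import NamedTuple


class Hop(NamedTuple):
    number: int
    host: str
    loss_pct: float
    avg_ms: float


# Columns after the host: Loss% Snt Last Avg Best Wrst StDev
HOP_RE = re.compile(
    r"^\s*(\d+)\.\|--\s+(\S+)\s+([\d.]+)%?\s+\d+\s+[\d.]+\s+([\d.]+)"
)


def parse_mtr_report(text):
    """Return one Hop per hop line; the HOST: header line is skipped."""
    hops = []
    for line in text.splitlines():
        m = HOP_RE.match(line)
        if m:
            hops.append(
                Hop(int(m.group(1)), m.group(2), float(m.group(3)), float(m.group(4)))
            )
    return hops


def destination_loss(hops):
    """Loss percentage at the final hop, or None if no hops were parsed."""
    return hops[-1].loss_pct if hops else None
```

For the report above, this gives four hops with 0% loss and a 1.0 ms average at the destination, which is consistent with the earlier ping results.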

Can you put a static file of at least 10 MB on the web server and use curl to pull it down?

ASKER

Hi

That is what I have done with the bigfile, which was 75 MB. I also tried a 3 MB file, and it still fails. Not always, but once every 2-10 attempts.

This is screaming 'Timeout' to me. Can you test with a larger timeout -- say around 1200?

ASKER

I have tested with a Timeout of 90000 and it still fails sometimes.

Is the file being streamed -FROM- PHP or is PHP generating a file and you are later downloading that saved file?

If PHP is actually the backend and is delivering the file to you straight from the script, then it's possible PHP could be the issue. PHP might have some insufficient limits on timeouts or memory, and could be killing the delivery after it hits that limit. Double-check your php.ini file to see if you have error logging enabled and being written to some error log file somewhere. (If you change the php.ini file, you'll have to restart Apache or if it's Fast-CGI, you might have to restart the FPM)

Once you have error logging turned on, trigger the problem and check the PHP error log. (It's worth checking even if PHP isn't streaming the file directly)

Also bear in mind that PHP can be configured to be the processor for ANY request, not just for files ending in .php, which is why I suggested the error log even if you think it's a static file. I've seen some setups before where PHP was the handler for every request inside a specific domain (which is a bad idea, but that's what was happening).
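The php.ini check suggested above can be done by eye, but a small script makes it repeatable. This is only a sketch under stated assumptions: `log_errors` and `error_log` are the standard php.ini directives for this, while the function name and the simplified parsing (';' starts a comment, the last assignment wins) are illustrative and do not cover every php.ini feature. Which php.ini file the server actually loads is not stated in this thread, so the function takes the file contents as text.

```python
TRUE_VALUES = {"1", "on", "true", "yes"}


def php_error_logging(ini_text):
    """Return (log_errors_enabled, error_log_target) from php.ini-style text."""
    settings = {}
    for raw in ini_text.splitlines():
        line = raw.split(";", 1)[0].strip()  # drop ';' comments
        if not line or line.startswith("[") or "=" not in line:
            continue  # skip blanks, section headers and non-assignments
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip("\"'")
    enabled = settings.get("log_errors", "").lower() in TRUE_VALUES
    target = settings.get("error_log") or None
    return enabled, target
```

A result of `(False, None)` would mean errors are not being logged to a file, in which case enabling logging and reproducing the stall is the next step described above.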

ASKER

Hi

It is not PHP, as it also happens with a 3 MB txt file that I try to fetch with wget or curl.

But it is streamed FROM PHP. The application is called "moodle", but it has nothing to do with the problem, for the reasons stated above.

Hi,

That's why I added the caveat of PHP handling any requests, too. Even when you download a text file, PHP can still be handling the request invisibly, depending on your setup. So even though downloading the text file SHOULD have nothing to do with PHP, PHP might still be involved. That's why I suggested checking the PHP error log anyway.

ASKER

Ok.

I will have to check next Wednesday when I am back there. I did not know that PHP could be involved even if you don't request any PHP file.

Thanks

It's definitely possible. It all depends on the web server setup and configuration (including htaccess files).

ASKER

Hi

I can rule out PHP. I have disabled PHP completely and it still shows the same issues. It doesn't seem to be Apache either, as I have disabled Apache and installed nginx, with the same issues.

Got it, so it was #1. The "internet traffic analysis tool" was iptables.

ASKER

Thanks for the help. The solution is documented in my post.