Problem with simple SSIS package

I am trying to copy rows from a SQL query into an existing Excel file.

The errors I am getting are:

[Excel Destination [1381]] Error: SSIS Error Code DTS_E_OLEDBERROR. An OLE DB error has occurred. Error code: 0x80040E21.

[Excel Destination [1381]] Error: Cannot create an OLE DB accessor. Verify that the column metadata is valid.

The T-SQL is made up of several columns. Most are actual columns, and some are aliased empty strings so that there is a like-for-like match with the spreadsheet.

When I look at the column mapping, everything looks perfect, but I just can't get it to work.

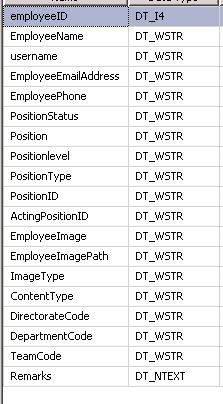

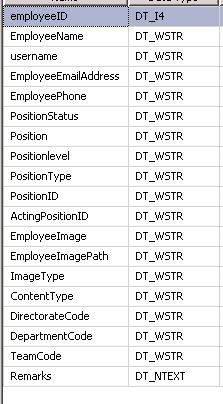

I must be missing something simple. When I look at the metadata for the data flow, I wonder if there is a mismatch in data types (shown in the image), but I can't see a way to change the data types.

ASKER

They do not match:

int > string

ntext > string

Is this what would cause those cryptic errors?

I think you may be right about the transformation in the task. In fact, the more I look at what I need to achieve, the more I see that it might not be a simple data pump task.

For instance, I need to recreate the spreadsheet each time, so I may use a template with the headers already in place.


ASKER

Great, thanks. No doubt I'll be back with more questions.

Good luck!

I often find that I need to use a Data Conversion transformation in the data flow to change the data types so they match.
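An alternative to the Data Conversion transformation is to do the conversion in the source query, so the columns already arrive as Unicode strings (DT_WSTR), which is how the Excel destination typically exposes text columns. The sketch below assumes hypothetical names (`dbo.Orders`, `OrderID`, `Notes`, `CustomerName`, `Spare1`); substitute your own. Note that casting ntext to NVARCHAR(255) silently truncates anything longer than 255 characters, so a long text column may need to stay as a memo (DT_NTEXT) column instead.

```sql
-- Hypothetical table and column names: replace with your own.
SELECT
    CAST(o.OrderID AS NVARCHAR(255)) AS OrderID,      -- int   -> Unicode string
    CAST(o.Notes   AS NVARCHAR(255)) AS Notes,        -- ntext -> Unicode string (truncated at 255 chars)
    o.CustomerName                   AS CustomerName, -- assumed to be NVARCHAR already
    CAST(N'' AS NVARCHAR(255))       AS Spare1        -- empty placeholder kept Unicode to match the sheet
FROM dbo.Orders AS o;
```

After changing the query, the source metadata usually has to be refreshed (for example by reopening the source editor) so the new types flow through to the Excel destination mapping.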