infira


ESXi 5.1 SSD guest write speed very slow

I have installed ESXi 5.1 on my server.

The machine has 4x Vertex 256GB SSD drives, of which one drive is a hot spare (RAID 5).

I installed a Windows 7 virtual machine for testing.

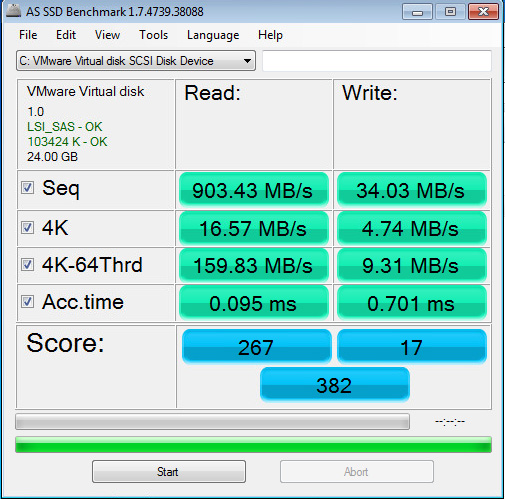

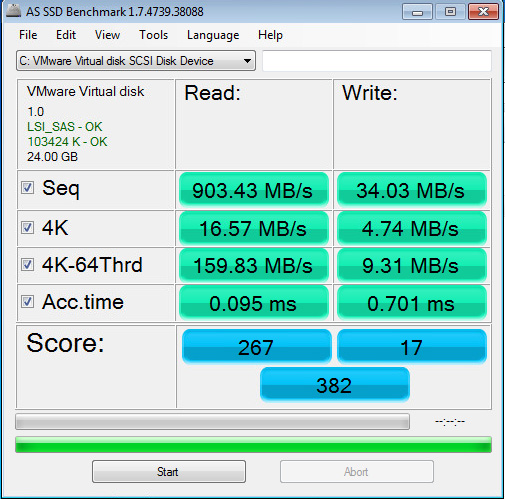

In it, I downloaded AS SSD Benchmark to test disk speed.

It reports 900 MB/s for sequential read, which is OK.

But only 34.03 MB/s for write, which is really slow. I can't understand what the problem is.

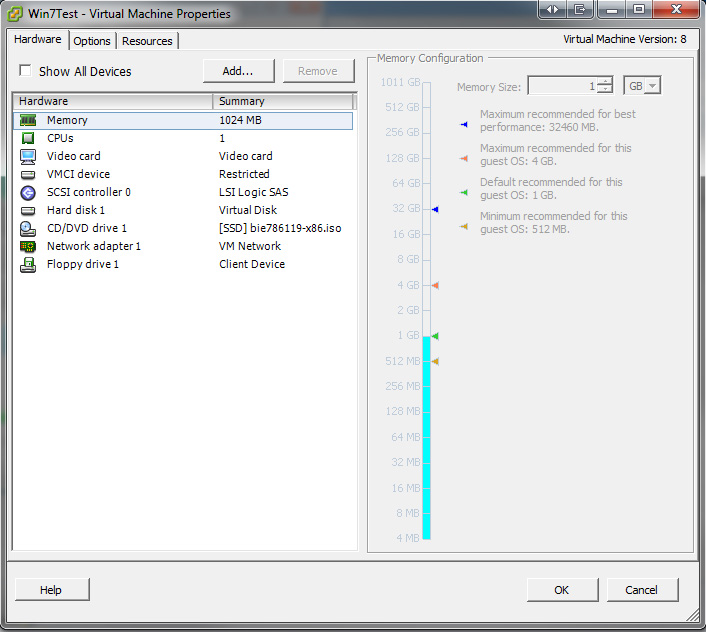

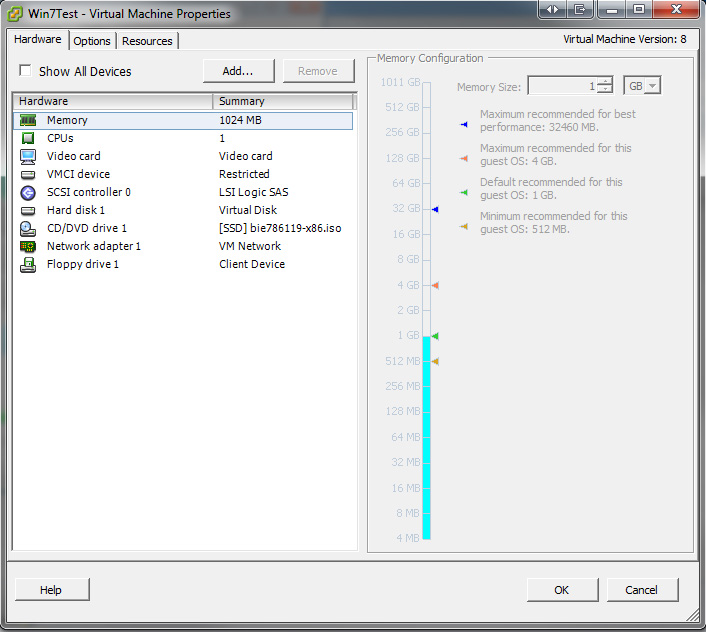

Here are some pictures of the results:

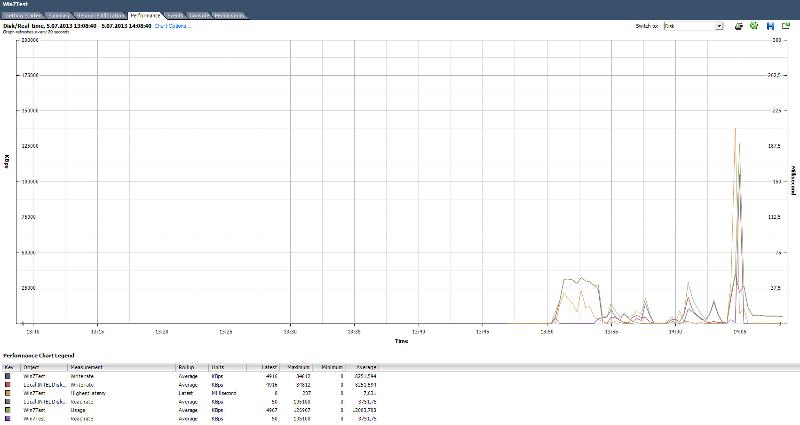

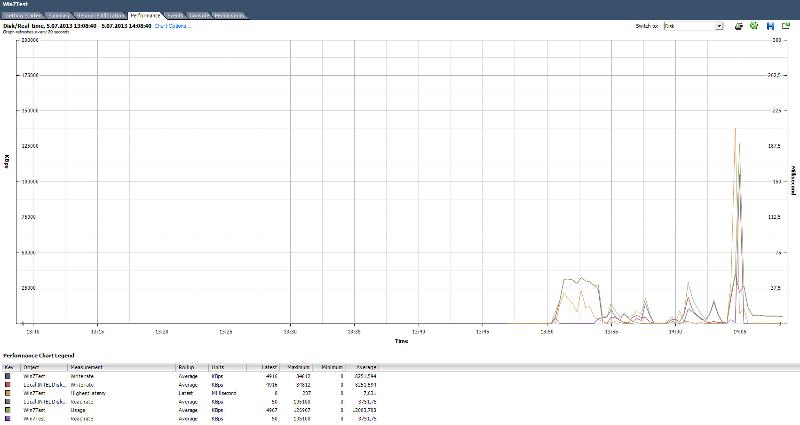

VM settings screenshot; VM performance graph


ASKER

Is there another solution? I can't remove the RAID anymore.

You are using desktop SSDs in a RAID array. Never do that. Those disks aren't built to work properly in a RAID array. You will have to replace them with enterprise-class SSDs (and make sure they are certified to work properly with your RAID controller; best practice is to buy them directly from your server manufacturer, such as Dell or HP), or replace them with enterprise-class standard disks.

ASKER

Before I bought these SSDs, I read that the Vertex 4 works fine in RAID arrays.

For example, I use RAID 0 on my workstations, and I get very high speeds.

It has worked very well; no problems have occurred.

We are a small business with up to 12 users on the server, and enterprise-class SSDs cost too much for us.


SOLUTION


ASKER

@hanccocka, what card are you using?

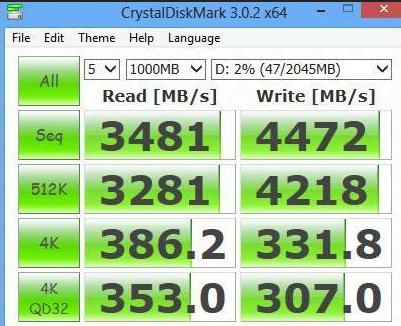

Fusion-IO ioDrives

http://www.fusionio.com/products/iodrive/

The original Mk I, since replaced by the ioDrive2:

http://www.fusionio.com/products/iodrive2/

Why the need for all the speed?

(unless you are doing VDI)


ASKER

I don't need that much speed, but 30 MB/s is pretty slow; even my museum-piece old HDD performed better.

If you are comparing physical with virtual machines, virtual machines will always be slower than a physical computer because their I/O is virtualised; that's the trade-off.

Is it impacting performance for the end users?

ASKER

That I know, but the slowdown shouldn't be that large.

Write speed should be around 400-500 MB/s, not 30.

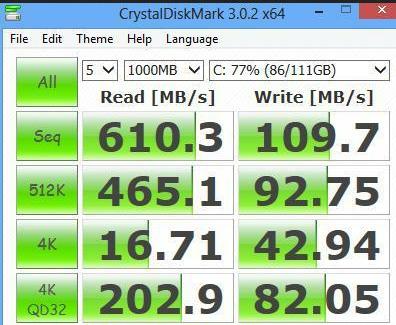

I've never seen write speeds of 400-500 MB/s; see my RAID 10 example.

ASKER

OK, maybe I exaggerate, but I think 30 MB/s is low.

So, in conclusion, you are saying my RAID configuration is wrong?

ASKER CERTIFIED SOLUTION


ASKER

For now I'll accept your solution and try it later; right now I must copy the data back to the old server, then try RAID 10 or RAID 1.
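For anyone weighing the RAID 10 or RAID 1 option, a rough capacity check may help. This is a minimal sketch, assuming all drives are the same 256 GB size and ignoring controller metadata and formatting overhead; the function name `usable_capacity_gb` is hypothetical. With four drives, RAID 10 across all of them yields the same usable space as the current three-drive RAID 5 plus hot spare.

```python
def usable_capacity_gb(drives: int, drive_gb: int, hot_spares: int, level: str) -> int:
    """Rough usable capacity; ignores controller metadata and formatting overhead."""
    members = drives - hot_spares  # hot spares hold no data until a rebuild
    if level == "raid5":
        if members < 3:
            raise ValueError("RAID 5 needs at least 3 member drives")
        return (members - 1) * drive_gb  # one drive's worth of space goes to parity
    if level == "raid10":
        if members < 4 or members % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return members // 2 * drive_gb  # every block is stored twice
    if level == "raid1":
        if members != 2:
            raise ValueError("this sketch treats RAID 1 as a two-drive mirror")
        return drive_gb
    raise ValueError(f"unsupported RAID level: {level}")


print(usable_capacity_gb(4, 256, 1, "raid5"))   # 512: current layout
print(usable_capacity_gb(4, 256, 0, "raid10"))  # 512: all four drives, no spare
print(usable_capacity_gb(2, 256, 0, "raid1"))   # 256: one mirrored pair
```

The trade-off is that RAID 10 over all four drives gives up the dedicated hot spare in exchange for a lower write penalty.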

30 MB/s is not low for a RAID 5 doing almost entirely uncached random I/O. SSDs are purchased for low latency, not throughput.
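The point about uncached random I/O can be made concrete with the common rule-of-thumb write penalties (back-end disk I/Os per small random front-end write). This is a rough sketch, assuming no controller write-back cache (the "uncached" case described here); the per-drive IOPS figure is a hypothetical placeholder, not a measured or published Vertex 4 number, and the helper names are made up for illustration.

```python
# Rule-of-thumb back-end disk I/Os per small random front-end write.
WRITE_PENALTY = {"raid0": 1, "raid1": 2, "raid10": 2, "raid5": 4, "raid6": 6}


def random_write_iops(level: str, members: int, per_drive_iops: float) -> float:
    """Estimate sustained random-write IOPS for an array with no write-back cache.

    `members` counts only drives holding data or parity; a hot spare serves no I/O.
    """
    return members * per_drive_iops / WRITE_PENALTY[level]


def to_mb_per_s(iops: float, io_size_kib: float) -> float:
    """Convert IOPS at a fixed I/O size to MB/s (1 MB = 10**6 bytes)."""
    return iops * io_size_kib * 1024 / 1e6


PER_DRIVE_IOPS = 20_000  # hypothetical placeholder, not a measured figure

for level, members in (("raid5", 3), ("raid10", 4)):
    iops = random_write_iops(level, members, PER_DRIVE_IOPS)
    print(level, iops, round(to_mb_per_s(iops, 4), 1))
# raid5 15000.0 61.4
# raid10 40000.0 163.8
```

Whatever the real per-drive figure, the ratio is what this model shows: a four-drive RAID 10 sustains roughly 2.7 times the random-write IOPS of a three-drive RAID 5.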

ASKER

@dlethe, you claim that 30 MB/s is normal in RAID 5, considering that a single drive should manage ~510 MB/s sequential write?

No, I claim that 30 MB/s is normal in YOUR specific situation (random I/O, non-cached, RAID 5, grossly mismatched I/O size).

How a single drive performs on sequential writes would only be relevant if you were benchmarking sequential writes. You aren't. There is no such thing as a sequential write when it comes to what HDDs/SSDs do behind a RAID 5 controller. Then factor in the real-world likelihood that your I/O is random and uncached, and you'll understand why.
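To illustrate why I/O size and alignment matter behind a RAID 5 controller, here is a simplified model that counts back-end reads and writes for one front-end write. It assumes read-modify-write for partial stripes (real controllers may instead use reconstruct-write or coalesce writes in cache), a hypothetical 64 KiB chunk (stripe unit) size, and two data disks per stripe as in a three-drive RAID 5; the function name is hypothetical.

```python
def raid5_backend_ops(offset: int, length: int, chunk: int, data_disks: int) -> tuple[int, int]:
    """Return (back-end reads, back-end writes) for one front-end write.

    Simplified model: fully covered stripes are written with freshly computed
    parity (no reads); partially covered stripes use read-modify-write.
    """
    if length <= 0:
        return (0, 0)
    stripe = chunk * data_disks  # data bytes per stripe (parity excluded)
    end = offset + length
    reads = writes = 0
    for s in range(offset // stripe, (end - 1) // stripe + 1):
        lo = max(offset, s * stripe)
        hi = min(end, (s + 1) * stripe)
        if hi - lo == stripe:
            writes += data_disks + 1  # every data chunk plus the parity chunk
        else:
            touched = (hi - 1) // chunk - lo // chunk + 1  # data chunks in [lo, hi)
            reads += touched + 1   # old data chunks + old parity
            writes += touched + 1  # new data chunks + new parity
    return (reads, writes)


CHUNK = 64 * 1024  # hypothetical stripe unit size
DATA_DISKS = 2     # three-drive RAID 5: two data chunks + one parity per stripe

print(raid5_backend_ops(0, 4 * 1024, CHUNK, DATA_DISKS))            # (2, 2): small write
print(raid5_backend_ops(0, 128 * 1024, CHUNK, DATA_DISKS))          # (0, 3): aligned full stripe
print(raid5_backend_ops(64 * 1024, 128 * 1024, CHUNK, DATA_DISKS))  # (4, 4): misaligned
```

In this model an aligned full-stripe write costs three back-end writes and no reads, the same 128 KiB shifted by half a stripe costs eight operations, and every small 4 KiB write costs four, which is where the RAID 5 write penalty of 4 comes from.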


RAID 5 suffers a write penalty, although I wouldn't have expected it to be that large.