pzozulka

VMware: Adding a new ESXi host

We have 3 ESXi hosts and vCenter running vSphere 5.0. One of the hosts failed, and we bought a new server to replace it.

Can you recommend any step-by-step guides on adding a new host to an existing environment?

We are also running a NetApp FAS 2240 with NFS volumes.

ASKER

I guess my question was more about configuring the ESXi host. Adding it to vCenter is easy.

You will need to:

1. Add networking.

2. Configure datastores.

Have you never configured an ESXi host?

ASKER

I have managed existing setups before, but I have built one from scratch only once, about a year ago, so I didn't want to miss anything critical.

What specific help do you need with your configuration?

You should be able to replicate the setup from your existing hosts by checking their configuration.

ASKER

From what I'm hearing, my predecessors might have set up the networking incorrectly. I am looking for recommendations and links to resources that show how to set up networking on ESXi CORRECTLY, such as a best-practice guide. In other words: should we use LACP or not, and so on?

ASKER CERTIFIED SOLUTION

SOLUTION

Also, team across cards: do not team ports that sit on the same physical network card.

ASKER

We use HP ProCurve switches. Each NIC Team is connected to two different HP Core switches for redundancy.

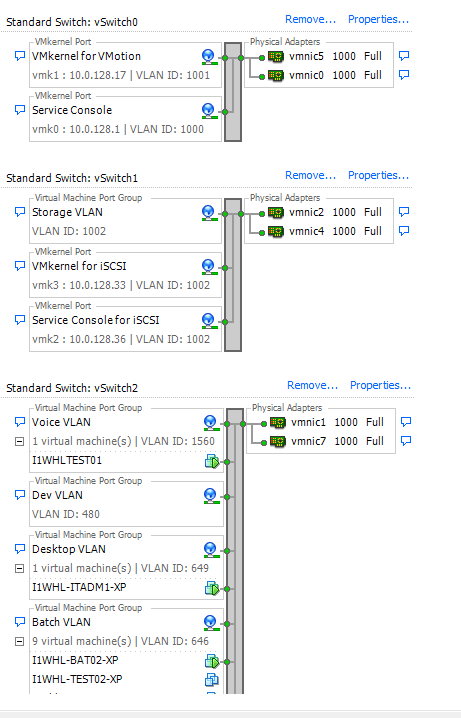

Legend: T = tagged, U = untagged (e.g. T1000 = tagged on VLAN 1000).

vmnic0: Switch 2, VLAN - T1000, T1001

vmnic1: Switch 2, VLAN - U1, T320, T400, T480, T646, T649, T1560

vmnic2: Switch 1, VLAN - T1002

vmnic3: DMZ Switch

vmnic4: Switch 2, VLAN - U1, T1002

vmnic5: Switch 1, VLAN - U1, T1000, T1001

vmnic6: N/A

vmnic7: Switch 1, VLAN - U1, T320, T400, T480, T646, T649, T1560

Everyone believes the networking is configured incorrectly for two reasons:

1) vSwitch0 has vmnic0 and vmnic5. vmnic0 is NOT untagged on VLAN 1 (the default VLAN), while vmnic5 IS untagged on VLAN 1. The same is true of the other vSwitches.

2) As for LACP, trunks, or NIC teaming: the network admins tell me that all ports on the switch side are regular ports, so there are no trunks, LACP, or NIC teams on the switch side. On the vSphere side, however, I see NIC teaming set up on each vSwitch.

For example, vmnic4 has this config on the switch:

    interface C11
       name "ESX1-vmnic3"
       flow-control
       unknown-vlans Disable
       exit
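On the point about NIC teaming without switch-side trunks: with the default load-balancing policy of a standard vSwitch, "Route based on originating virtual port ID", each virtual port (a VM NIC or VMkernel port) is pinned to one active uplink at a time, so the physical switch ports can stay ordinary ports; a static EtherChannel is only required for the "Route based on IP hash" policy. The Python sketch below models that pinning and failover. It assumes the vSwitches use the default policy (the thread does not say which policy is configured), and the modulo used to choose an uplink is a simplification for illustration, not ESXi's internal algorithm.

```python
from __future__ import annotations

# Simplified model of "Route based on originating virtual port ID".
# ASSUMPTION: modulo selection stands in for ESXi's internal logic; the
# real point is that each virtual port uses exactly one uplink at a time.


def pick_uplink(virtual_port_id: int, active_uplinks: list[str]) -> str:
    """Return the single uplink this virtual port is pinned to."""
    if not active_uplinks:
        raise ValueError("no active uplinks on this vSwitch")
    return active_uplinks[virtual_port_id % len(active_uplinks)]


def failover(active_uplinks: list[str], failed: str) -> list[str]:
    """Remove a failed uplink; ports are re-pinned across the survivors."""
    return [u for u in active_uplinks if u != failed]


if __name__ == "__main__":
    uplinks = ["vmnic0", "vmnic5"]  # vSwitch0 as described in the thread
    for port in range(4):  # hypothetical virtual port IDs
        print(f"port {port} -> {pick_uplink(port, uplinks)}")
    survivors = failover(uplinks, "vmnic0")
    print(f"after vmnic0 fails, port 0 -> {pick_uplink(0, survivors)}")
```

Because each port uses only one uplink, a single VM never gets more than one NIC's bandwidth under this policy; that is the trade-off against switch-assisted teaming, in exchange for needing no special switch configuration.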

It looks like a mess.

The port configs for vmnic0 and vmnic1 need to be the same, or the ports need to be in a trunk.

I'm surprised you do not have network issues.

ASKER

There might be some inefficiencies, such as not using trunks or LACP, but as far as actual problems go (setting the inefficiencies aside for a second), I'm not seeing anything critical here.

Where do you see the problem?

Ports in a vSwitch need the same config.

ASKER

vSwitch0: vmnic0 and vmnic5 have nearly the same config.

vSwitch1: vmnic2 and vmnic4 have nearly the same config.

vSwitch2: vmnic1 and vmnic7 have identical configs.
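The "ports in a vSwitch need the same config" point can be checked mechanically. The Python sketch below takes the vmnic/VLAN table posted in this thread and the vSwitch-to-uplink pairs listed above, and reports every VLAN entry that is present on only one uplink of a team. Anything reported only works while traffic happens to use that uplink: for example, a port group with no VLAN ID set relies on untagged VLAN 1, which vmnic0 and vmnic2 do not carry, so it would lose connectivity after failing over to those NICs.

```python
# VLAN membership of each switch port, copied from the thread.
# "U1" = untagged on VLAN 1, "T1000" = tagged on VLAN 1000.
VMNIC_VLANS = {
    "vmnic0": {"T1000", "T1001"},
    "vmnic1": {"U1", "T320", "T400", "T480", "T646", "T649", "T1560"},
    "vmnic2": {"T1002"},
    "vmnic4": {"U1", "T1002"},
    "vmnic5": {"U1", "T1000", "T1001"},
    "vmnic7": {"U1", "T320", "T400", "T480", "T646", "T649", "T1560"},
}

# Uplinks of each vSwitch, as stated in the thread.
VSWITCH_UPLINKS = {
    "vSwitch0": ("vmnic0", "vmnic5"),
    "vSwitch1": ("vmnic2", "vmnic4"),
    "vSwitch2": ("vmnic1", "vmnic7"),
}


def vlan_mismatches(vmnic_vlans, vswitch_uplinks):
    """Return {vSwitch: sorted VLAN entries not carried by every uplink}."""
    result = {}
    for vswitch, uplinks in vswitch_uplinks.items():
        sets = [vmnic_vlans[nic] for nic in uplinks]
        on_any = set().union(*sets)         # carried by at least one uplink
        on_all = set.intersection(*sets)    # carried by every uplink
        result[vswitch] = sorted(on_any - on_all)
    return result


if __name__ == "__main__":
    for vswitch, diff in vlan_mismatches(VMNIC_VLANS, VSWITCH_UPLINKS).items():
        print(vswitch, "OK" if not diff else f"mismatch: {diff}")
```

With the data as posted, vSwitch0 and vSwitch1 differ only in the untagged VLAN 1 membership and vSwitch2 is consistent, which matches the "nearly the same" and "identical" summary above.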

SOLUTION

ASKER

We used to have our NetApp connected via iSCSI, but that's no longer the case. It now connects to NFS volumes via NFS. I guess the previous admin didn't clean up the network config.

Do you have a similar EE article for NFS network config?

NFS configuration is easier.

Follow my video:

http://andysworld.org.uk/2011/06/21/new-hd-video-adding-an-nfs-datastore-to-a-vmware-vsphere-esxesxi-4x-host-server/

This is the same for all versions of ESX/ESXi.

Create the VMkernel port group, and then run the wizard to connect to the NFS export on the NetApp.
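The same two steps can also be done from the ESXi Shell with esxcli instead of the vSphere Client wizard. The Python sketch below only builds the command lines (it does not run them) for an ESXi 5.x host: add a port group to a vSwitch, optionally tag it with a VLAN, add a VMkernel interface with a static IP, then mount the NFS export as a datastore. The vSwitch name, port group name, vmk number, IP address, NetApp hostname, export path, and datastore name are all hypothetical; check the options against `esxcli <namespace> --help` on your build before running anything.

```python
import shlex


def nfs_setup_commands(vswitch, portgroup, vmk, ip, netmask,
                       nfs_host, share, volume_name, vlan_id=None):
    """Return esxcli argument lists for an NFS VMkernel port plus datastore."""
    pg = ["esxcli", "network", "vswitch", "standard", "portgroup"]
    cmds = [pg + ["add", f"--portgroup-name={portgroup}",
                  f"--vswitch-name={vswitch}"]]
    if vlan_id is not None:
        # Tag the port group only if the uplinks carry this VLAN tagged.
        cmds.append(pg + ["set", f"--portgroup-name={portgroup}",
                          f"--vlan-id={vlan_id}"])
    cmds += [
        # VMkernel interface on the new port group, with a static address.
        ["esxcli", "network", "ip", "interface", "add",
         f"--interface-name={vmk}", f"--portgroup-name={portgroup}"],
        ["esxcli", "network", "ip", "interface", "ipv4", "set",
         f"--interface-name={vmk}", f"--ipv4={ip}",
         f"--netmask={netmask}", "--type=static"],
        # Mount the NetApp NFS export as a datastore.
        ["esxcli", "storage", "nfs", "add", f"--host={nfs_host}",
         f"--share={share}", f"--volume-name={volume_name}"],
    ]
    return cmds


if __name__ == "__main__":
    # Every value below is HYPOTHETICAL; substitute your own.
    commands = nfs_setup_commands(
        vswitch="vSwitch1", portgroup="NFS", vmk="vmk1",
        ip="192.0.2.10", netmask="255.255.255.0",
        nfs_host="netapp01.example.local", share="/vol/vmware_nfs",
        volume_name="nfs_datastore1")
    for cmd in commands:
        print(shlex.join(cmd))
```

Building the commands as lists keeps the values separate from the command structure, so they can be reviewed (or quoted safely with `shlex.join`) before you paste them into the host's shell.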

2. Right-click your datacenter or cluster, click Add Host, enter the host's FQDN, and follow the wizard, using the root username and password.

You will also want to ensure that you have the same port group names and vSwitches as on the existing hosts.

You will also want to connect the ESXi host to your NetApp NAS NFS export.
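To check the "same port group names and vSwitches" point before moving VMs onto the new host, you can diff the two hosts' network inventories. The Python sketch below assumes you have already collected each host's vSwitch-to-port-group names (for example from the vSphere Client, or with `esxcli network vswitch standard portgroup list` on each host); the inventories in the example are hypothetical.

```python
def missing_on_new_host(existing, new):
    """Return {vSwitch: sorted port groups present on the existing host
    but absent from the same vSwitch on the new host}."""
    missing = {}
    for vswitch, portgroups in existing.items():
        gap = set(portgroups) - set(new.get(vswitch, ()))
        if gap:
            missing[vswitch] = sorted(gap)
    return missing


if __name__ == "__main__":
    existing_host = {  # HYPOTHETICAL inventory of an existing host
        "vSwitch0": ["Management Network", "VM Network"],
        "vSwitch1": ["NFS"],
    }
    new_host = {  # HYPOTHETICAL inventory of the replacement host
        "vSwitch0": ["Management Network"],
    }
    print(missing_on_new_host(existing_host, new_host))
```

An empty result means every port group on the existing host also exists, under the same vSwitch, on the new one; names are compared exactly as typed.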