spen_lang

asked on

SSIS Lookup

Hi,

I need to create a package that imports data from an Excel file into a MSSQL database. The package needs to lookup codes from the database using descriptions in the Excel file, if the lookup does not match then I would like to email an end user about the non-matching lookup and stop the database import.

So far I have added a lookup and set it to redirect error rows, which works fine. But I am unable to stop the database import.

Is this possible?

Thanks, Greg

I need to create a package that imports data from an Excel file into a MSSQL database. The package needs to lookup codes from the database using descriptions in the Excel file, if the lookup does not match then I would like to email an end user about the non-matching lookup and stop the database import.

So far I have added a lookup and set it to redirect error rows, which works fine. But I am unable to stop the database import.

Is this possible?

Thanks, Greg

ASKER

The lookup is connected to an OLE DB Destination which does not seem to give me this option... Unless I am looking in the wrong place (mind block)

>The lookup is connected to an OLE DB Destination

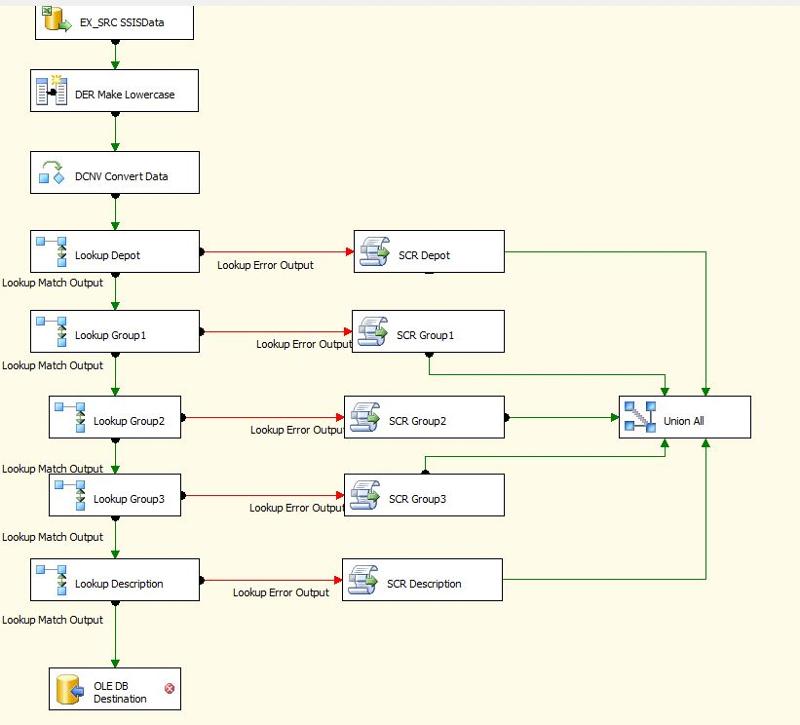

Please send me a screen shot of this.

Normally this is done with a separate Lookup Transform between source and destination, which you can then branch off of for errors / missing rows.

Please send me a screen shot of this.

Normally this is done with a separate Lookup Transform between source and destination, which you can then branch off of for errors / missing rows.

So create a variable somewhere, and create five Row Count transforms between the script task and the Union All (you could even set the value in the script tasks since it's there, and avoid the Row Count transforms).

>But I am unable to stop the database import.

Ok I get it. Slightly different dang deal. Add a Conditional Split between the final lookup and the transform, double-click on it, and create two paths: One if @the_variable == 0 that leads to the destination, and one if @the_variable != 0 that leads to the email or whatever you want to do other than insert.

Then in the control flow, same instructions as above.

>But I am unable to stop the database import.

Ok I get it. Slightly different dang deal. Add a Conditional Split between the final lookup and the transform, double-click on it, and create two paths: One if @the_variable == 0 that leads to the destination, and one if @the_variable != 0 that leads to the email or whatever you want to do other than insert.

Then in the control flow, same instructions as above.

"But I am unable to stop the database import."

Just wondering: why would you want this? Is it an "all or nothing" matter? After all, it's only the "good" records that will make it through to the destination...

If you really really (really) need that then you'll need to work with a staging table. In your Data Flow, records get inserted into the staging table. You'll also need to keep track of the number of records coming out of your source, can be done as explained by Jim (RowCount). The RowCount component should be located directly under the source because at that moment the flow still contains all records.

The next step in your control flow compares the number of records in the staging table with the RowCount variable: when equal then records should get written to final destination table. This can all be done through an Execute SQL task...

Just wondering: why would you want this? Is it an "all or nothing" matter? After all, it's only the "good" records that will make it through to the destination...

If you really really (really) need that then you'll need to work with a staging table. In your Data Flow, records get inserted into the staging table. You'll also need to keep track of the number of records coming out of your source, can be done as explained by Jim (RowCount). The RowCount component should be located directly under the source because at that moment the flow still contains all records.

The next step in your control flow compares the number of records in the staging table with the RowCount variable: when equal then records should get written to final destination table. This can all be done through an Execute SQL task...

Looks like Jim posted his reply while I was writing mine :)

Jim: "One if @the_variable == 0 that leads to the destination, and one if @the_variable != 0 that leads to the email"

Are you sure that will have the desired outcome? As SSIS uses batches, let's say there's an issue in batch number 3, wouldn't the data of the first two batches be already written to the destination as the counter only changes from zero in batch 3?

Jim: "One if @the_variable == 0 that leads to the destination, and one if @the_variable != 0 that leads to the email"

Are you sure that will have the desired outcome? As SSIS uses batches, let's say there's an issue in batch number 3, wouldn't the data of the first two batches be already written to the destination as the counter only changes from zero in batch 3?

ASKER CERTIFIED SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

I have to use a staging table for this project in any case so this solution sounds spot on thanks.

On another note, what is the easiest way to get all data from a "union all" into an email as an attachment? Will I have to export it to a csv file and then pick it up again?

Thanks

On another note, what is the easiest way to get all data from a "union all" into an email as an attachment? Will I have to export it to a csv file and then pick it up again?

Thanks

"Maybe this should be two separate data pumps, one for the validation and one for the conditional execution, unless you see a way to pull this off in the same data flow. Is there a batch setting that can be edited?"

Not that I'm aware of. I can think of a control flow like this

1. start transaction

2. data flow (fail in case of problem)

3. commit transaction if all okay

But I'm not really in favor of this possibility because it requires too much tweaking. Such as setting the RetainSameConnection property of the connection manager to true (which is not the default)...

And the built-in transaction support, well, I stay away from that...

Not that I'm aware of. I can think of a control flow like this

1. start transaction

2. data flow (fail in case of problem)

3. commit transaction if all okay

But I'm not really in favor of this possibility because it requires too much tweaking. Such as setting the RetainSameConnection property of the connection manager to true (which is not the default)...

And the built-in transaction support, well, I stay away from that...

>what is the easiest way to get all data from a "union all" into an email as an attachment?

Hmm. Knew I should have written that article.

That would be a Send Mail task (I'd use Task Factory Advanced Email and SMS Task if that's available to you), where the body of the variable is popualted with HTML-based table object, similar to what this answer by SQL expert ScottPletcher does.

There is a limitation of 4,000 characters in an email, and this is a fair amount of work to pull off, so as a quick and dirty it would be a better idea to output these rows into a text file, then send your email with that text file as an attachment.

Hmm. Knew I should have written that article.

That would be a Send Mail task (I'd use Task Factory Advanced Email and SMS Task if that's available to you), where the body of the variable is popualted with HTML-based table object, similar to what this answer by SQL expert ScottPletcher does.

There is a limitation of 4,000 characters in an email, and this is a fair amount of work to pull off, so as a quick and dirty it would be a better idea to output these rows into a text file, then send your email with that text file as an attachment.

ASKER

Thanks for all your help, all very good points which I have made a start on and will continue with tomorrow. Will share the points out tomorrow...

>So far I have added a lookup and set it to redirect error rows

Add a RowCount transform between this and the next step, and assign the count to a variable.

Then in your control flow, change the arrow that goes from this data pump to the next task to be conditional WHERE success AND @your_variable == 0.

If it's zero execution will continue, if it's greater than zero it will not proceed to the next step, i.e. it'll stop there.