Server 2012 R2 Clustering

We'd like to take advantage of the clustering features in Windows Server 2012 R2 to provide a more secure environment for our virtual servers.

We have a pair of servers with Failover Clustering configured hosting our Hyper-V VMs and a third providing storage for the VHDx files. So if one of our Hyper-V servers goes down the other provides failover hosting. But if our storage server goes down all the VMs go down with it.

What we'd like to do is use the Failover Cluster servers to not only host the VMs but also store the VHDx files. So far all our research says to use a SAN (expensive) or a single storage location (no redundancy). Is it possible to use a File Server / Scale-Out File Server cluster to have data stored synchronously on multiple servers and still be accessible to a Hyper-V cluster?

In this type of an environment the storage must be shared in order for it to be accessible to both servers independently. A SAN is really the realistic way to go for this.

I forgot to give you the exact model of what I'd recommend.

Vess R2600iS

Runs around $4k for the unit, and to fill it up with 16 disks would be about $1200-$1400. If you only need half that space then halve the number of disks and you have an Enterprise SAN for:

8 disks (half full) = $4700

16 disks (full) = $5400
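
The two totals above follow from simple arithmetic. A minimal sketch, assuming the chassis is a flat $4000 and the full 16-disk set costs the upper figure of $1400 (both are the rough numbers from this post, not a vendor quote; the function name is made up for illustration):

```python
# Rough figures taken from the post above; real quotes will vary.
CHASSIS_USD = 4000        # "around $4k for the unit"
FULL_DISK_SET_USD = 1400  # upper end of "$1200-$1400" for 16 disks
BAYS = 16


def estimated_total(disks: int) -> int:
    """Chassis price plus a proportional share of the 16-disk price."""
    if not 0 <= disks <= BAYS:
        raise ValueError(f"disks must be between 0 and {BAYS}")
    return CHASSIS_USD + FULL_DISK_SET_USD * disks // BAYS


if __name__ == "__main__":
    for disks in (8, 16):
        print(f"{disks} disks: ~${estimated_total(disks)}")
```

With these assumptions the half-full unit comes to $4700 and the full unit to $5400, matching the figures quoted.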

Don't forget you'll need interface cards for both servers and cable.

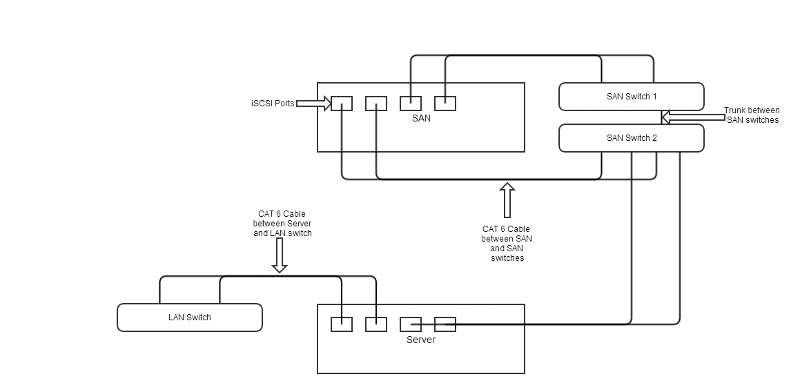

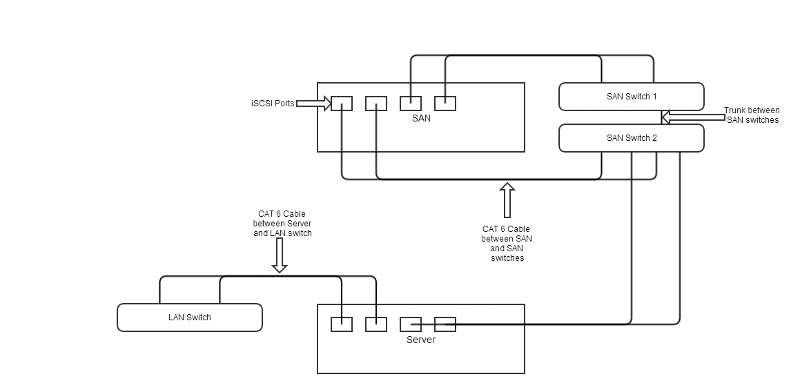

Good catch, I almost forgot that. Our typical setup was 4 iSCSI ports from the back of the VessRAID going into two switches that were trunked together (basically connected on port 24 of each switch with a short CAT 6 cable): 2 into one switch, then 2 into the other. After this we'd put a 4-port NIC into the server (usually we'd just order them with more ports). Two of those ports go into the LAN switch, and the remaining two go into the SAN switches: one into switch A and one into switch B.

If that doesn't make sense I've got a good drawing I could pull out. Let me know.

This really is the only way to go though.
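
The point of that wiring is that losing either SAN switch still leaves a path between the server and the storage. A small sketch that models the cabling as a graph and checks this; the device and port names are hypothetical, only the wiring pattern comes from the description above:

```python
from collections import deque

# Hypothetical names; each tuple is one cable, written as "device:port" or "device".
LINKS = [
    ("san:iscsi1", "switch_a"),
    ("san:iscsi2", "switch_a"),
    ("san:iscsi3", "switch_b"),
    ("san:iscsi4", "switch_b"),
    ("server:nic3", "switch_a"),
    ("server:nic4", "switch_b"),
    ("switch_a", "switch_b"),  # the short trunk cable between the switches
]


def device(endpoint: str) -> str:
    """Strip the optional ':port' suffix, leaving the device name."""
    return endpoint.split(":")[0]


def connected(links, start, goal, failed=frozenset()):
    """Breadth-first search between devices, ignoring failed devices."""
    graph = {}
    for a, b in links:
        da, db = device(a), device(b)
        if da in failed or db in failed:
            continue
        graph.setdefault(da, set()).add(db)
        graph.setdefault(db, set()).add(da)
    seen, queue = {start}, deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            return True
        for neighbour in graph.get(current, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return False


if __name__ == "__main__":
    for down in ("switch_a", "switch_b"):
        ok = connected(LINKS, "server", "san", failed={down})
        print(f"{down} down -> server can reach SAN: {ok}")
```

This only checks switch-level failures. It says nothing about the SAN chassis itself, which is still a single device in this layout.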

Our typical setup was 4 iSCSI ports from the back of the VessRAID going into two switches that were trunked

In case you're not familiar with them, these iSCSI ports are basically just 4 Ethernet ports.

Let me also mention that the iSCSI ports should be on a different subnet:

Example

iSCSI subnet = 10.0.0.0/24

LAN subnet = 10.0.1.0/24

So you'd have two ports on your server's NIC that had IPs of 10.0.0.1 & 10.0.0.2 which would connect to the SAN switches

And...

You'd have two ports on your server's NIC that had IPs of 10.0.1.1 & 10.0.1.2 which would connect to the LAN switch

From there you just use the iSCSI Initiator in Windows to set up the rest. Let me know if you need help on that. See the picture below for reference.
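
Before assigning addresses, the plan above can be sanity-checked with Python's standard `ipaddress` module. This is only a sketch: the port labels and the `check_plan` helper are made up for illustration, and the addresses are the example values from this post.

```python
import ipaddress

ISCSI_NET = ipaddress.ip_network("10.0.0.0/24")
LAN_NET = ipaddress.ip_network("10.0.1.0/24")

# Hypothetical port labels mapped to (address, subnet it should live in).
SERVER_PORTS = {
    "iscsi-a": ("10.0.0.1", ISCSI_NET),
    "iscsi-b": ("10.0.0.2", ISCSI_NET),
    "lan-1": ("10.0.1.1", LAN_NET),
    "lan-2": ("10.0.1.2", LAN_NET),
}


def check_plan(ports, networks):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    nets = list(networks)
    # The storage and LAN subnets must not overlap.
    for i, first in enumerate(nets):
        for second in nets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")
    # Every port address must be a usable host address in its subnet.
    for name, (addr, net) in ports.items():
        ip = ipaddress.ip_address(addr)
        if ip not in net:
            problems.append(f"{name}: {ip} is not in {net}")
        elif ip in (net.network_address, net.broadcast_address):
            problems.append(f"{name}: {ip} is not a usable host address")
    return problems


if __name__ == "__main__":
    issues = check_plan(SERVER_PORTS, [ISCSI_NET, LAN_NET])
    print("plan OK" if not issues else "\n".join(issues))
```

Keeping the two subnets disjoint is what lets Windows route storage traffic only over the ports cabled to the SAN switches.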

In order to create Hyper-V virtual machines in a cluster, you have to have shared storage attached to the Hyper-V hosts as a LUN, no matter whether you use local disk, iSCSI, or FC SAN.

So simply creating a file server cluster will not help. You would also need additional steps, such as converting the file servers into storage servers (iSCSI probably, and I don't know if this is even a supported configuration) and then mapping their drives as a LUN on the Hyper-V servers.

After that you need to add that file server LUN as a CSV on the Hyper-V cluster.

Instead of all this trial and error, SAN storage is the best option. It will provide reliable, redundant, high-speed, and configurable disk space ideal for running virtual machines, and as far as I know most organizations go for SAN storage (small or large, depending on their requirements and budget).

Another option, if SAN storage is out of scope for you: use server-class HDDs or direct-attached storage (DAS) of at least 10K rpm (15K rpm is recommended) with a 2012 or 2012 R2 Hyper-V server, and then set up Hyper-V Replica for the critical VMs. Replica does not require clustering and can be established between standalone Hyper-V servers.

Mahesh.

ASKER

Thank you for all the responses. It's looking like the consensus is that a SAN is the way to go.

My two concerns with this are :

1. Expense (we have a lot of available storage space on the servers, buying more storage space seems like a waste).

2. A SAN is still a single point of failure. If something happens to the SAN all the data is unavailable until the SAN is back online again.

We have two offices across the street from each other that are directly connected by fiber cable. Ideally we'd like to have at least one server from the Hyper-V cluster in each building and also have the data stored on a server in each building. Backups and replication are our current solution to this, but at best there is a 30-second delay between the live virtual machine and its replica. We would like completely synchronized data storage that is accessible by the Hyper-V cluster.

1. Expense (we have a lot of available storage space on the servers, buying more storage space seems like a waste).

Short term maybe, long term no. Long term the SAN will pay for itself and then some.

2. A SAN is still a single point of failure. If something happens to the SAN all the data is unavailable until the SAN is back online again.

Yes and no. Most SANs today have redundancy built in everywhere; dual power supplies, dual controller cards, RAID levels above the traditional 1-10 levels that allow for more disks to fail in arrays, etc. SANs may seem like a single point of failure, but in reality it is hard to have one go out aside from someone pouring water on it or a tornado ripping your building to shreds. Please really do consider the model I suggested above. I refuse to believe that whoever you report/answer to could say no to the model I suggested full of drives if you make the right case. Heck, use this email as a guide/reference when talking to them.

Promise Vess R2600iS

Some more research has shown StarWind makes a software solution that will do the job.

StarWind makes a good product but it won't achieve the performance, reliability, or expandability that a SAN will.

Obviously in the end it's your choice, but the consensus is clear, you need a SAN.

ASKER

I'll agree, the performance of a SAN will most likely be better than any software solution we could find. Dedicated hardware almost always wins that race.

Reliability will entirely depend on the servers we are using the software on.

Expandability will actually be higher. Servers are as expandable as SANs, but this software is also transferable, so when the server hits its EOL or maxes out on hardware upgrades we could bring in a new server and transfer the software to it.

That being said, I'm not really looking to debate which is better. We already know a SAN is an option, but we are looking for a software solution: is it possible, and what is available?

In that case, I haven't worked with this vendor specifically, but have heard good things about them:

http://www.falconstor.com/

Also this is something to think about seriously:

http://www.vmware.com/products/virtual-san

ASKER

Solutions provided by others failed to meet all the requirements of the original question. The closest solution that met the requirements was my own.

1) Redundancy of a high end RAID configuration

2) Tons of space

3) Redundant power

4) Way more flexibility and performance

We used these for our small-medium business clients and they are great.

http://www.promise.com/storage/raid_series.aspx?region=en-US&m=1105&sub_m=sub_m_3&rsn1=2&rsn3=61

If you fill this up with disks (2TB 7200 RPM) it will probably be around $6-7k. And this will give you 32 TB of space, which is more than enough for most organizations.
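
Note that 32 TB is the raw figure (16 x 2 TB); usable space depends on the RAID level chosen. A rough sketch using the textbook capacity formulas, ignoring hot spares, formatting overhead, and TB versus TiB. Which levels a given unit actually supports is an assumption to check against the vendor documentation, and the function name is illustrative only:

```python
def usable_tb(disks: int, disk_tb: float, level: str) -> float:
    """Textbook usable capacity for a single array of identical disks."""
    if level == "raid0":
        data_disks = disks       # striping only, no redundancy
    elif level == "raid5":
        data_disks = disks - 1   # one disk's worth of parity
    elif level == "raid6":
        data_disks = disks - 2   # two disks' worth of parity
    elif level == "raid10":
        if disks % 2:
            raise ValueError("RAID 10 needs an even number of disks")
        data_disks = disks // 2  # every disk is mirrored
    else:
        raise ValueError(f"unknown RAID level: {level}")
    if data_disks < 1:
        raise ValueError("not enough disks for this RAID level")
    return data_disks * disk_tb


if __name__ == "__main__":
    for level in ("raid0", "raid5", "raid6", "raid10"):
        print(f"{level}: {usable_tb(16, 2, level)} TB usable")
```

So a full unit in RAID 6, for example, would offer roughly 28 TB rather than 32 TB under these assumptions.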

I have put dozens of these in and I highly recommend SAN setups for VMware clusters and Hyper-V failover clusters.