What should end-users do about the Heartbleed Vulnerability?

Change passwords? Is there an extensive list of sites that were or are vulnerable? Install add-ons that can manually check the status of sites? What can end-users do?


@skullnobrains

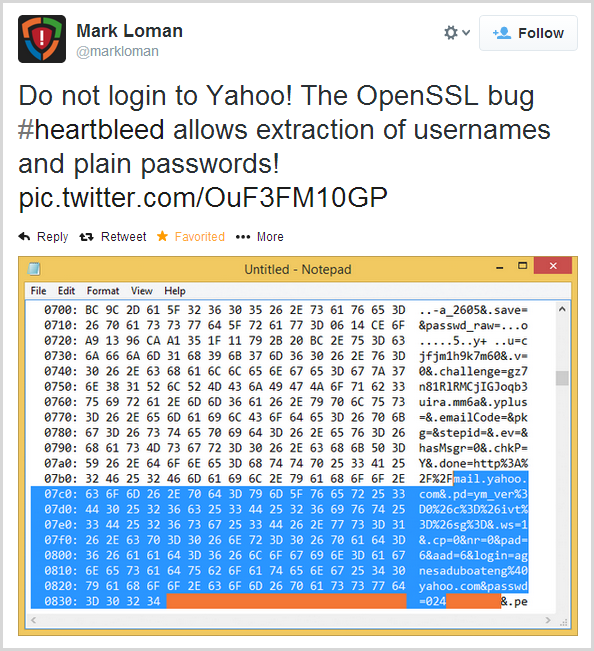

i'm wondering why mostly vendors in the security field make such a fuss about heartbleed ? free advertisement ?

Because it is the worst security breach we have EVER had on the internet - and that's saying something.

many security experts have been waiting until the necessity to change server certificates for those who were impacted was all over the internet before including it in their advice, and some still don't seem to be aware of that.

Indeed so. However, that has little to do with end users, and more to do with the hope that on at least some platforms the private keys were not affected. Despite those hopes, the worst-case scenario was in fact true - nginx, lighttpd and apache mod_ssl are all vulnerable to private key and other-session disclosure due to the bug. If you are a server owner, and use SSL on one of those three platforms, you need to go check your release of OpenSSL - if it is the problematic version, you need to upgrade, THEN revoke and re-create your private key and certificate, and change every password on the machine, including local shell passwords and those of your users, DB access passwords to other machines, and so forth.
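For the "go check your release of OpenSSL" step, here is a minimal Python sketch. It assumes the upstream affected range of 1.0.1 through 1.0.1f (fixed in 1.0.1g) and ignores the 1.0.2 pre-release betas. The function name is hypothetical, and because distribution packages often backport a fix without changing the version letter, a match is only a prompt to read the vendor advisory, not proof either way.

```python
import re
import ssl


def looks_heartbleed_affected(version_string):
    """Return True if the upstream version is 1.0.1 through 1.0.1f.

    Hypothetical helper: it only reads the version string, so a distro
    build with a backported fix will still be reported as affected.
    """
    match = re.search(r"OpenSSL (\d+)\.(\d+)\.(\d+)([a-z]*)", version_string)
    if match is None:
        return False
    major, minor, patch = (int(match.group(i)) for i in (1, 2, 3))
    letter = match.group(4)
    if (major, minor, patch) != (1, 0, 1):
        return False
    # "" is the initial 1.0.1 release; "g" is the first fixed release.
    return letter in ("", "a", "b", "c", "d", "e", "f")


if __name__ == "__main__":
    # ssl.OPENSSL_VERSION names the library this Python build is linked against.
    print(ssl.OPENSSL_VERSION, looks_heartbleed_affected(ssl.OPENSSL_VERSION))
```

This checks only the library Python itself uses; a web server on the same machine may be linked against a different copy.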

- end-users of software that make use of "perfect forward secrecy" have less risk to have experienced password hijacking. anyway

Incorrect. The bug exposes up to 64K of memory from the target machine per request; most likely, that will be other web sessions close to the time of the exploit, including any form variables (such as login forms). The attack can be repeated several times per second so determined harvesting should expose many of the logins that occurred, even if those logins were not via the SSL interface at all.

basically your little sister is much more likely to have spotted you typing your password than a malicious attacker who has managed to sniff an internet link and performed a crazily complicated attack just to steal your mailbox password...

Heartbleed isn't about sniffing. It is about disclosing the contents of process memory (including the data sent and received by other users) without any further tools needed, or without any trace in the logs. PFS does not help, as the data revealed is not encrypted at all - it's the server's own decrypted copy of another user's session.

using SSL and the likes is not a good enough reason to skip challenge-response authentication or similar common security measures.

That is true. Where Heartbleed would have been useless would have been in cases where the submission protocol sends the data in a form inherently useless to a recipient. Challenge response is a good example, as are two factor tokens, one and a half factor schemes such as pinsafe, or appeal to an oracle such as NTLM can provide for users already authenticated to a domain. Clientside certificate authentication is also safe, provided the client hasn't been compromised similarly.
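To make "a form inherently useless to a recipient" concrete, here is a minimal challenge-response sketch in Python. It is an illustration under assumptions, not any particular site's scheme: the function names are hypothetical, and it assumes client and server already share a secret. The value that crosses the wire is an HMAC over a one-time challenge, so a copy of it lifted from a request buffer does not work against the next challenge.

```python
import hashlib
import hmac
import secrets


def make_challenge():
    """Server side: a fresh random challenge for each login attempt."""
    return secrets.token_bytes(16)


def client_response(shared_secret, challenge):
    """Client side: prove knowledge of the secret without sending it."""
    return hmac.new(shared_secret, challenge, hashlib.sha256).digest()


def server_verify(shared_secret, challenge, response):
    """Server side: recompute the HMAC and compare in constant time."""
    expected = hmac.new(shared_secret, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    secret = b"example shared secret"
    first = make_challenge()
    answer = client_response(secret, first)
    print(server_verify(secret, first, answer))             # accepted
    print(server_verify(secret, make_challenge(), answer))  # replay rejected
```

One caveat with this simple design: the server still holds the shared secret somewhere, so the scheme protects what is in transit and in request buffers, not a secret the server process itself keeps in memory.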

However, up until now, SSL was a good reason to skip more complex protocols - if you encrypt the tunnel, then the transaction is supposedly "safe", so why use something complex that might not work clientside? Common wisdom is to use an SSL "wrap" to protect credentials, credit card details, and so forth, plus the data that use of the credentials is meant to prevent access to.

security vendors, cert issuers are going to make big money out of this while they are the ones who should have discovered this bug months ago.

No disagreement there, but - have you SEEN the OpenSSL code base? Due to the fact it has been hacked and patched for a considerable number of years now, it is an absolute nightmare to try and get up to speed on. The person who should have caught this was the lead (and only full-time) developer on the OpenSSL project, who is the one who approved the new code being added. The author of the patch clearly needs to take primary blame - he WROTE the standard he was implementing, and in that standard, wrote that if the value that is now a problem was "too large" the whole packet must be silently discarded. That is of course the bug in a nutshell, so effectively, the bug he added was exactly the bug that his own standards document told him not to add.... But a more experienced head should have looked, said "hmmm, we get this number from the packet - where is the validation for it?" and kicked it back to the author.
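The missing validation can be shown with a small Python model. This is a simplified simulation, not the OpenSSL code: process memory is modelled as one `bytes` object, the heartbeat's type, length and padding fields are left out, and every name is hypothetical. The only point is the comparison between the length the packet claims and the length that actually arrived.

```python
def heartbeat_reply(memory, payload_start, actual_payload_len, claimed_len, validate):
    """Echo a heartbeat payload back to the sender.

    memory             -- stand-in for the server's heap
    payload_start      -- where the received payload sits in that heap
    actual_payload_len -- how many payload bytes really arrived
    claimed_len        -- the length field taken from the packet itself
    validate           -- True models the fixed behaviour
    """
    if validate and claimed_len > actual_payload_len:
        # The standard's rule: a too-large length means discard silently.
        return None
    # Without the check, the copy runs past the payload into whatever
    # happens to sit next to it in memory.
    return memory[payload_start:payload_start + claimed_len]


if __name__ == "__main__":
    # A 4-byte payload followed by another user's form data.
    heap = b"ping" + b"user=alice&pass=hunter2"
    print(heartbeat_reply(heap, 0, 4, 4, validate=False))          # honest request
    print(heartbeat_reply(heap, 0, 4, len(heap), validate=False))  # over-read
    print(heartbeat_reply(heap, 0, 4, len(heap), validate=True))   # discarded
```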

thanks for answering --constructively-- to my flamish post

The attack can be repeated several times per second so determined harvesting should expose many of the logins that occurred

from what i heard until now, you'd still be bound to the heap of the server process so you would not be able to read authentications occurring in a separate process. do you have reasons to believe otherwise ?

Heartbleed isn't about sniffing. It is about disclosing the contents of process memory (including the data sent and received by other users) without any further tools needed, or without any trace in the logs

this is supposedly possible, i admit. but even if stealing the certificate key is quite feasible, a large scale attack that would steal passwords from a major host is quite unrealistic.

and most if not all bank sites (or other security-sensitive servers) have more complex authentication mechanisms even though they use ssl. additionally most security-oriented servers have additional protections that would allow them to detect such large scale attacks.

once you have the cert, if you can sniff the network, retrieving unprotected passwords is easy, of course. this is where PFS comes in handy.

i maintain that people with such skills probably have better things to do than steal your wordpress.com password and that there are far more chances that your password or credit card numbers got caught by a key logger than by using heartbleed.

No disagreement there, but - have you SEEN the OpenSSL code base?

if it is that bad, it is high time people read such code and stop accepting updates with messy stuff. additionally, you don't need to read the code to disable a new functionality that you do not need.

The author of the patch clearly needs to take primary blame

this is probably true, but not very inclusive. every single person who used that version in a security-critical environment is to blame, for not looking at the code, for enabling a useless functionality at compilation-time (or for blindly using binaries), and most of all for not using an extra layer of security at least for authentication, not to speak about those who accepted the patch, those who updated repositories that would be used worldwide without looking, and worse those so-called security experts who got paid for advising to update openssl because their automated vulnerability scanner was able to detect some minor likely inapplicable vulnerability in the previous version.

--

note that my point is not to minimise heartbleed.

i just think that people concerned with security need to learn other lessons than "hopefully i patched early so i should be ok" which is the basic dumb reaction of most admins (or has been for the past 10 years at least) while most end users probably could not care less or they should get aware of a whole bunch of other stuff at the same time

i also think that publishing lists of impacted sites all over the web is less constructive than wondering about what those of us who are supposed to be security-aware should/could have done in order not to be impacted at their own level

from what i heard until now, you'd still be bound to the heap of the server process so you would not be able to read authentications occurring in a separate process. do you have reasons to believe otherwise ?

Yes. If nothing else, it has been proven in practical tests that Heartbleed can see session data from other processes, not even just the (eg) webserver it has been bound to, but other processes too. One of the "nice" things (if you want to call something this severe nice) about this is that you can go test this yourself - set up a vulnerable server using s_server (built into the openssl tool), connect to it with any of the available POC scripts, and see what you get back. For that you will need a copy of the 1.0.1e build of openssl (any platform!) and a script suitable for your environment. I used check-ssl-heartbleed.pl largely because I do a lot of perlscripting, but also because it was convenient to integrate it into my existing nagios environment for ad-hoc checking of monitored hosts. If you don't fancy doing your own testing, there are a fair few examples posted - for instance

this is supposedly possible, i admit. but even if stealing the certificate key is quite feasible, a large scale attack that would steal passwords from a major host is quite unrealistic.

Why? Once you have a couple of examples, it would be trivial to code something for a botnet that grabs a 64K block once per second, parses it for important data (login details and/or credit card details) then discards the rest. The trojan at Target did essentially the same thing - looked for CC data in memory then stored it to a local db for deduplication, uploading only uniques to the attackers.
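How little work the "parse, deduplicate, discard" step takes can be seen from a short Python sketch. It is an assumption-laden illustration, not a description of any real tool: the function names are hypothetical, the input is simply a block of bytes (for example, a dump captured from your own lab server), and candidates are filtered with the standard Luhn checksum, the same test a data-loss scanner would apply.

```python
import re


def luhn_valid(digits):
    """Standard Luhn checksum over a string of decimal digits."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_like_numbers(block, seen):
    """Return Luhn-valid 13-16 digit runs in block not already in seen."""
    text = block.decode("latin-1")  # latin-1 maps every byte, so no errors
    fresh = []
    for candidate in re.findall(r"(?<!\d)\d{13,16}(?!\d)", text):
        if luhn_valid(candidate) and candidate not in seen:
            seen.add(candidate)  # deduplicate across blocks
            fresh.append(candidate)
    return fresh


if __name__ == "__main__":
    seen = set()
    # 4111111111111111 is a widely published test card number.
    sample = b"junk\x00cc=4111111111111111&cvv=123\x00x=4111111111111112"
    print(find_card_like_numbers(sample, seen))
    print(find_card_like_numbers(sample, seen))  # nothing new the second time
```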

and most if not all bank sites (or other security-sensitive servers) have more complex authentication mechanisms even though they use ssl. additionally most security-oriented servers have additional protections that would allow them to detect such large scale attacks.

Indeed so. Mine uses an interstitial after the (otherwise just <form>) initial login, which prompts for three letters taken from a 12 letter "passphrase", by offset (so it may ask for letters 4, 8 and 9 for example). An attacker would need to both know which letters were asked for, and get lucky (in being asked for the right three letters again) to get past that, so I suspect it is specifically aimed at this class of attack.
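The "get lucky" part can be put into numbers with a short Python sketch. It assumes the bank picks the three positions uniformly at random, which is a guess about the scheme rather than something stated above, and all names are hypothetical. Under that assumption there are C(12,3) = 220 possible prompts, so an attacker who captured one answer is asked for exactly the same three letters about once in 220 attempts.

```python
import math
import secrets


def pick_positions(passphrase_length=12, count=3):
    """Choose distinct 1-based letter positions for one login prompt."""
    positions = set()
    while len(positions) < count:
        positions.add(secrets.randbelow(passphrase_length) + 1)
    return sorted(positions)


def check_answer(passphrase, positions, answer):
    """Compare the submitted letters with those at the requested positions."""
    expected = "".join(passphrase[p - 1] for p in positions)
    return secrets.compare_digest(expected.encode(), answer.encode())


def chance_same_prompt(passphrase_length=12, count=3):
    """Probability that a later prompt asks for the same positions again."""
    return 1 / math.comb(passphrase_length, count)


if __name__ == "__main__":
    print(pick_positions())
    print(check_answer("correcthorse", [4, 8, 9], "rho"))
    print(chance_same_prompt())
```

A captured answer also reveals those three letters for good, so the protection erodes as more prompts are observed.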

However, I just checked four buy-online sites here in the uk, and without exception

a) the login was a simple <form>

b) the checkout screen which prompted for CC details had clientside validation, but was a simple <form> for submission purposes.

So, my bank account (which to be honest, is not much use to an attacker anyhow. I can look at my recent transactions and that's about it) is secure, but a site where I type my CC details, complete with the three check digits on the back of the card, isn't.

Clearly, it is possible to mitigate the issue, but how many sites will have done that?

once you have the cert, if you can sniff the network, retrieving unprotected passwords is easy, of course. this is where PFS comes in handy.

PFS is overrated. While its key limit is not terrible (it is limited to a 1024 bit key, considered insecure for primary ssl keys these days, but probably tolerable for a session key) an active attacker can simply not offer those suites. Of course, that requires a MITM intercept, but doing so is not significantly harder than sniffing in modern networks (in fact, in a switched environment, sniffing pretty much requires some sort of modification to the topology to work).

The great strength of PFS is the task it is designed for - the NSA have a huge stockpile of captured traffic, awaiting either advances in cryptanalysis or capture of the secret key to decrypt. In the case of PFS, that secret key is useless for historic captures; either they have some way to make breaking a 1024 bit key per message economic, or they are out of luck.

i maintain that people with such skills probably have better things to do than steal your wordpress.com password and that there are far more chances that your password or credit card numbers got caught by a key logger than by using heartbleed.

Depends on the model.

If I can (for example) target dabs.com, and get a fresh set of CC data every two minutes, AND don't care too much whose CC data I get, then it's a low-risk, high gain solution.

If I have to get a keylogger tracelessly onto a machine, hope that its user types some CC data, then exfiltrate that data, then that is HARD, and likely to have a much lower "hit" rate.

The other risk of course is that passwords are rarely unique per site. Many, even most, users re-use the same password on multiple sites, so it is worthwhile trying what you capture from (say) a wordpress site on other, non-vulnerable sites. If you can get into their webmail? game over! you can then go to any site they have an account on, and invariably, being able to click a link from an email sent to their email account is all that is needed to reset a password.

if it is that bad, it is high time people read such code and stop accepting updates with messy stuff. additionally, you don't need to read the code to disable a new functionality that you do not need.

I am actually a half-decent coder; I do it for a living, although not for OpenSSL :)

I had never seen the patch in question until this happened - I do *have* a fully synched copy of the repo for this project, but only so I can hunt through its header files for the real meaning of the often obscure errors it kicks out.

For a lot of use cases, yes, you *do* need to read the code. Often code like this is taken on trust; if you don't advertise a facility for $FOO, then you don't expect anyone to try $FOO, so the disable flag just stops the advertisement.

OpenSSL does have a flag, and yes it actually removes the hooks that cause this code to be called - that flag is OPENSSL_NO_HEARTBEATS. A quick grep through the codebase gives me a heap of "hits" on this, showing that it disables functionality in both s_client and s_server (the former should tell you why reverse-heartbeat is such an issue).

Where it doesn't give me any hits, is anywhere in the documentation. The only way to know this flag even exists, is to go to the code.

However, this is a compile time flag. I have never, ever compiled OpenSSL myself. I *could*, but it would be a pain, because the next time OpenSSL was updated, I would need to do it again, and then again the next time, for the rest of time. Or I could just use the already-compiled versions, which in the case of linux, means just *approving* an update to the latest version when it is announced that there is a fix in there for a security issue.

this is probably true, but not very inclusive. every single person who used that version in a security-critical environment is to blame, for not looking at the code, for enabling a useless functionality at compilation-time (or for blindly using binaries), and most of all for not using an extra layer of security at least for authentication, not to speak about those who accepted the patch, those who updated repositories that would be used worldwide without looking, and worse those so-called security experts who got paid for advising to update openssl because their automated vulnerability scanner was able to detect some minor likely inapplicable vulnerability in the previous version.

I am sure there are some gentoo users out there who have a policy of carefully checking all the source of each module before they emerge it.

Some of them may even have a viable system that can take text input one of these years.

Everyone else? we have to trust that the gatekeepers of a project got it right, and if they failed, that the package maintainers who build the binary we rely on checked it for sanity first, and disabled something they didn't like while they kicked an exception report back upstream. Doing anything else is the path of madness - the OpenSSL project alone is 20MB of text files, all interconnected, so that analysis must hop back and forth between dozens of different header and library files to see what calling a given function actually does, and what the flags passed, used, and returned actually mean.

Doing that on a well structured, well documented solution would take weeks, just to get up to speed on what does what, and why. Bug hunting? that would take months, if you were approaching it cold, and you are still likely to miss anything subtle.

Doing that on OpenSSL? I wouldn't even attempt it, seriously. It is bad enough sitting with wireshark open on one screen, the source tree open on the other, and grepping through for why SSL2_ST_SEND_SERVER_VERIFY

However, there *are* people who are paid to do this. Two of them (coincidentally on the same day, if you believe in coincidences) found this bug. I virtually guarantee there are people out there who watch each patch as it is submitted though, looking for fresh exploits; some of them will be criminals, some will work for the government (so a different set of criminals :) and some will be private researchers hoping to make a name for themselves by finding a major security flaw in a well known package like OpenSSL.

and worse those so-called security experts who got paid for advising to update openssl because their automated vulnerability scanner was able to detect some minor likely inapplicable vulnerability in the previous version.

Pretty much, yeah. There is an entire *class* of security experts whose only job appears to be to watch [bugtraq] or [FD], find POC code, and add it to metasploit pro/nessus/scanner-of-choi

That is nice and neat, and keeps people employed. Having a nice, pretty report saying "here is what we found; CVE says this is a low/medium/high risk of log-alerts/dos/compromise/

i also think that publishing lists of impacted sites all over the web is less constructive than wondering about what those of us who are supposed to be security-aware should/could have done in order not to be impacted at their own level

Bottom line? None of us has the time or (in the case of a fair few of my colleagues) the skill to catch this sort of thing, and we were blindsided. Does that mean that they are not skilled? Well, no. One guy on the payroll for us is a double CCIE - routing/switching AND security. He has probably forgotten more about firewalling and packet analysis than I will ever know. Another? He has religiously followed everything Laura Chappell has ever released, can make Wireshark throw out data even the servers didn't know they had, and on one occasion, had Wireshark outputting VoIP audio *ahead* of the device that was supposed to be receiving it.

However, this sort of analysis is very, very rarefied territory indeed. You need to be intimately familiar with the codebase; have read the RFCs until the print is wearing out, fully understand what the changes do and why they do them, and THEN look at them like a blackhat and think "where are the holes in this? what has been left out, and how can I exploit it?" - Because as defenders, we occupy the classic "position of the interior" - we must find and plug every hole in our defences, every time. An attacker need find only one, and need succeed only once.

Could I have caught this, if it were my full time job to work on OpenSSL? Possibly not. I don't know, it's one of those questions that can't be answered. Was someone being *paid* to catch this sort of thing? Yes, and he missed it. However, he, we, the people at security firms and anyone else who is involved here are just backstops - if the person doing the work messes up, we *might* catch it, but he shouldn't mess up to start with.

What should be taken away from this is that one guy, however skilled, shouldn't be the only backstop between fsckups and the world going up in flames - and that's down to money. Far, far too many companies use OpenSSL, and don't contribute a single penny to its upkeep. Look at all the big names (like Cisco!) that have reported Heartbleed vulns in their products, then look at how much they contributed towards keeping that key piece of software up and running properly. Sometimes, you can't expect the work of a tiger if you have the budget for a housecat.

Yes. If nothing else, it has been proven in practical tests that Heartbleed can see session data from other processes, not even just the (eg) webserver it has been bound to, but other processes too.

the example below does not pull data from a different process. i played with some poc scripts as well. for now the most demonstrative was a python script that actually managed to automate stealing certificates. if you could effectively see memory from other processes, the possibilities explode literally, unless ssl decoding is done in a reverse proxy.

normally, looking at another process's data should result in a segfault, so the answer might well be OS-dependent

Why? once you have a couple of examples, it would be trivial to code something for a botnet that grabs a 64K block once per second, parses it for important data (login details and/or credit card details) then discards the rest

not quite even on unsecure sites (which admittedly are the vast majority).

for one reason, such information is typically not resident in the memory of the server for long (let's say that it might stay 1ms on a crawling-half-dead server), even less as plain text, meaning you'd have to be one of the very unlucky few to have somebody stealing precisely the 64k of memory relative to your session at that specific moment. i still believe that getting your password stolen by a key logger of some sort (and this is just an example) is MUCH more likely. obviously sites that do challenge response are hardly impacted.

So, my bank account (which to be honest, is not much use to an attacker anyhow. I can look at my recent transactions and that's about it) is secure, but a site where I type my CC details, complete with the three check digits on the back of the card, isn't.

do you actually type credit card info in a non-banking site ? seems situations differ with people. i'm pretty confident that insurances or visa will likely pay in such cases (like they historically have been doing for a looong time without questioning). on the other hand, i'm unsure if my bank will do something about someone wiring the contents of my account to china.

--

regarding PFS, i pretty much agree that it is not that efficient (when compared to the complexity of sniffing internet connections). then i'm unsure that someone who has a cray available for a couple of hours will use it in order to sniff an end-users' connection to his mailbox or even vendor's site

--

Depends on the model.

If I can (for example) target dabs.com, and get a fresh set of CC data every two minutes, AND don't care too much whose CC data I get, then its a low-risk, high gain solution.

interesting point. agreed without reserve except for the rate which seems difficult to estimate

If I have to get a keylogger tracelessly onto a machine, hope that its user types some CC data, then exfiltrate that data, then that is HARD, and likely to have a much lower "hit" rate.

not quite. most such tools are capable of automatically detecting what the user is doing. i've seen one that would take screenshots and log keypresses for a couple of minutes whenever it detected a connection to an ssl server, with the exception of self-generated certs, and the addition of a hard-coded list of sites. i've seen one that triggered full session records on specific sites.

The other risk of course is that passwords are rarely unique per site. Many, even most, users re-use the same password on multiple sites, so it is worthwhile trying what you capture from (say) a wordpress site on other, non-vulnerable sites. If you can get into their webmail? game over! you can then go to any site they have an account on, and invariably, being able to click a link from an email sent to their email account is all that is needed to reset a password.

that's a fact i was skipping. thanks for pointing this out.

on the other hand, it requires human intervention

The only way to know this flag even exists, is to go to the code.

the configure script is enough to see the functionality exists.

unfortunately it's not mentioned in the help and there is no way to disable heartbeats from the configure script apart from disabling tls or tls1 altogether (in 1.0.1c which i picked randomly)

I am sure there are some gentoo users out there who have a policy of carefully checking all the source of each module before they emerge it.

the problem is not really about compiled vs precompiled : it is perfectly understandable that a gentoo admin who installs openssl as a dependency of something that might be installed with an automated script does not look at the code.

it is much less understandable that among the hundreds of people who are maintainers for such a security-oriented package (and not whole package trees), not a single one of them saw something that big. some of them may have looked and missed it, but it is likely that most of them just push packages without looking. we're relying on a chain of trust instead of a chain of defiance, that's the problem.

additionally, here is a quote from the openssl release notes

Major changes between OpenSSL 1.0.0h and OpenSSL 1.0.1 [14 Mar 2012]:

TLS/DTLS heartbeat support.

not a single package maintainer or security expert looked at that specific part of the code ? makes you wonder how many actually even look at the release notes ? or on the paranoid side, how many preferred to sell the information rather than releasing it ?

then write it up in a pretty report and submit it to the customer

hmm, the last one i saw, submitted the output of an automated tool without even bothering to digest it in any way. well that was web format with links to online description of the vulnerabilities so it looked pretty nice :P

Could I have caught this, if it were my full time job to work on OpenSSL? possibly not.

in my case, most likely not even given enough time ;), but i would never dare to pose as the maintainer for openssl whatever the OS

then i'm not an update maniac so i could have been lucky with most of the servers i worked on. possibly also gotten through because of architectures with ssl decoding at reverse proxies, definitely not gotten passwords (one time hashes at best) stolen from the memory of a web server if the security was even mild, and most definitely even the password would not be enough in that case (something you know, something you have, something you are... that's for the basics and far from exhaustive)... but then did rely on SSL for many things. i'm still looking back to see in what cases it was too much expectations.

What should be taken away from this is that one guy, however skilled, shouldn't be the only backstop between fsckups and the world going up in flames

totally, and shooting at the guy is just a way not to look at what we did wrong and why. there is some thinking to do both regarding how cisco, redhat, or others who are less rich work with their packages, and also some work to do internally.

best regards

the example below does not pull data from a different process. i played with some poc scripts as well. for now the most demonstrative was a python script that actually managed to automate stealing certificates. if you could effectively see memory from other processes, the possibilities explode literally, unless ssl decoding is done in a reverse proxy.

Well, that doesn't seem to match what the discoverers say - which isn't to say I am disagreeing - I would much rather you be right on this one, and it is entirely possible I am misreading or misunderstanding what they are asserting.

Here is what they have right on their front page:

What leaks in practice?

We have tested some of our own services from attacker's perspective. We attacked ourselves from outside, without leaving a trace. Without using any privileged information or credentials we were able steal from ourselves the secret keys used for our X.509 certificates, user names and passwords, instant messages, emails and business critical documents and communication.

Now, it is possible that they had (or deliberately created, for purposes of the exercise) webservers with business critical documents, an instant messaging server, and an email server, all with OpenSSL 1.0.1; it's also possible the above text was written by the marketing department rather than the technical staff, given most of the "heartbleed" site, and indeed the name and logo itself, were designed in order to maximise the publicity value of the vulnerability.

Your point on segfaults is well taken - I have seen similar myself when a pointer offset has gone out of bounds (not to mention the usual stack-smashing that is the usual target of buffer overflows, although it's usually autovars that get dropped on the stack) and I don't think *nix based OSes are much different in that regard.

for one reason, such information is typically not resident in the memory of the server (let's say that it might stay 1ms on a crawling-half-dead server), even less as plain text, meaning you'd have to be one of very crazily unlucky people to have somebody stealing precisely 64k of memory relative to your session at that specific moment.

I would say the opposite. Usually, the process that has just received CC data goes ahead and contacts the merchant bank that the company uses to fulfil CC orders; that exchange takes a little while, but usually results in either a reject reason code, or a fulfilment handle representing the money that is now "locked" in the account or card. The process can then return a suitable next page to the customer (usually, some sort of order tracking page "to print for your records") and discard the CC data as it is no longer needed. Given it will also need to update a bunch of stuff at the backend before and after that (reserving product for the order, marking it as payment authorized, then throwing it out for picking and shipping) it's unsurprising it takes a fair bit longer than the much less involved process of logging a user into the site and issuing a cookie - yet almost all the POCs show clear examples of both POST and GET data from user logins.

do you actually type credit card info in a non-banking site ? seems situations differ with people.

Sure. How else are you going to shop online? (Well, that and PayPal, but I hate PayPal :)

I am actually safer there, in that in cases of reported cardholder-not-present fraud, the banks invariably just back out the transaction and credit the account (debiting the merchant again, but NOT refunding the processing fee) and the merchant then has to fight hard to get that money back again (or just take the loss; it's hard to run an online business now without the ability to take credit cards, so at some point they need to suck it up and write it off, rather than lose access).

My bank's fraud prevention is actually quite good - if I spend an uncharacteristic amount (such as suddenly making a payment of pretty much my entire balance to China :) then they will put the transaction on hold and phone my landline for confirmation. In some ways, that sucks - I have been on holiday, and suddenly unable to use my card because I wasn't home to answer that landline call, and card transactions were showing up in France - but it's a lot better than suddenly finding my account empty. Those were actually card-holder-present transactions though, so maybe the bank was more wary of having to eat the loss itself.

not quite. most such tools are capable to automatically detect what the user is doing. i've seen one that would take screenshots and log keypress for a couple of minutes whenever it detected a connection to an ssl server, with the exception of self-generated certs, and the addition of a hard-coded list of sites. i've seen one that triggered full session records on specific sites.

Sure; seen the same myself. However, the point is that a typical home user isn't going to type in his CC data very often, if at all; a browser plugin hijack is even more efficient. As a side issue, I note that one way adware has been getting onto systems lately is for a well respected plugin to have its site hijacked and/or the plugin bought legitimately from its author/owner, then an "update" put on the site that in fact serves adware from the browser with no further changes to the code made. Such a method would be even more effective for deliberately waiting for traffic to specific parts of specific sites (banking and ecommerce, perhaps only the payment fulfilment pages for the latter, unless they store payment details like Amazon do) before capturing the data from the plaintext copy within the browser itself.

However, even assuming that every user you infect types his CC data within one hour of infection - you are still looking at having to get the trojan onto one machine per set of CC captured, past the browser's safeguards, any AV running, and anything the OS puts in the way of hooking the keyboard stream. That's got to be a lot harder than making a few thousand repeats of HeartBleed to a popular ecommerce site.

The only way to know this flag even exists, is to go to the code.

Not sure. There is a point where 'heartbeats' is added to %disabled if tls1 is already a member of %disabled. However, that doesn't really tell you that you need to add "no-heartbeats" to the ./Configure command line in order to assert that flag manually (or add "heartbeat" => "default" to the definition of %disabled in ./Configure, of course).

the configure script is enough to see the functionality exists.

it is much less understandable that among the hundreds of people who are maintainers for such a security-oriented package (and not whole package trees), not a single one of them saw something that big. some of them may have looked and missed it, but it is likely that most of them just push packages without looking. we're relying on a chain of trust instead of a chain of defiance, that's the problem.

Indeed so. I suspect the Debian maintainer for this package just checks it compiled properly and that's about it.

then write it up in a pretty report and submit it to the customer

Yeah. Given Nessus will quite happily lease you the hosted online version of their tool these days, you have to wonder why you are paying some other company $3K per IP to run a tool you could get for $40/IP/month direct from the vendor :)

hmm, the last one i saw, submitted the output of an automated tool without even bothering to digest it in any way. well that was web format with links to online description of the vulnerabilities so it looked pretty nice :P

Seriously though, a decent company will at least go and put the results in a word template for you, removing the obvious false positives and irrelevancies,

Could I have caught this, if it were my full time job to work on OpenSSL? possibly not.

Not sure. I would like to think I would look at the diffs, go "what is that he is adding then?", find the RFC, find the line that specifies that parameter needs to be checked for size, then go look to see if he indeed checked it for size.
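
For anyone following along, the missing size check can be shown with a toy model. This is a minimal sketch in Python, not OpenSSL's code: the flat `memory` buffer, the sample form data and both handler names are made up for illustration. The only thing taken from the RFC (6520) is the rule that a heartbeat whose claimed payload length is larger than what actually arrived must be silently discarded.

```python
# Toy model of a heartbeat handler. NOT OpenSSL code: the "memory" layout
# and all function names here are hypothetical.

def build_memory(payload: bytes, neighbours: bytes) -> bytes:
    """Place the received payload next to unrelated data, as a heap might."""
    return payload + neighbours


def handle_heartbeat_unchecked(memory: bytes, claimed_len: int) -> bytes:
    """Echo back claimed_len bytes, trusting the length field in the request."""
    return memory[:claimed_len]


def handle_heartbeat_checked(memory: bytes, actual_len: int, claimed_len: int) -> bytes:
    """Discard any request that claims more payload than was actually sent."""
    if claimed_len > actual_len:
        return b""  # silently dropped, nothing echoed
    return memory[:claimed_len]


payload = b"bird"  # what the client really sent (4 bytes)
memory = build_memory(payload, b" user=alice&password=hunter2")

# The client lies and claims its payload was 32 bytes long.
leaked = handle_heartbeat_unchecked(memory, claimed_len=32)
safe = handle_heartbeat_checked(memory, actual_len=len(payload), claimed_len=32)
honest = handle_heartbeat_checked(memory, actual_len=len(payload), claimed_len=4)
```

Here `leaked` contains the neighbouring login form data, `safe` is empty, and `honest` is just the original 4 bytes. The real bug had the same shape, with up to 64K returned per request.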

in my case, most likely not even given enough time ;), but i would never dare to pose as the maintainer for openssl whatever the OS

Of course, I might not be as diligent just before midnight on new years eve either :)

then i'm not an update maniac so i could have been lucky with most of the servers i worked on.

I think I am more lucky in that most of the servers I have are not, by any stretch of the imagination, web facing, and of those that are, most have no need for (and hence, do not have) SSL on them. For email we front things through Mimecast (so no direct access to the servers to or from the web).

The servers that do need HTTPS are Java, hence Tomcat (luckily, not fronted via Apache), or appliances which, like most such things, are so far behind they are still on OpenSSL 0.9.8.
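
As a rough first-pass check, the affected range can be written down in a few lines. This is a sketch only: the function name is made up, and it takes a bare upstream version string. The affected releases are 1.0.1 through 1.0.1f plus 1.0.2-beta1; the 0.9.8 and 1.0.0 branches never had the heartbeat code. Distribution packages often backport the fix without changing the upstream version string, so a "vulnerable" answer here still needs checking against the vendor's advisory.

```python
import re

# Upstream releases that carry the bug: 1.0.1 through 1.0.1f (fixed in 1.0.1g),
# plus the 1.0.2-beta1 pre-release.
_AFFECTED_101 = re.compile(r"^1\.0\.1[a-f]?$")
_AFFECTED_OTHER = {"1.0.2-beta1"}


def is_heartbleed_affected(version: str) -> bool:
    """Return True if a bare upstream version string is in the affected range.

    Hypothetical helper for illustration; it knows nothing about
    distribution backports.
    """
    version = version.strip()
    return bool(_AFFECTED_101.match(version)) or version in _AFFECTED_OTHER


for candidate in ("0.9.8y", "1.0.0l", "1.0.1", "1.0.1e", "1.0.1g"):
    print(candidate, is_heartbleed_affected(candidate))
```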

What should be taken away from this is that one guy, however skilled, shouldn't be the only backstop between fsckups and the world going up in flames

Or look at what can be done to replace it. I know GnuTLS had an issue the other day, but the code is a LOT cleaner than openssl, and many servers can use either (mod_gnutls, for example, supports a lot more than mod_ssl does - such as SNI for name-based vhosting without SAN certificates).

totally, and shooting at the guy is just a way not to look at what we did wrong and why. there is some thinking to do both regarding how cisco, redhat, or others who are less rich work with their packages, and also some work to do internally.

I am actually a great fan of the Bouncy Castle libraries (they even do OpenPGP :) but given those are java/c# only, not really useful in context.

Oh, and as another Learning Event for me (I seem to have a fair number this week :) It has been pointed out that CVE-2013-0156 dwarfed Heartbleed by an order of magnitude - to quote "If Heartbleed was an 11, CVE-2013-0156 was at least a 13"

But of course it was near-unheard of outside of the Rails community, as it didn't have a cool name or logo...

I would say the opposite. usually, the process that has just received CC data goes ahead and contacts the merchant bank that the company uses to fulfil CC orders; that exchange takes a little while, but usually results in either a reject reason code, or a fulfilment handle representing the money that is now "locked" in the account or card

interesting. i'm no expert but the ones i've been working with (and paying on) usually redirected you instantaneously. actually i'm unsure about the persistency of the data, involvement of separate processes. admittedly some of them must be vulnerable indeed. it is also a fact that such information could be retrieved as post data.

anyway there is nothing the end user can do relative to past payments apart from changing his credit card. my assumption would be that impacted users would know by now.

maybe double-checking your account for a few months, looking for micropayments, could be reasonable advice to give to users who do lots of online shopping... but that would be reasonable advice without heartbleed as well.

However, even assuming that every user you infect types his CC data within one hour of infection - you are still looking at having to get the trojan onto one machine per set of CC captured, past the browser's safeguards, any AV running, and anything the OS puts in the way of hooking the keyboard stream. That's got to be a lot harder than making a few thousand repeats of HeartBleed to a popular ecommerce site

i would not be too sure. hijacking a popular extension like ad-block would give you access to 5% of the internet users (in europe), sending a virus or worm is done through a single operation. anybody who helped windows users recently noticed that about 99% of the computers have annoying browser bars most of which leak personal data

Or look at what can be done to replace it. I know GnuTLS had an issue the other day, but the code is a LOT cleaner than openssl

no idea about the code, and actually i'd probably trust openssl over gnutls (even more now, after heartbleed). but gnutls has license issues that will never make it a suitable replacement. basically like always, the gpl version has to be used in gpl software and in gpl software only. (not discussing the openssl exception)

---

i'm still interested in having a definitive answer regarding the possibility to steal information from other processes or possibly kernel memory. i believe the answer is most likely os-dependent. one thing that is pretty sure is that no one managed to steal OS passwords (or password dbs) or that would be all over the internet. and many must have tried.

likewise, afaik, the certificates are not stuffed in shared memory but i'm not sure about that either. this may lead to the possibility to steal other keys such as ssh keys and the like (this one definitely does not concern regular end users)

thanks again for the neat discussion

hijacking a popular extension like ad-block would give you access to 5% of the internet users (in europe), sending a virus or worm is done through a single operation.

Yes, I sometimes have nightmares about that. However, that is sufficiently uncommon (and would be caught pretty fast now that the security community is explicitly watching for it) that some sort of drive-by infection is more likely.

basically like always, the gpl version has to be used in gpl software and in gpl software only. (not discussing the openssl exception)

Erm, the GnuTLS project is LGPL, not GPL. You can use it in commercial software without it infecting the commercial software with the GPL, provided the code is supplied in such a form that modified versions of the LGPL library can be compiled and used with the package without any restriction (usually, supplying the library as a .dll or .so file is the method used).

Commercial companies still prefer a BSD licence though, as now the acknowledgement requirement has been removed, they can use someone else's code to do all the heavy lifting and not admit it in their documentation :)

thanks for pointing out the lgpl license.

but given the crazy number of bugs, vulnerabilities, and other implementation problems such as lack of proper binary support, i would not recommend switching to gnutls.

example that has been around for the past 10 years and that allows to craft fake certificates

http://rhn.redhat.com/errata/RHSA-2014-0246.html

fortunately, this one apparently will not let one authenticate against ssh servers

maybe we should switch to tcp over pgp-encrypted emails ;P

more seriously, there are a few alternatives around. NSS might be a reasonable choice with a good code base and frequent auditing. and there are also a few lesser-known candidates such as polarssl but i would not bet on their security.

for now, my personal bet will still be openSSL in most use cases combined with not relying solely on ssl, but i'm not currently working in a field where tcp encryption is that critical

but given the crazy number of bugs, vulnerabilities, and other implementation problems such as lack of proper binary support, i would not recommend switching to gnutls.

That I can accept - I am not convinced GnuTLS is yet "enterprise ready" - however, it would make more sense (in my opinion anyhow :) to fix GnuTLS and switch to that, than try to unbreak OpenSSL.

The GnuTLS verification bug is identical (in effect, if not in code) to one that affected the IE browser a few years back and hence should have been checked for then. How it works is this: the function that checks if an intermediate (signing) certificate IS a CA returns 1 if it is, 0 if it is not, and -1 if an error occurred during the check.

The relying function checks for 0, and if the result is not 0, assumes it's OK. Clearly, this is not good behaviour; however, the world in C is divided into "values that indicate FALSE" and "values that indicate TRUE", and both 1 and -1 are "TRUE" by the rules, so the relying function is not wrong in its reliance; the function itself is wrongly returning a value that evals to "true" (and yes, I know you already know this, this is largely aimed at other people following along :)

In effect, this allows someone to use any valid certificate as if it were an intermediate CA - they must first of course have a valid certificate, and there is a clear (and damning) paper trail back to the signing entity, although many attackers won't care too much about that.
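
The shape of that mistake is easy to reproduce. The following is a minimal sketch, not GnuTLS's code: the dict-based "certificate" and all three function names are invented for illustration. Python happens to share the relevant rule with C, in that any non-zero integer counts as true.

```python
# Hypothetical names throughout; this mirrors the shape of the bug only.

def ca_status(cert: dict) -> int:
    """Return 1 if cert is a CA, 0 if it is not, -1 if the check itself failed."""
    if cert.get("malformed"):
        return -1
    return 1 if cert.get("is_ca") else 0


def issuer_accepted_buggy(cert: dict) -> bool:
    """Treat any non-zero result as success, so the -1 error code passes."""
    return ca_status(cert) != 0


def issuer_accepted_fixed(cert: dict) -> bool:
    """Accept only the explicit success value."""
    return ca_status(cert) == 1


real_ca = {"is_ca": True}
leaf = {"is_ca": False}
crafted = {"is_ca": False, "malformed": True}  # forces the error path

for name, cert in (("real_ca", real_ca), ("leaf", leaf), ("crafted", crafted)):
    print(name, issuer_accepted_buggy(cert), issuer_accepted_fixed(cert))
```

The crafted certificate is accepted as an issuer by the buggy check and rejected by the fixed one; the other two behave the same either way, which is why this sort of thing survives ordinary testing.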

maybe we should switch to tcp over pgp-encrypted emails

And also RFC 1149? :)

more seriously, thare are a few alternatives around

True, but of the alternatives, almost all are "niche" libraries used in only one or two products. GnuTLS (although it is no longer a FSF project) does have a fair bit of support - in particular, I know Debian now prefer it over OpenSSL (largely for licensing reasons, I understand, rather than technical ones) and RedHat are focussing a *lot* of their development and bugfinding time on it - the implication being that they are looking to make it either a strong alternative or a direct replacement for OpenSSL in a lot of their core packages.

I suspect we wouldn't be having this conversation if that were true :)

but i'm not currently working in a field where tcp encryption is that critical

And of course it's not just TCP - one major reported "victim" of the bug is OpenVPN; this is unsurprising, given the heartbeat extension that contains the HeartBleed bug was written for both TLS and DTLS (keep-alives over datagram transports being the main motivation), and OpenVPN relies on OpenSSL's TLS for its control channel, usually over UDP.

SSL is a fact of life these days though - I doubt you can go more than a couple of hours without using it somewhere.

RFC1149 ?

sure ! send alternate bytes of the pgp-encrypted message using pigeons, the pgp key through the network, and attach devices to make sure the pigeons self-destruct if they happen to land in the wrong location (maybe send a few extra ones just in case, gosh animal lovers are gonna love me !)

--

interesting tips on gnutls. how long would you think it might take for gnutls to reach production-level ?

i also heard that the openbsd developers had recently forked openssl and that by removing lots of windows-specific and vms-specific code, they managed to un-bloat it largely ( i heard about a factor of 2-3 in overall code base but i'm quite unsure of the validity of the information ) ... as a heavy freebsd user, this is good news for me, and i assume it will reach the linux world soon enough. i'm pretty confident that there are enough security freaks in the openbsd development to reach good quality code in time.

i'm much more prone to believe in the work of openbsd guys than redhat's, but that's mostly a matter of personal feelings and not even next to objective.

--

thanks for pointing openvpn (and btw many vpn implementations).

fortunately, i'm not affected : i largely prefer SSH over VPNs whenever a VPN is not required (ie in most use cases and definitely in ALL cases that involve granting a single machine access to a remote network), and unfortunately, the performance toll imposed by vpns usually makes dedicated lines and selection of traffic to secure in other ways look cheap in comparison, not to speak of the awful pita vpns are to setup and maintain. ( you might notice a hint of personal dislike, there ;)

but then ssh also has a history of vulnerabilities, but nothing that bad.

--

as a side note, unfortunately, heartbleed demonstrates that encapsulating an encrypted flow inside another one with a completely different code base could hardly be considered as additional security. this is one of the personal lessons i'll have to remember.

---

i'm wondering why mostly vendors in the security field make such a fuss about heartbleed ? free advertisement ?

i think it more productive to remind the following :

- many security experts have been waiting until the necessity to change server certificates for those who were impacted was all over the internet before including it in their advice, and some still don't seem to be aware of that.

- end-users of software that make use of "perfect forward secrecy" have less risk to have experienced password hijacking. anyway, others are pretty near zero already. it might be high time we stop frightening end users like this. most users experience much greater security threats that we don't warn them about. let's be honest, this one has a nice name and impacts something everybody knows about, that's all. some major companies have real reasons to be worried, most end users don't. basically your little sister is much more likely to have spotted you typing your password than a malicious attacker who has managed to sniff an internet link and performed a crazily complicated attack just to steal your mailbox password...

- heartbeat is hardly useful as a functionality and discovering such a hole in security software just reminds us that each additional functionality is a potential bug. applying updates without wondering about new functionalities and new security threats is often worse than not applying them.

- using SSL and the like is not a good enough reason to skip challenge-response authentication or similar common security measures.

- security vendors, cert issuers are going to make big money out of this while they are the ones who should have discovered this bug months ago.
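
To make the challenge-response point above concrete, here is a minimal sketch using an HMAC over a one-time random challenge. It is an illustration under assumptions, not a recommended protocol: the three function names are made up, and it assumes client and server already share a secret (which the server therefore still has to store somewhere). The point it shows is only that the secret itself never crosses the wire, so a leaked request buffer holds a response that is useless for the next login.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Server side: generate a fresh random challenge for each login attempt."""
    return secrets.token_bytes(32)


def client_response(shared_secret: bytes, challenge: bytes) -> bytes:
    """Client side: prove knowledge of the secret without sending it."""
    return hmac.new(shared_secret, challenge, hashlib.sha256).digest()


def server_verify(shared_secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Server side: recompute the expected response and compare in constant time."""
    expected = hmac.new(shared_secret, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


secret = b"correct horse battery staple"  # hypothetical shared secret
challenge = new_challenge()
response = client_response(secret, challenge)

print(server_verify(secret, challenge, response))        # same challenge
print(server_verify(secret, new_challenge(), response))  # replay against a new one
```

A captured response only verifies against the challenge it was computed for, so replaying it against a later challenge fails.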