TBSupport

asked on

T-SQL: Cumulative SUM

Hello:

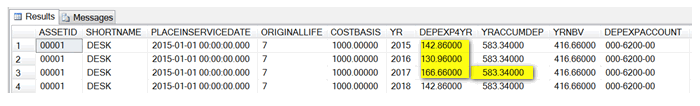

Attached is my T-SQL query. Also, attached are its results.

My focus, primarily, is on the YRACCUMDEP field. I need for this field to be a "cumulative sum" of the DEPEXP4YR field. For each YR field, YRACCUMDEP needs to sum its amount for DEPEXP4YR plus the amount of DEPEXP4YR from previous YR's.

For example, where YR = "2017", YRACCUMDEP needs to display the sum of 142.86, 130.96, and 166.66.

I don't know that I want this constructed as a CTE. I say that because, once I get my YRACCUMDEP field to where it suits my needs, I'm going to want the YRNBV field to subtract the COSTBASIS field and the YRACCUMDEP field for each YR.

Thanks! Please let me know, if there are any questions.

Much appreciated!

TBSupport

Need-Cumulative-Depreciation.docx

QUERY.docx

Attached is my T-SQL query. Also, attached are its results.

My focus, primarily, is on the YRACCUMDEP field. I need for this field to be a "cumulative sum" of the DEPEXP4YR field. For each YR field, YRACCUMDEP needs to sum its amount for DEPEXP4YR plus the amount of DEPEXP4YR from previous YR's.

For example, where YR = "2017", YRACCUMDEP needs to display the sum of 142.86, 130.96, and 166.66.

I don't know that I want this constructed as a CTE. I say that because, once I get my YRACCUMDEP field to where it suits my needs, I'm going to want the YRNBV field to subtract the COSTBASIS field and the YRACCUMDEP field for each YR.

Thanks! Please let me know, if there are any questions.

Much appreciated!

TBSupport

Need-Cumulative-Depreciation.docx

QUERY.docx

To accumulate a calculated column into another column can be achieved by "nesting" (there is no need for a CTE)

To accumulate a calculated column into another column can be achieved by "nesting" (there is no need for a CTE)However please also note you should solve the issue of DISTINCT before you start summing anything. Not only is it wasteful to be calculating rows that are eventually dispensed with via "select distinct" it is also potentially wrong (can produce the wrong figures).

If you run your existing query without DISTINCT what happens?

ASKER

Hi PortletPaul!

When I remove DISTINCT, I get lots of duplicated rows. For example, my query is returning 56 rows. Upon removing DISTINCT, I receive 334 rows--many of them duplicates.

TBSupport

When I remove DISTINCT, I get lots of duplicated rows. For example, my query is returning 56 rows. Upon removing DISTINCT, I receive 334 rows--many of them duplicates.

TBSupport

All of them are "unwanted repetition" (duplicated records are a different thing altogether, here we are dealing with a "resultset" not actual records).

This is produced by one or more of the joins you are using that multiply the rows.

You have to find which of those joins is doing the multiplication and use distinct or group by on that data BEFORE you join. Otherwise your arithmetic could be wrong.

"Select distinct" on the outer query is not the right way out of this issue.

https://www.experts-exchange.com/Programming/Languages/SQL_Syntax/A_12282-Select-Distinct-is-returning-duplicates.html

https://www.experts-exchange.com/Database/Miscellaneous/A_3203-DISTINCT-vs-GROUP-BY-and-why-does-it-not-work-for-my-query.html

This is produced by one or more of the joins you are using that multiply the rows.

You have to find which of those joins is doing the multiplication and use distinct or group by on that data BEFORE you join. Otherwise your arithmetic could be wrong.

"Select distinct" on the outer query is not the right way out of this issue.

https://www.experts-exchange.com/Programming/Languages/SQL_Syntax/A_12282-Select-Distinct-is-returning-duplicates.html

https://www.experts-exchange.com/Database/Miscellaneous/A_3203-DISTINCT-vs-GROUP-BY-and-why-does-it-not-work-for-my-query.html

ASKER

OK. I'll give those a try.

In the meantime, can you also send me a link please for the "nesting" capability that you mentioned earlier? That way, I can "kill two birds with one stone".

Thanks!

TBSupport

In the meantime, can you also send me a link please for the "nesting" capability that you mentioned earlier? That way, I can "kill two birds with one stone".

Thanks!

TBSupport

ASKER CERTIFIED SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

Hi:

Below is what I just tried. With this, I get the error "Subquery returned more than 1 value. This is not permitted when the subquery follows..."

Any ideas?

TBSupport

Below is what I just tried. With this, I get the error "Subquery returned more than 1 value. This is not permitted when the subquery follows..."

Any ideas?

TBSupport

SELECT ASSETID, COSTBASIS, DEPEXP4YR,

(SELECT SUM(DEPEXP4YR)

FROM TWO.dbo.FA00902 b

WHERE test.YR <= b.FAYEAR) as YRACCUMDEP,

YRNBV FROM

(

SELECT DISTINCT TWO.dbo.FA00100.ASSETID AS ASSETID,

TWO.dbo.FA00100.SHRTNAME AS SHORTNAME,

TWO.dbo.FA00200.PLINSERVDATE AS PLACEINSERVICEDATE, TWO.dbo.FA00200.ORIGINALLIFEYEARS AS ORIGINALLIFE,

TWO.dbo.FA00200.COSTBASIS AS COSTBASIS, TWO.dbo.FA00902.FAYEAR AS YR,

SUM(TWO.dbo.FA00902.AMOUNT) OVER (PARTITION BY TWO.dbo.FA00902.ASSETINDEX,

TWO.dbo.FA00902.FAYEAR, TWO.dbo.FA00902.BOOKINDX) AS DEPEXP4YR,

SUM(TWO.dbo.FA00902.AMOUNT) OVER (PARTITION BY TWO.dbo.FA00902.ASSETINDEX,

TWO.dbo.FA00902.BOOKINDX) AS YRACCUMDEP,

TWO.dbo.FA00200.COSTBASIS - (SUM(TWO.dbo.FA00902.AMOUNT) OVER (PARTITION BY TWO.dbo.FA00902.ASSETINDEX,

TWO.dbo.FA00902.BOOKINDX)) as YRNBV,

TWO.dbo.GL00105.ACTNUMST as DEPEXPACCOUNT,

CASE WHEN TWO.dbo.FA00200.DEPRECIATIONMETHOD = '1'

THEN 'Straight-Line Original Life' WHEN TWO.dbo.FA00200.DEPRECIATIONMETHOD = '2'

THEN 'Straight-Line Remaining Life' ELSE '' END as METHOD,

TWO.dbo.FA40200.BOOKID AS BOOK, 'Fabrikam, Inc.' as COMPANY

FROM TWO.dbo.FA00902

INNER JOIN TWO.dbo.FA00100 ON TWO.dbo.FA00902.ASSETINDEX = TWO.dbo.FA00100.ASSETINDEX

INNER JOIN TWO.dbo.FA00200 ON TWO.dbo.FA00902.ASSETINDEX = TWO.dbo.FA00200.ASSETINDEX

AND TWO.dbo.FA00902.BOOKINDX = TWO.dbo.FA00200.BOOKINDX

INNER JOIN TWO.dbo.FA40200 ON TWO.dbo.FA00200.BOOKINDX = TWO.dbo.FA40200.BOOKINDX

INNER JOIN TWO.dbo.GL00105 ON TWO.dbo.FA00902.GLINTACCTINDX = TWO.dbo.GL00105.ACTINDX

WHERE TWO.dbo.FA00902.SOURCDOC LIKE 'FADEP%' AND TWO.dbo.FA00902.TRANSACCTTYPE = '2'

--REMOVE THE FOLLOWING CLAUSE PRIOR TO "GO-LIVE":

and TWO.dbo.FA40200.BOOKID = 'INTERNAL' --and TWO.dbo.FA00902.FAYEAR = '2017'

)

as test

GROUP BY ASSETID, COSTBASIS, DEPEXP4YR, YR, YRACCUMDEP, YRNBV

PLEASE use code blocks. I edited the above to do this.

--------------------------

When attempting to eradicate unwanted repetition you need to look deeper. Adding another layer on top isn't going to solve the initial problem.

In the following there are 3 source records each with a value of 100. So the grand total is 300. Only the last of these 4 queries gets the correct figures, which it does by solving the repetition before aggregating):

--------------------------

When attempting to eradicate unwanted repetition you need to look deeper. Adding another layer on top isn't going to solve the initial problem.

In the following there are 3 source records each with a value of 100. So the grand total is 300. Only the last of these 4 queries gets the correct figures, which it does by solving the repetition before aggregating):

**MS SQL Server 2008 Schema Setup**:

CREATE TABLE SourceData

([ID] int, [AssocNum] int, [Value] int)

;

INSERT INTO SourceData

([ID], [AssocNum], [Value])

VALUES

(1, 2015, 100),

(2, 2016, 100),

(5, 2017, 100)

;

CREATE TABLE AssoociatedData

([ID] int, [Num] int)

;

INSERT INTO AssoociatedData

([ID], [Num])

VALUES

(1, 2015),

(2, 2015),

(3, 2016),

(4, 2016),

(5, 2017),

(6, 2017),

(7, 2017)

;

**Query 1**:

SELECT

AssocNum

, SUM(Value) AS sum_value

FROM SourceData AS d

INNER JOIN AssoociatedData AS a

ON d.AssocNum = a.Num

GROUP BY

AssocNum

**[Results][2]**:

| ASSOCNUM | SUM_VALUE |

|----------|-----------|

| 2015 | 200 |

| 2016 | 200 |

| 2017 | 300 |

**Query 2**:

SELECT

AssocNum

, SUM(Value) over() AS sum_value

FROM SourceData AS d

INNER JOIN AssoociatedData AS a

ON d.AssocNum = a.Num

**[Results][3]**:

| ASSOCNUM | SUM_VALUE |

|----------|-----------|

| 2015 | 700 |

| 2015 | 700 |

| 2016 | 700 |

| 2016 | 700 |

| 2017 | 700 |

| 2017 | 700 |

| 2017 | 700 |

**Query 3**:

SELECT DISTINCT

AssocNum

, SUM(Value) over() AS sum_value

FROM SourceData AS d

INNER JOIN AssoociatedData AS a

ON d.AssocNum = a.Num

**[Results][4]**:

| ASSOCNUM | SUM_VALUE |

|----------|-----------|

| 2015 | 700 |

| 2016 | 700 |

| 2017 | 700 |

**Query 4**:

SELECT

AssocNum

, SUM(Value) AS sum_value

FROM SourceData AS d

INNER JOIN (SELECT DISTINCT Num FROM AssoociatedData) AS a

ON d.AssocNum = a.Num

GROUP BY

AssocNum

**[Results][5]**:

| ASSOCNUM | SUM_VALUE |

|----------|-----------|

| 2015 | 100 |

| 2016 | 100 |

| 2017 | 100 |

[1]: http://sqlfiddle.com/#!3/19e42/5

It's solved?

ASKER

To be honest, no. I'm just going to move on and ask one of my T-SQL colleagues to help me out with this or even ask him to take over the project. This stuff is beyond my comprehension.

Goodbye.

TBSupport

Goodbye.

TBSupport

ASKER

TBSupport