Trenton Knew

Hardware Recommendations - Distributed Trunking for Dual-NIC Servers

I am looking for ideas on a set of switches to replace my HP ProCurve 26xx and 28xx switches. Right now we have about six 28xx switches and one 26xx, with one of the 28xx acting as a "warm" standby in case of failure. By "warm" standby I mean that it is powered on and attached to the backplane, but no clients are connected to it. Clients are moved onto this switch only if one of the other switches fails. So it is like a cold standby, except that it is already connected to the tail end of the backplane.

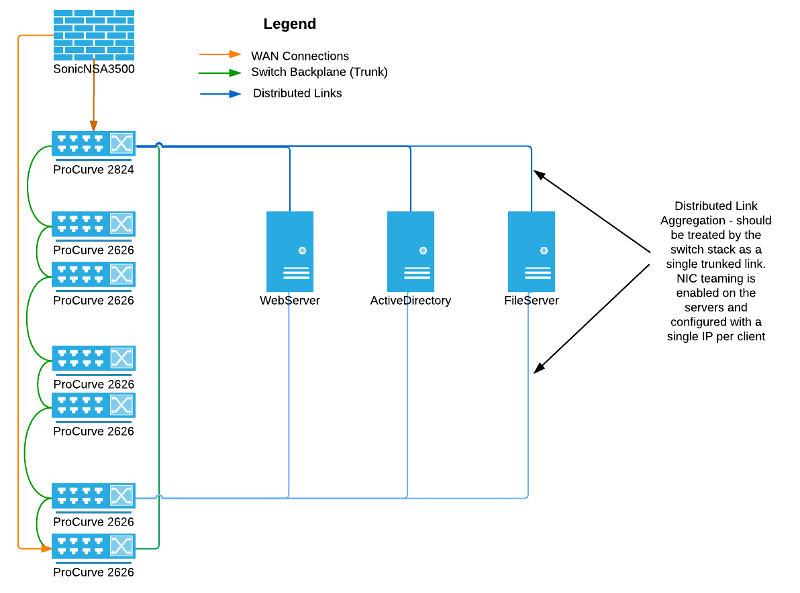

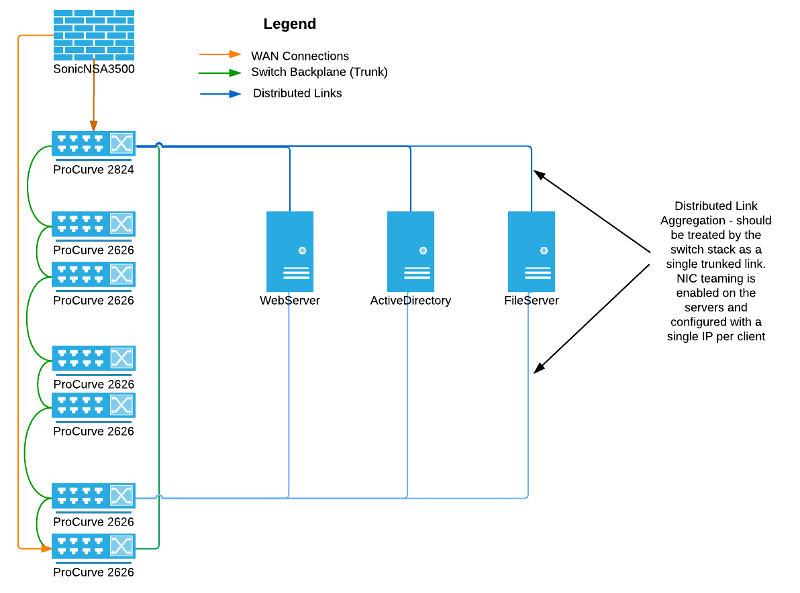

I would like recommendations for 24-port or 48-port replacements. I would still keep one as a "warm" standby, but I want to be able to do "distributed link aggregation" across switches, as shown in the diagrams above.

The servers in the diagram are connected to switches at both the top and the bottom of the switch stack, so if one switch fails, clients still have a redundant path to each server NIC. This means the switches need to support link aggregation across different devices in the stack.

What do the experts recommend for switch hardware that supports this feature and is still easy on the budget? PoE is not required for this application. Or do you recommend a different approach altogether to make the network robust and resistant to failure?

ASKER

Thanks for all your input, experts.

I checked out the Brocade switches. The small 8-port models intrigued me, but I couldn't find anything in the documentation saying they support cross-stack link aggregation, which is the main feature I need for my servers. I just want to eliminate single points of failure, but the bigger ICX switches cost considerably more.

I allocated extra points to Aaron because he was the only one who actually recommended specific hardware.

ASKER

On another note, TP-Link has this switch coming out soon. I hate that it only has a 1-year warranty, but I'm intrigued and wonder what the price point and release date will be.

TP-Link T3700G-28TQ

ASKER

Now I'm considering putting two Netgear M5300-28G switches at the top of the stack with Virtual Chassis Stacking and using smart switches for the rest of the access layer. That still means around $3200 for the two switches that carry the distributed trunk, but at least I can save money on the other access-layer devices and have a 10 Gb backplane between the devices.