SSIS Data Flow Task: Is there a way to arrow multiple processes back to one?

Hi All

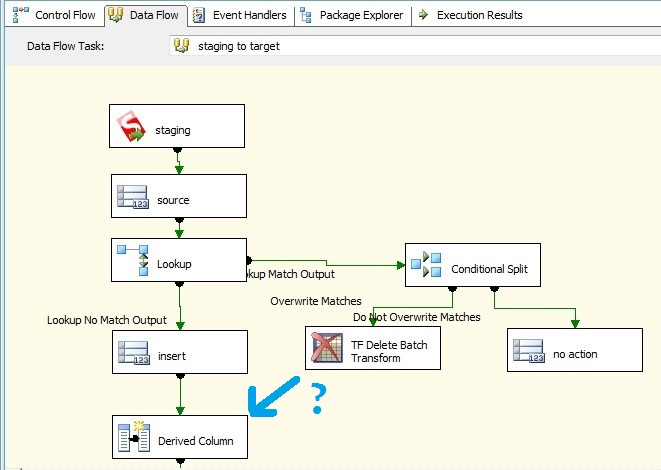

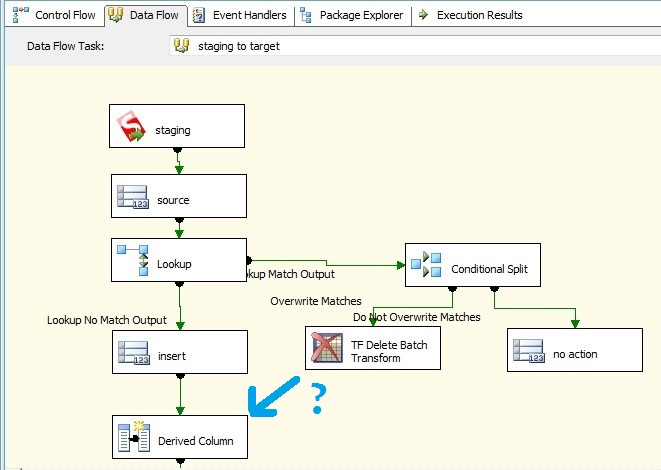

I have a Data Flow task with a Lookup on two columns (id, SystemModstamp) that has three branches:

Match Not Found: insert. This worked fine when it was the only branch.

Match Found: I've just added a Conditional Split that tests the variable overwrite_matches.

If overwrite_matches = 'Y', delete the matching rows (TF Delete Batch in the image), then insert.

If overwrite_matches = 'N', get a row count called 'no action' and do nothing else.
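To make the three branches concrete, here is a small Python model of the Lookup plus Conditional Split. It only illustrates how rows are routed and is not SSIS code. The `Row` type, its `payload` field, and the `route_rows` function are hypothetical, and the model assumes the Lookup matches on the exact pair (id, SystemModstamp) as described.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    # Hypothetical source row: the two Lookup columns plus one stand-in data column.
    id: str
    system_modstamp: str
    payload: str


def route_rows(source_rows, target_keys, overwrite_matches):
    """Model of the Lookup + Conditional Split (not an SSIS API).

    Returns three lists: rows to insert (match not found), rows to
    overwrite (match found, overwrite_matches == 'Y'), and rows that are
    only counted (match found, overwrite_matches == 'N').
    """
    insert, overwrite, no_action = [], [], []
    for row in source_rows:
        key = (row.id, row.system_modstamp)
        if key not in target_keys:
            insert.append(row)
        elif overwrite_matches == "Y":
            overwrite.append(row)
        else:
            no_action.append(row)
    return insert, overwrite, no_action


target_keys = {("001", "2015-01-01T00:00:00Z")}
source = [
    Row("001", "2015-01-01T00:00:00Z", "a"),
    Row("002", "2015-01-02T00:00:00Z", "b"),
]
ins, ovr, skip = route_rows(source, target_keys, "N")
print(len(ins), len(ovr), len(skip))  # 1 0 1
```

In this model, `len(skip)` plays the role of the 'no action' Row Count.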

Question: How can I implement the blue-green arrow in the image, i.e., set a precedence constraint (I know, they don't exist in data flow tasks) between the #2 TF Delete Batch transform and the Derived Column, so that the Derived Column does not execute until both have completed?

All that comes to mind is a Union All, Merge, or Merge Join, but I don't want the rows in the #2 TF Delete stream to affect the downstream INSERT, since those rows are already in the #1 stream.

Thanks in advance.

Jim


ASKER

@Simon - No error message, just can't connect the two.

@VV - It's the Pragmatic Works Task Factory Delete Batch Transform, essentially a delete action. I forgot to mention that.

In version 1.0 of my design I had staging tables for all of these data flows. However, the client found no scenario in which data would be pumped from the source and never pumped to the target, so I deleted them. Some of these tables had 800+ columns (not my decision), so dropping staging was a big time savings.

This issue would be an argument for re-adding them, since I could use an Execute SQL task to 'DELETE all from target where in source' and then just let the data flow do the insert.
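Here is a minimal sketch of that Execute SQL plus data-flow sequence. It uses Python's built-in `sqlite3` as a stand-in for SQL Server; the real Execute SQL task would run T-SQL. The table names `target` and `staging`, the `payload` column, and the helper functions are all hypothetical. The staging table holds only the key columns, because that is all the DELETE needs.

```python
import sqlite3


def execute_sql_delete(conn):
    """Execute SQL step: delete target rows whose (id, SystemModstamp) is in staging."""
    cur = conn.execute("""
        DELETE FROM target
        WHERE EXISTS (
            SELECT 1 FROM staging s
            WHERE s.id = target.id
              AND s.SystemModstamp = target.SystemModstamp
        )""")
    return cur.rowcount


def data_flow_insert(conn, rows):
    """Data flow step: after the delete, every source row is a 'match not found' insert."""
    conn.executemany(
        "INSERT INTO target (id, SystemModstamp, payload) VALUES (?, ?, ?)", rows)


def demo():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE target  (id TEXT, SystemModstamp TEXT, payload TEXT);
        CREATE TABLE staging (id TEXT, SystemModstamp TEXT);
        INSERT INTO target VALUES ('001', '2015-01-01', 'old'), ('003', '2015-01-03', 'keep');
    """)
    source = [("001", "2015-01-01", "new"), ("002", "2015-01-02", "b")]
    # Pump only the key columns from source to staging.
    conn.executemany("INSERT INTO staging VALUES (?, ?)", [r[:2] for r in source])
    deleted = execute_sql_delete(conn)
    data_flow_insert(conn, source)
    conn.commit()
    return deleted, sorted(conn.execute("SELECT id, payload FROM target"))


print(demo())  # (1, [('001', 'new'), ('002', 'b'), ('003', 'keep')])
```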

Thinking, thinking..


ASKER

Thinking, thinking ...

It might not be a bad idea to resurrect the source-to-staging pumps, but ONLY for the two PK columns.

Then I can easily...


Run the Execute SQL described above to delete rows when I want to. Instead of an UPDATE that compares up to 800+ columns, this would be the DELETE half of a DELETE-INSERT.

For a full load, handle the 'in target but not in source' scenario. I'm not currently handling it, because this puppy is so big that I'm only doing incremental loads. Occasionally, though, something gets messed up and requires a full load.
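For the full-load case, an anti-join against the key-only staging table can find the 'in target but not in source' rows. This is again a sketch in Python's `sqlite3` rather than T-SQL. The table names (`target`, `staging_keys`) are hypothetical, and it assumes (id, SystemModstamp) is the key, as in the Lookup.

```python
import sqlite3


def delete_orphans(conn):
    """Full load: delete target rows whose key was not pumped into staging_keys."""
    cur = conn.execute("""
        DELETE FROM target
        WHERE NOT EXISTS (
            SELECT 1 FROM staging_keys k
            WHERE k.id = target.id
              AND k.SystemModstamp = target.SystemModstamp
        )""")
    deleted = cur.rowcount
    conn.commit()
    return deleted


def demo():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE target (id TEXT, SystemModstamp TEXT, payload TEXT);
        CREATE TABLE staging_keys (id TEXT, SystemModstamp TEXT,
                                   PRIMARY KEY (id, SystemModstamp));
        INSERT INTO target VALUES ('001', '2015-01-01', 'a'), ('002', '2015-01-02', 'b');
        INSERT INTO staging_keys VALUES ('001', '2015-01-01');
    """)
    removed = delete_orphans(conn)
    return removed, [row[0] for row in conn.execute("SELECT id FROM target")]


print(demo())  # (1, ['001'])
```

SystemModstamp is part of the key here. That means this query would also remove an older version of a row that has since been modified in the source. To delete only rows that were deleted at the source, match on id alone.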

ASKER

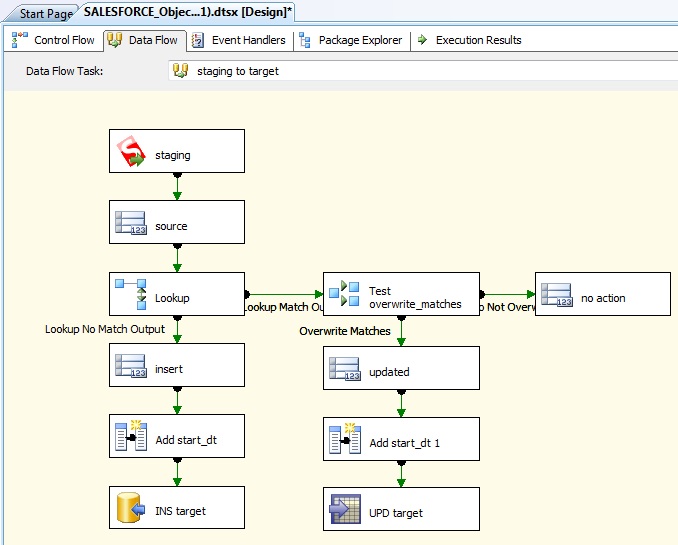

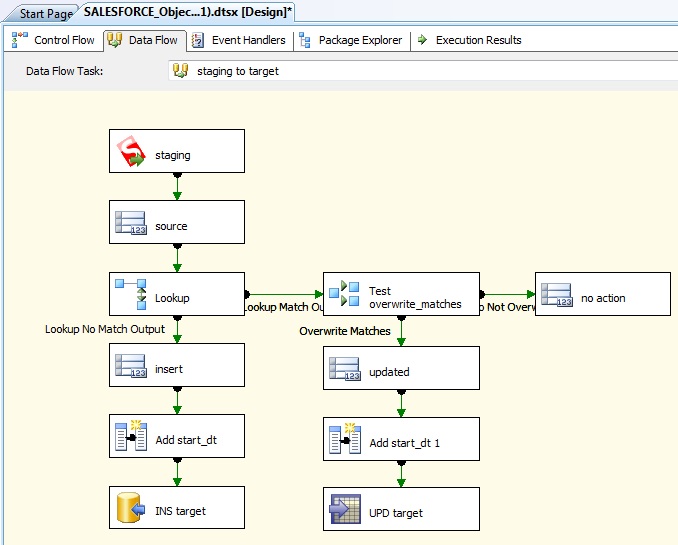

I just realized something based on JimFive's comment: I don't have to join the two paths. One path carries the matches and the other carries the non-matches, so the rows are different.

My original thinking was that the deletes had to happen before the final inserts, but since the two paths carry different rows, that's not an issue.

So, the final answer is:


No link (blue-green arrow in original question) is required.

Change the Delete in the original image to a TF Update. (It could also have been an OLE DB Command.)
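A small model shows why the link can be dropped. Once the match path updates and the no-match path inserts, the two paths touch disjoint keys, so the order in which they run does not change the final target. This is a Python illustration only. The dict-based target and the `split_paths` and `apply_paths` helpers are hypothetical and not part of SSIS or Task Factory.

```python
def split_paths(source, target, overwrite_matches):
    """Split source rows (key -> payload) the way the Lookup + Conditional Split does."""
    inserts = {k: v for k, v in source.items() if k not in target}
    matches = {k: v for k, v in source.items() if k in target}
    if overwrite_matches == "Y":
        return inserts, matches, 0        # matches go to the update path
    return inserts, {}, len(matches)      # matches are only counted ('no action')


def apply_paths(target, inserts, updates, updates_first):
    """Apply the insert and update paths to a copy of target in the given order."""
    result = dict(target)
    for step in ([updates, inserts] if updates_first else [inserts, updates]):
        result.update(step)
    return result


target = {("001", "2015-01-01"): "old", ("003", "2015-01-03"): "keep"}
source = {("001", "2015-01-01"): "new", ("002", "2015-01-02"): "b"}
inserts, updates, no_action = split_paths(source, target, "Y")

# The paths share no keys, so either execution order yields the same target.
assert inserts.keys().isdisjoint(updates.keys())
same = apply_paths(target, inserts, updates, True) == apply_paths(target, inserts, updates, False)
print(same)  # True
```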

I sure hope the performance of the TF Update is better than that of the OLE DB Command :) Besides that, your plan sounds good!

ASKER

Thanks.

I'll try to mock up a similar flow in my environment later today.

Other experts may provide you a better answer in the meantime...

Or, this MSDN social blog post might help you.