Quintin Smith


Deduplication on Server 2012

Hi Experts

I have a Windows Server 2012 virtual file server in a Hyper-V environment with deduplication enabled.

I suspect that Veeam offsite replication jobs are failing because of unexpected file growth caused by deduplication on the 2012 server, which runs once a month.

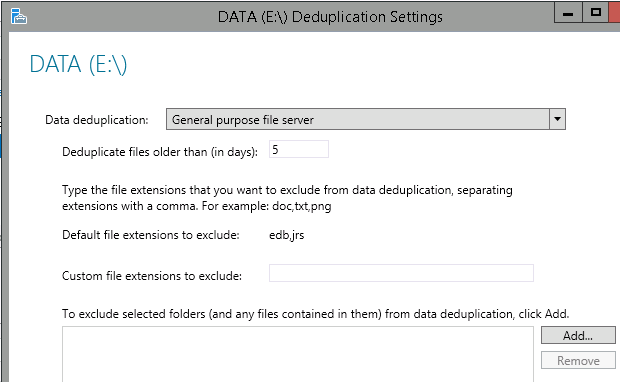

The deduplication settings are set up as follows (see also the screenshots):

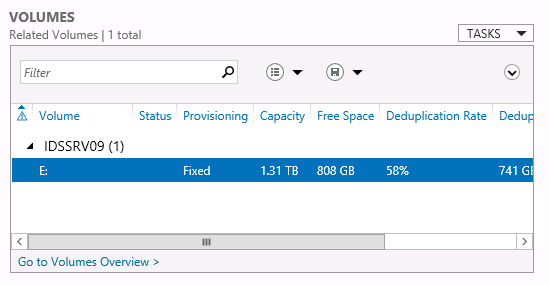

- E: drive capacity: 1.3 TB
- Free space: 808 GB
- Deduplication rate: 58%
- Data deduplication type: General purpose file server
- Deduplicate files older than (in days): 5
- Deduplication savings: 741 GB
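The quoted figures can be cross-checked with a little arithmetic, which also shows what the 58% to 20% target in question 1 would mean for disk space. This is a sketch under two assumptions that the screenshots do not confirm: "1.3 TB" means 1.3 × 1024 GB, and the deduplication rate is savings divided by the logical (pre-deduplication) size, i.e. used space plus savings. It only does arithmetic and changes no settings.

```python
# Worked arithmetic for the E: volume figures quoted above.
# Assumptions (not confirmed by the source): capacity is binary TB
# (1.3 * 1024 GB), and rate = savings / (used space + savings).

CAPACITY_GB = 1.3 * 1024   # assumed binary terabytes
FREE_GB = 808.0
SAVINGS_GB = 741.0


def dedup_rate(used_gb: float, savings_gb: float) -> float:
    """Fraction of the logical data size that deduplication saves."""
    return savings_gb / (used_gb + savings_gb)


def on_disk_at_rate(logical_gb: float, rate: float) -> float:
    """Physical space the same logical data would need at a given savings rate."""
    return logical_gb * (1.0 - rate)


used_gb = CAPACITY_GB - FREE_GB      # physical space currently in use
logical_gb = used_gb + SAVINGS_GB    # size the data would have without dedup

current = dedup_rate(used_gb, SAVINGS_GB)
target_used = on_disk_at_rate(logical_gb, 0.20)

print(f"used on disk:      {used_gb:.1f} GB")
print(f"logical size:      {logical_gb:.1f} GB")
print(f"current rate:      {current:.1%}")
print(f"on disk at 20%:    {target_used:.1f} GB")
print(f"free at 20%:       {CAPACITY_GB - target_used:.1f} GB")
print(f"extra data on disk: {target_used - used_gb:.1f} GB")
```

Under these assumptions the numbers are consistent (about 58.6% computed against the 58% reported), and a 20% rate would put roughly 1,011 GB on disk, about 488 GB more than now, leaving roughly 320 GB free.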

My questions are:

1. What is the best method to reduce deduplication from, say, 58% to 20%?

2. Given the deduplication settings listed above and in the screenshots, would it be worth reducing dedup, or would you recommend disabling deduplication altogether?

3. Would data, or access to it, be affected at all if the dedup settings are changed?

4. Would Veeam backup or replica jobs have to be re-created, or re-run/rebuilt from scratch, if the dedup settings are changed?

5. Would simply reducing "Deduplicate files older than (in days)" from 5 to, say, 2 days be the only setting that needs to change?
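For question 5, here is a minimal sketch of how a minimum-file-age policy selects files, assuming the setting works as its label suggests: only files whose age, measured here from last modification, meets the threshold are candidates for optimization. The file list, ages, and the `>=` boundary are hypothetical choices for illustration, not the server's data or Windows' exact rule.

```python
from dataclasses import dataclass


@dataclass
class FileInfo:
    path: str
    days_since_modified: int


# Hypothetical sample data, not taken from the server in question.
FILES = [
    FileInfo(r"E:\Shares\Finance\ledger.xlsx", 1),
    FileInfo(r"E:\Shares\Projects\plan.docx", 3),
    FileInfo(r"E:\Shares\Archive\2014.zip", 40),
    FileInfo(r"E:\Shares\HR\policy.pdf", 7),
]


def eligible(files, min_age_days):
    """Return files old enough to be optimized under a minimum-age policy.

    The >= boundary is an assumption; the real setting may treat the
    threshold day differently.
    """
    return [f for f in files if f.days_since_modified >= min_age_days]


for threshold in (5, 2):
    names = [f.path for f in eligible(FILES, threshold)]
    print(f"min age {threshold} days -> {len(names)} eligible: {names}")
```

With this sample data, lowering the threshold from 5 to 2 days makes three files eligible instead of two, which suggests that shortening the age setting would tend to raise the deduplication rate rather than lower it.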

Thank you

I would say the files that change often are the better candidates for exclusion; by my logic, that will reduce server load.
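One way to find folders that change often, as candidates for exclusion under the reasoning above, is to total the size of recently modified files per top-level folder. This is a minimal sketch using only the Python standard library; it assumes last-modified time is a usable proxy for churn, and the function name and 7-day default window are hypothetical choices. It only reports; it does not configure any exclusion.

```python
import os
import time
from collections import defaultdict


def recently_changed_bytes(root, window_days=7):
    """Sum, per top-level folder under root, the size of files modified
    within the last window_days. Returns (folder, bytes) pairs, largest first."""
    cutoff = time.time() - window_days * 86400
    totals = defaultdict(int)
    for dirpath, _dirnames, filenames in os.walk(root):
        rel = os.path.relpath(dirpath, root)
        top = rel.split(os.sep)[0]  # "." for files directly under root
        for name in filenames:
            try:
                st = os.stat(os.path.join(dirpath, name))
            except OSError:
                continue  # file vanished or is inaccessible; skip it
            if st.st_mtime >= cutoff:
                totals[top] += st.st_size
    # Folders with the most recently changed data come first.
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

Calling it on a share root, e.g. `recently_changed_bytes(r"E:\Shares", window_days=30)` (hypothetical path), lists the folders with the most recently changed data first. It stats every file, so on a 1.3 TB volume it may take a while on a production server.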

That said, if Veeam is dedupe-aware, I would raise the issue with Veeam support, since a dedupe-aware product shouldn't have problems backing up deduplicated data.

ASKER

I will only be able to test whether excluding large folders and files affects bandwidth throughput after a month. I will also compare that with disabling dedup entirely. Thanks for the assists.

ASKER

Thank you for your comment. Veeam version 8.0 does offer deduplication support / awareness.

Excluding certain folders may be an option.

Can file/folder exclusions be set live while the server is in production?

What would be best practice: excluding folders/files that change often, or folders/files that hardly ever change?

thanks