Isaiah Melendez

asked on

Efficient Way to Query Multiple DBs

Dear Experts,

Can you please give me some advice in the direction I should take?

The scenario that I have is 150 different SQL databases that all have data in them. I would like to run reports and display the data in one database and in this process we are going to need to add primary key when the data is dumped into that 1 master database. All the procedures are going to be done on server side can you please suggest which direction to take ex. Stored procedures, Views or something else. The environment that we are running is SQL 2014 Standard. All the tables are the same.

Can you please give me some advice in the direction I should take?

The scenario that I have is 150 different SQL databases that all have data in them. I would like to run reports and display the data in one database and in this process we are going to need to add primary key when the data is dumped into that 1 master database. All the procedures are going to be done on server side can you please suggest which direction to take ex. Stored procedures, Views or something else. The environment that we are running is SQL 2014 Standard. All the tables are the same.

Hi,

I would suggest your should prepare SSIS package for the same if you need to transfer data only.

SSIS provides you good and structured solution to move data from one database to other.

Its simple and easy to manage is well.

I would suggest your should prepare SSIS package for the same if you need to transfer data only.

SSIS provides you good and structured solution to move data from one database to other.

Its simple and easy to manage is well.

ASKER

So if I am understanding correctly, you guys are suggesting to us SSIS to import the data from all the separate databases and make one bulk database? Is that the most efficient way?

Jim: to answer your question, I would like to do that, but I have read that it is a daunting and complex process. Would you agree? Do you know of any tutorials that show how to do what you are talking about?

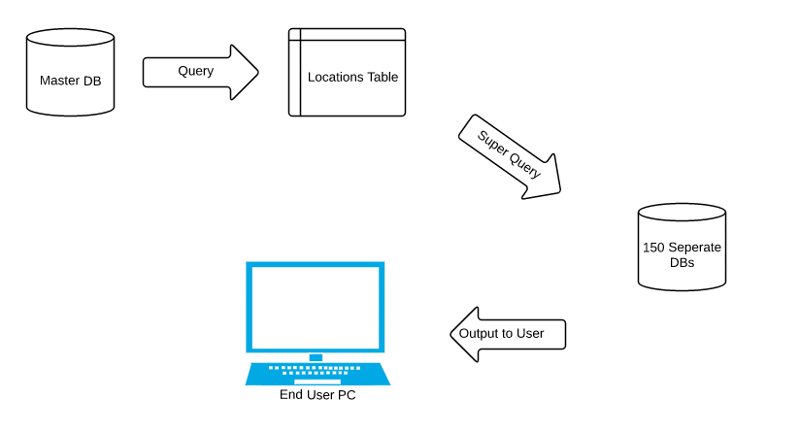

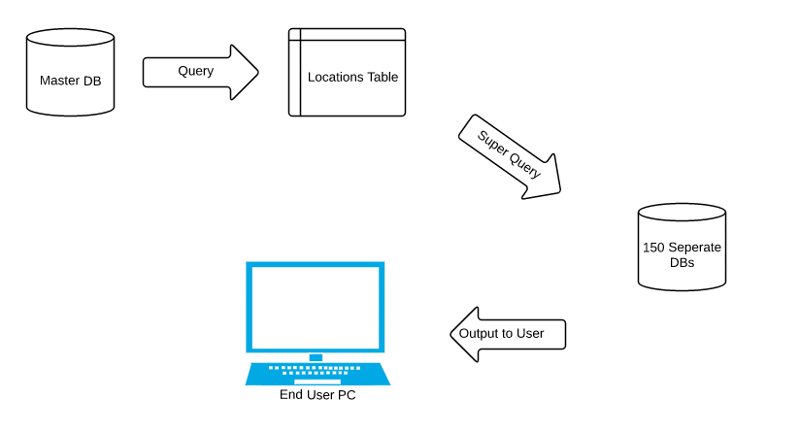

USE CASE: We know that we have 150 site locations, but to simplify, I will use 2 site locations called location A and location B.

I will create a master DB with a locations table only that will use the PK in that table to link a FK on the Location A and B DBs to display queried data to the end user.

I have enclosed a picture of my initial thought process, but, would not mind any additional suggestions.

Jim: to answer your question, I would like to do that, but I have read that it is a daunting and complex process. Would you agree? Do you know of any tutorials that show how to do what you are talking about?

USE CASE: We know that we have 150 site locations, but to simplify, I will use 2 site locations called location A and location B.

I will create a master DB with a locations table only that will use the PK in that table to link a FK on the Location A and B DBs to display queried data to the end user.

I have enclosed a picture of my initial thought process, but, would not mind any additional suggestions.

ASKER

To clarify, in the image, the End User queries master DB and the flow continues as normal to output to end user. Sorry for the honest oversight, gents.

>So if I am understanding correctly, you guys are suggesting to us SSIS to import the data from all the separate databases and make one bulk database?

>Is that the most efficient way?

Yes for now, as there's not a world of details in this question. Other options would be other ETL tools, PowerShell, an all-T:SQL Stored Procedure solution with a big cursor / FOR loop.

>I would like to do that, but I have read that it is a daunting and complex process

All ETL applications have a steep learning curve, and SSIS is no different, although I've heard that Informatica is easier to use but there is a > $100k price tag with it.

>Is that the most efficient way?

Yes for now, as there's not a world of details in this question. Other options would be other ETL tools, PowerShell, an all-T:SQL Stored Procedure solution with a big cursor / FOR loop.

>I would like to do that, but I have read that it is a daunting and complex process

All ETL applications have a steep learning curve, and SSIS is no different, although I've heard that Informatica is easier to use but there is a > $100k price tag with it.

ASKER CERTIFIED SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

Whilst this may not be greeted with enthusiasm, I'd question the wisdom of having 150 db's with the same table structure in the first instance, and then ask why you aren't considering a re-design.

Paul - I've been at gigs where they ran that way. Enterprise front-end app, 150 clients, each with a database whose schema was exactly the same but the database name was the client name. Login to the app included the client name, where after successful login it pointed to the appropriate db.

Ok. Is your intent to just create the same schema but with a PK 'database' column, and pump data from 150 locations into one? That can be handled in an SSIS package with a ForEach loop that loops through all 150 datasets, using the same SQL as a source but with a variable to handle what gets inserted into the database column.