sglee

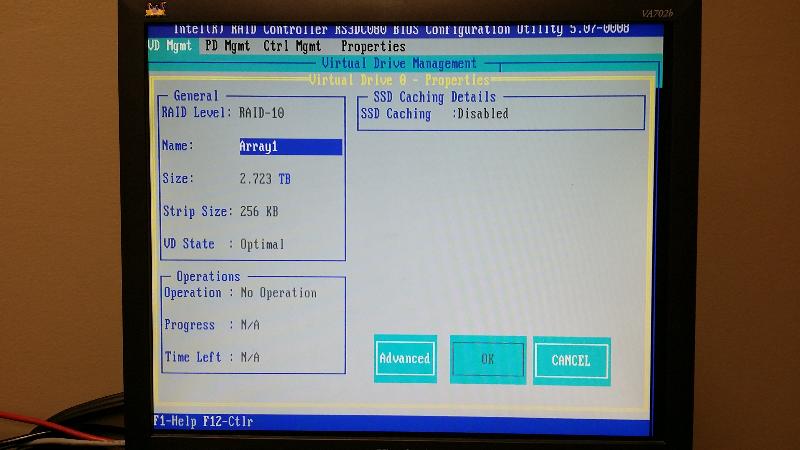

RAID 10 Configuration

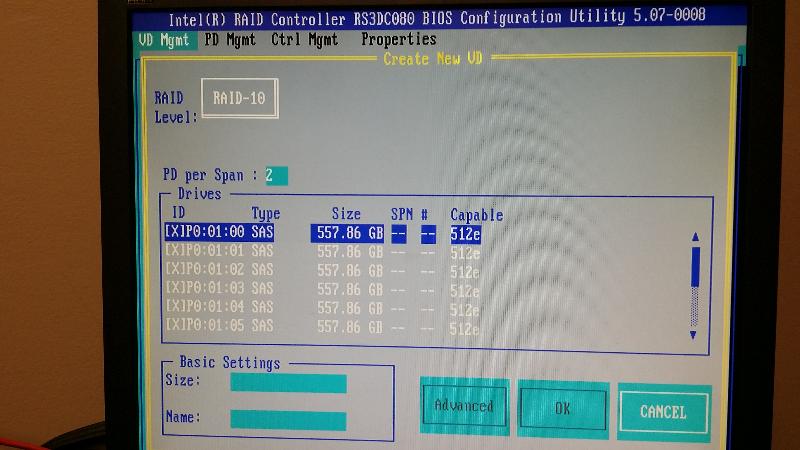

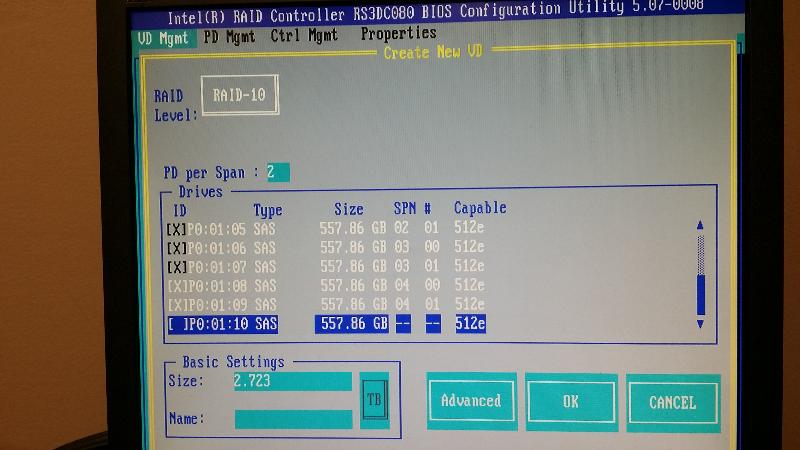

I had 10 brand-new 600GB SAS hard drives and was setting them up in RAID 10 on an Intel RS3DC080 RAID controller from its BIOS configuration utility.

After selecting RAID 10 and Span: 2 in the Virtual Drive Configuration screen, I went on to select the hard drives to include in the RAID 10.

Each time I pressed the space bar on the first 8 hard drives (0 through 7), it left a "BLACK" "X" in the check box.

When I selected drives 8 and 9 (the 9th and 10th physical drives), the "X" mark was "WHITE" instead of "BLACK". The array still showed the correct total capacity (600GB x 5), but I found it strange.
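The "600GB x 5" figure is what a RAID 10 built from 2-drive mirrors should give. Every block is stored on both members of a mirror, so only half of the raw space is usable. Here is a minimal Python sketch of that arithmetic. It assumes identical drives and 2 drives per span, and the function name is illustrative only.

```python
def raid10_usable_capacity(drive_count: int, drive_size_gb: float) -> float:
    # RAID 10 stores each block on both members of a 2-drive mirror,
    # so usable space is (drive_count / 2) * drive_size.
    # The global hot spare is not part of the array and is not counted.
    mirror_pairs = drive_count // 2
    return mirror_pairs * drive_size_gb


# 10 x 600GB drives -> 5 mirror pairs -> 3000GB usable ("600GB x 5")
print(raid10_usable_capacity(10, 600))
```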

Upon further examination (although I don't recall the exact column title on this configuration screen), each hard drive was labeled "0" through "9". Under what I think was the span column, the drives alternated between 1 and 2 (or 0 and 1). So hard drive "0" had 1 in the span column and hard drive "1" had 2. Hard drive "2" had 1 and hard drive "3" had 2.

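| HD# | Span | Check mark |
|-----|------|------------|
| 0 | 1 | black X |
| 1 | 2 | black X |
| 2 | 1 | black X |
| 3 | 2 | black X |
| 4 | 1 | black X |
| 5 | 2 | black X |
| 6 | 1 | black X |
| 7 | 2 | black X |
| 8 | 2 | white X |
| 9 | 1 | white X |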

However, hard drive "8" had 2 in the span column and hard drive "9" had 1. Why would the last two drives be out of "sync"? Is that perhaps why they got a white "X" in the check box?

What does this mean, and what causes it? These SAS hard drives are brand new and were just installed in the hot-swap bays of a brand-new rack server.

I will take pictures of the RAID configuration page later today and post them.

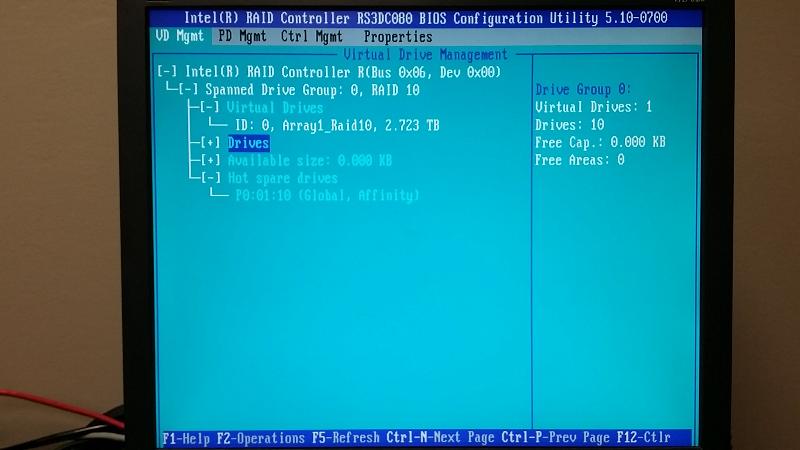

ASKER

@arnold,

"I think 8,9 are the respective hot spares." ---> I bought 11 HDs. After setting up the first 10 in RAID 10, I added the 11th as a Global Hot Spare, and it shows up as GHS in the RAID BIOS screen.

"you have two raid 10 s spanned." ---> Should I have selected "1" in the Span field instead of "2"?

By definition, a RAID 10 is a set of at least 4 drives: a span of mirrors.
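Here is a minimal Python sketch of that definition. It checks the drive count and groups the selected drives into 2-drive mirror spans. It assumes the controller fills each span with consecutive drives in selection order. That assumption is consistent with a span listing of drives 00 and 01 in Span 0, but it is not a documented controller behaviour, and the helper name is hypothetical.

```python
def group_into_spans(drives: list[int]) -> list[list[int]]:
    """Split selected drives into 2-drive mirror spans for a RAID 10.

    Hypothetical helper: assumes consecutive drives (in selection order)
    are paired into each mirror.
    """
    if len(drives) < 4 or len(drives) % 2 != 0:
        raise ValueError("RAID 10 needs at least 4 drives and an even drive count")
    return [drives[i:i + 2] for i in range(0, len(drives), 2)]


for span, members in enumerate(group_into_spans(list(range(10)))):
    print(f"Span {span}: drives {members}")
```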

Look in the config to see which drive mirrors which. Each mirror pair has to be initialized; they might be in the process of rebuilding...

I'm unfamiliar with this controller.

Well, RAID 10 is defined as a stripe of mirrors; spanning is something else.
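To make "stripe of mirrors" concrete: data is striped across the mirror pairs, and each pair keeps two identical copies of its share. Below is a simplified Python model that maps a logical byte offset to a span and an offset inside that span. It assumes plain round-robin striping and ignores any metadata or layout details a real controller adds. The function is hypothetical, not the controller's actual algorithm.

```python
KIB = 1024


def locate_block(offset: int, stripe_size: int, span_count: int) -> tuple[int, int]:
    """Map a logical byte offset to (span, byte offset within that span).

    Simplified model: stripe units of `stripe_size` bytes are laid out
    round-robin across the spans; both drives of a span hold the same data.
    """
    unit_index = offset // stripe_size   # which stripe unit overall
    span = unit_index % span_count       # round-robin across mirror pairs
    row = unit_index // span_count       # full stripes that come before it
    return span, row * stripe_size + offset % stripe_size


# 5 spans with a 256KiB stripe: byte 1MiB falls in the 5th stripe unit (span 4)
print(locate_block(1024 * KIB, 256 * KIB, 5))
```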

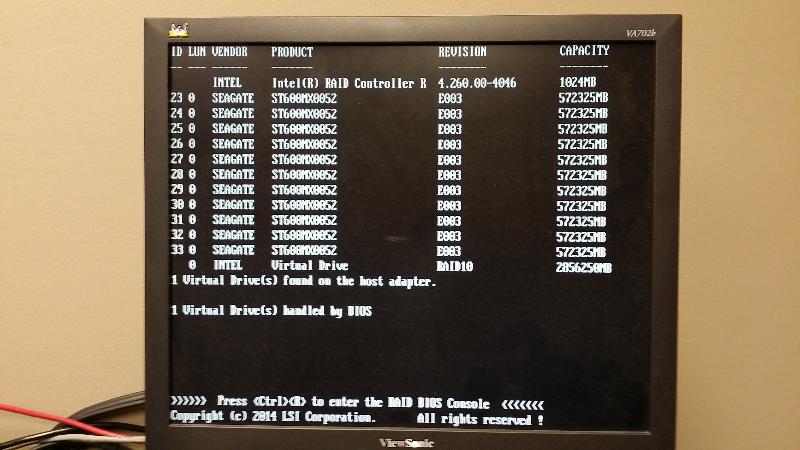

Silly question, but do you have an expander? The card only has 8 ports, so unless you have an expander you won't be able to physically talk to more than 8 drives.

Just because you have a hot-swap bay doesn't mean you have an expander. You need to check the backplane specs. The BIOS will also tell you whether you have an expander by listing the expander, and the disks attached to it, by make/model.

ASKER

@dlethe

Good to hear from you!

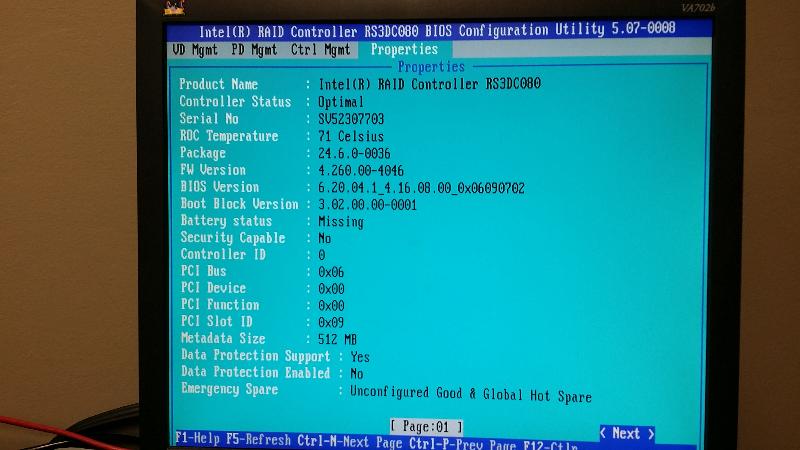

Yes, the controller supports 8 drives, but the vendor was supposed to add an expander (I paid extra for it) to connect up to 18 drives.

Before the purchase, I asked the vendor how this RAID controller would handle 16 hot-swap bays when it has only 8 ports. His answer was that the card connects to the backplane and the drives connect to the backplane.

IIRC, the mirrored sets (that is, the disk groups) need to be created first. It's been a while since we've set up RAID 10, so the grey matter is a bit rusty. We use Intel RAID exclusively in our solutions.

ASKER

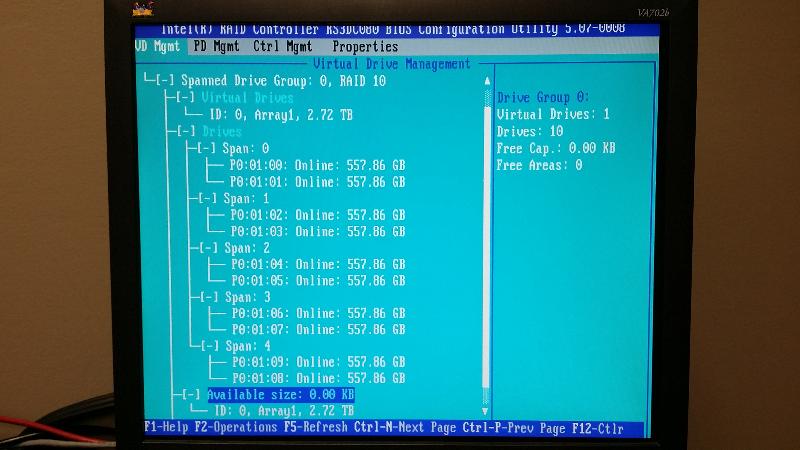

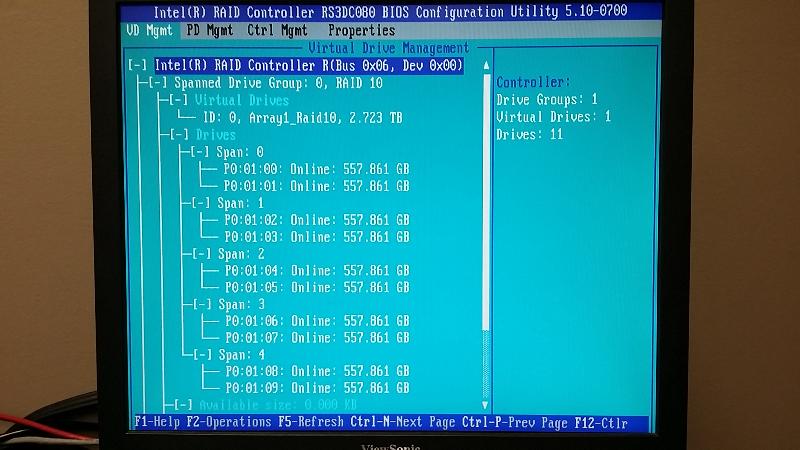

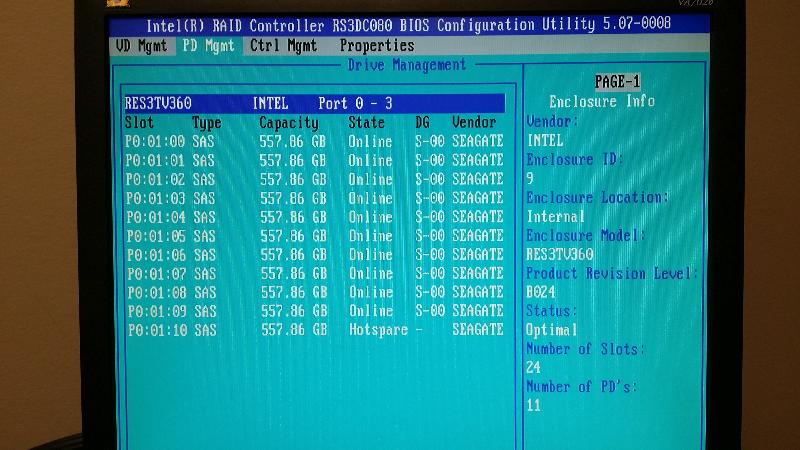

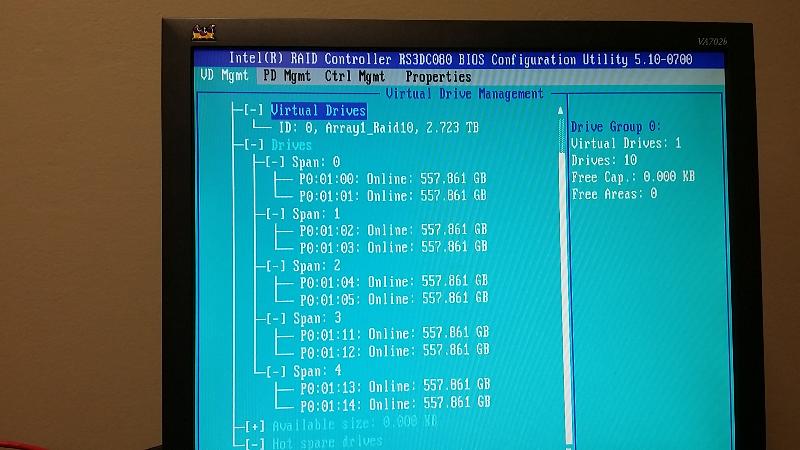

As you can see, drive numbers 09 and 08 are out of order, and these were the two drives with a white [X] when I selected them in the RAID 10 setup screen. The drives in Span 0 through 3 are in increasing order from 0 to 7, and they showed a black [X] when I selected them.

Span: 0

PI:01:00: Online: 557.86GB

PI:01:01: Online: 557.86GB

Span: 4

PI:01:09: Online: 557.86GB ** Out of order **

PI:01:08: Online: 557.86GB

Please post screenshots of the PDs. (Not being in sequential order is a red herring; the controller doesn't care.)

But I don't see a problem here. The usable capacity shows 2.72TB, which is correct for 5 x 557.86GB: the controller reports binary units, so 2,789.3GB / 1024 ≈ 2.72TB.
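The apparent mismatch between "600GB" on the label and "557.86GB" in the BIOS comes from units. Drive vendors use decimal gigabytes, while the RAID BIOS reports binary ones. Here is a short Python check of both numbers. The small remaining gap (about 558.79 vs 557.86) is presumably space the controller reserves for its own metadata; that is an assumption, not something confirmed in this thread.

```python
GB = 1000 ** 3   # decimal gigabyte, as used on drive labels
GIB = 1024 ** 3  # binary gibibyte, which the RAID BIOS shows as "GB"

label_size = 600 * GB
print(f"600GB drive = {label_size / GIB:.2f} GiB")

per_drive_gib = 557.86          # per-PD size shown in the RAID BIOS
usable_gib = 5 * per_drive_gib  # 10 drives in RAID 10 -> 5 mirror pairs
print(f"usable = {usable_gib:.2f} GiB = {usable_gib / 1024:.2f} TiB")
```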

This does, however, look suspiciously like all the disks are going through the same port, which will KILL performance.

ASKER

Here are more pictures, but I could not find any screen related to the expander in the RAID BIOS Configuration Utility. I am going to swap hard drives 8 and 9 and go through the RAID 10 setup process from scratch.

I don't have any VMs loaded on this server yet; I've only installed ESXi 6, which I can redo in 30 minutes.

I just want to make sure that all 10 drives show a black [X] as I select them in the RAID 10 setup screen. I will post a picture when I create the new VD.

FYI, below is what the vendor shipped. I purchased the hard drives from a different vendor.

INTEL R2208WTTYS 2U RM SOC R3 2XPROC DDR4 G-LAN SATA SY2179 Qty: 1

2U SERVER BF3201 Qty: 1

LSI CBL-SFF8643-SATASB-06M 0.6M SFF8643-X4 SATA LSI00410 CB0652 Qty: 1

INTEL AXXCBL730HDHD CABLE KIT 2X730MM CABLE STRAIGHT SFF8643 CB0736 Qty: 1

INTEL RES3TV360 RAID EXPANDER SAS SATA 12GB/S 36 PORT DC2163 Qty: 1

INTEL 2U HSW DRIVE CAGE 8X2.5" UPGRADE KIT A2U8X25S3HSDK CS2161 Qty: 1

INTEL AXX1100PCRPS 1100W AC POWER SUPPLY REDUNDANT PLATINUM PS2041 Qty: 1

6FT 14AWG C13/5-15P 3CONDUCTOR POWERCORD BLACK 10W1-14-01206 CB0753 Qty: 1

INTEL AXXELVRAIL SLIDE RAIL KIT FOR 438MM WIDE CHASSIS RK0018 Qty: 1

INTEL XEON E5-2630V3 2011-3 2.40GHZ 8/16 20M BOX CP9101 Qty: 2

CRUCIAL 16GB DDR4 2133 ECC REG CT16G4RFD4213 DUAL RANK 1.2V RM1623 Qty: 8

INTEGRATED SATA RAID 0, 1, 0+1, 5 MC9028 Qty: 1

INTEL RS3DC080 RAID CONTROLLER 12GB SAS/PCIE3.0X8/8PORT/1GB DC2168 Qty: 1

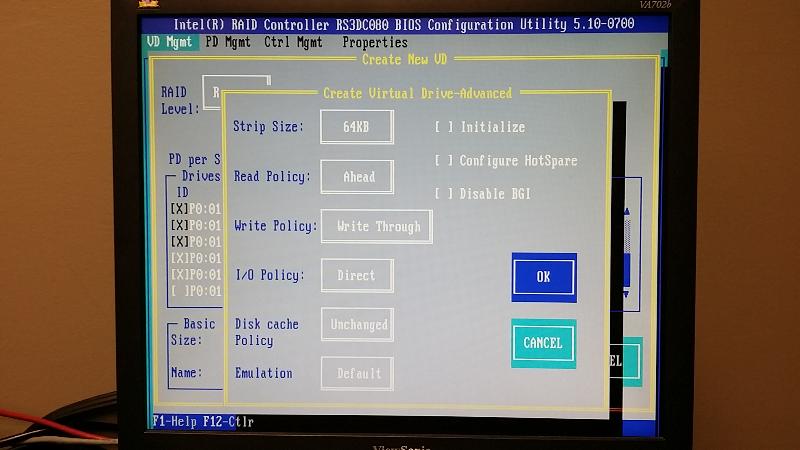

256K stripe size? No, no, no. Create a single 2-drive RAID 1 with a much smaller stripe size for your OS, swap, scratch table space and such. The 256KB stripe means you're going to have to write 256KB at a time, but the filesystem is going to be doing 4KB at a time.

Then use the other disks for a data pool, and set whatever filesystem you use to match the stripe size. 256KB is absolutely horrible unless this is a video streaming system. Remember, assuming you are using Windows, SQL Server uses 64KB I/O, so any stripe size other than 64KB is wasteful.
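One way to reason about matching I/O size to stripe size is to count how many stripe units a single request overlaps. A request that straddles a stripe-unit boundary is split across spans. Here is a minimal Python sketch. The helper name is illustrative, and it models geometry only, not the controller's caching or write behaviour.

```python
KIB = 1024


def stripe_units_touched(offset: int, length: int, stripe_size: int) -> int:
    """Count how many stripe units one I/O request of `length` bytes overlaps."""
    if length <= 0:
        raise ValueError("length must be positive")
    first_unit = offset // stripe_size
    last_unit = (offset + length - 1) // stripe_size
    return last_unit - first_unit + 1


print(stripe_units_touched(0, 4 * KIB, 256 * KIB))          # 4KiB I/O inside one unit
print(stripe_units_touched(224 * KIB, 64 * KIB, 256 * KIB))  # 64KiB I/O straddling a boundary
print(stripe_units_touched(0, 64 * KIB, 64 * KIB))           # aligned 64KiB I/O on a 64KiB stripe
```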

ASKER

You should give the boot LUN (2-disk RAID 1) the smallest possible stripe, and the 2nd LUN 64KB if it is to be used for a database, OLTP, Exchange, or just about anything else. Make sure you build the filesystems with the SAME I/O size as well.
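Matching the filesystem to the stripe also depends on the partition starting on a stripe-unit boundary. Otherwise every cluster-sized I/O can straddle two stripe units. Here is a small Python check, assuming 512-byte sectors. It compares the legacy 63-sector partition offset with the 1MiB (2048-sector) offset commonly used by modern partitioning tools.

```python
SECTOR = 512
KIB = 1024


def is_aligned(partition_offset: int, stripe_size: int) -> bool:
    # Aligned when the partition starts exactly on a stripe-unit boundary.
    return partition_offset % stripe_size == 0


legacy_offset = 63 * SECTOR    # old DOS-style start at sector 63 (32,256 bytes)
modern_offset = 2048 * SECTOR  # 1MiB start

for stripe in (64 * KIB, 256 * KIB):
    print(f"{stripe // KIB}KiB stripe:",
          "sector-63 start aligned" if is_aligned(legacy_offset, stripe) else "sector-63 start MISALIGNED",
          "| 1MiB start aligned" if is_aligned(modern_offset, stripe) else "| 1MiB start MISALIGNED")
```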

You want to optimize for IOPs, not throughput.

ASKER

"create a single 2-drive RAID1 with a much smaller stripe size for your O/S & SWAP " --> I am setting up a VMware server on this box. I will be creating multiple virtual machines running various Windows OSes. None of the VMs will be for streaming video; they will all be application servers (IIS, TS, etc., including SBS 2011).

Should I still change the stripe size to 64KB?

VMware is going to want a larger stripe size, which is a function of the size of the LUN itself. I don't know offhand what the correct setting would be for this usable capacity; best to look at the documentation. But I expect 256KB is likely correct. So just make it one big LUN to make life easy.

If you ever want a screaming-fast LUN, you could always install a small SSD and use the VMDIRECT method to pin that drive to a specific machine. Then you could use host-based software RAID 1 if you wanted to protect it. Software RAID 1 has effectively zero overhead, and you'll get nearly 2x the read performance of a non-RAID configuration with just one disk.
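The "nearly 2x read performance" comes from the fact that a mirror can serve any read from either copy, while every write must go to both. Below is a toy Python model of round-robin read balancing across the two members. Real software RAID implementations use their own heuristics rather than strict round-robin, and the member names here are placeholders.

```python
from itertools import cycle


def balance_reads(read_count: int, members: tuple[str, str] = ("disk0", "disk1")) -> dict[str, int]:
    """Send reads to the members of a RAID 1 mirror in round-robin order.

    Both members hold identical data, so alternating reads spreads the load
    across two spindles. Writes (not modelled) still go to both members.
    """
    counts = {m: 0 for m in members}
    target = cycle(members)
    for _ in range(read_count):
        counts[next(target)] += 1
    return counts


print(balance_reads(1000))
```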

ASKER

I don't understand "So just make it one big LUN to make life easy."

I will leave the stripe size at 256KB, which was the default value.

I mean just build it as a single large logical unit rather than a 2-disk RAID 1 plus an 8-disk RAID 10 with different sizes. That will be easier to maintain and provision.

ASKER

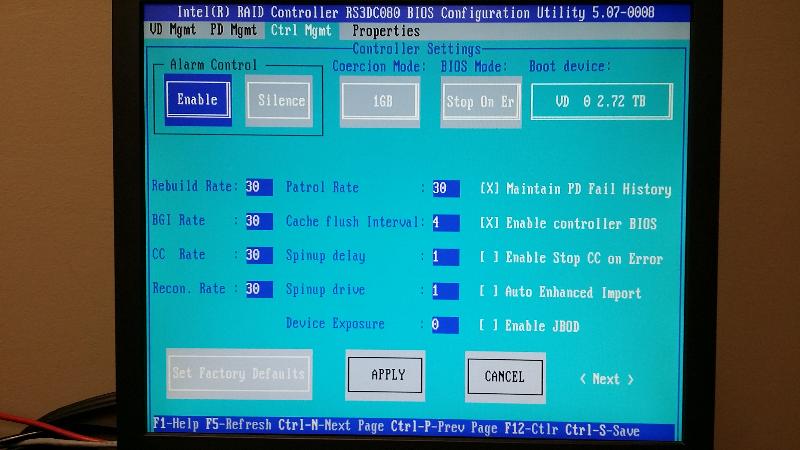

Before asking another question about the "just build it as a single large logical unit rather than a 2-disk RAID1 + 8 disk RAID10" comment, let me apply updates to this RAID card and the motherboard BIOS from the Intel website. The current RAID setup screen looks outdated to me (it is certainly different from the LSI MegaRAID utility, where a "Configuration Wizard" is available). Once I am done applying updates, I will go through the RAID 10 setup from scratch.

Let me get back to you in a couple of hours.

ASKER

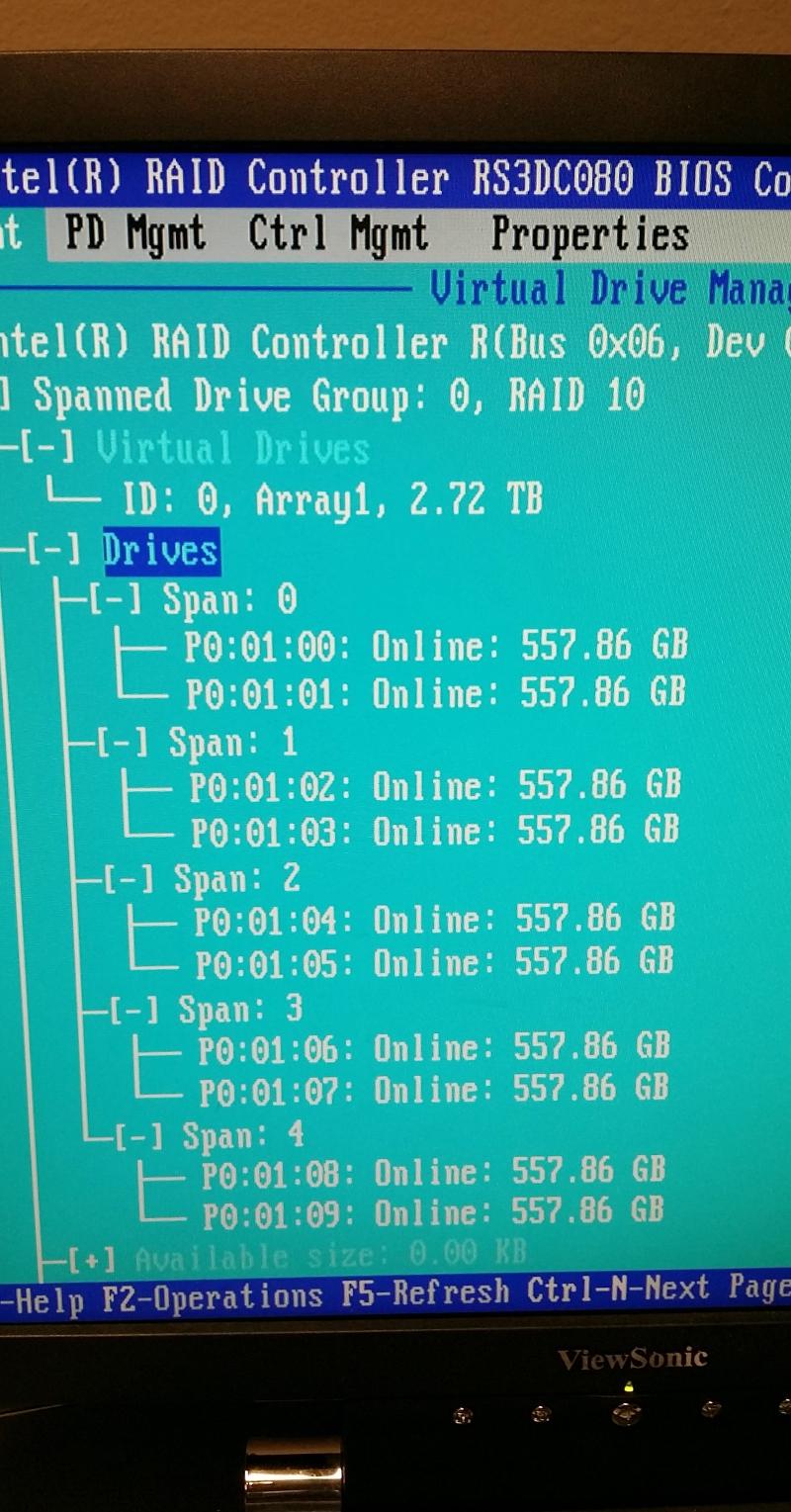

Before applying updates, I decided to create a new VD from scratch using the current version of the Configuration Utility. I cleared the existing VD and chose RAID 10. As seen in the screenshot, it automatically defaulted to '2' in the "PD for SPAN" field. When I selected all 10 hard drives (the 11th is a global hot spare), drives 8 and 9 still showed a white X instead of a black X, although the drives are now numbered in order after swapping drives 8 and 9.

Should I be concerned about the white X versus the black X?

White vs black X? Ignore it. There is no difference in any of the configuration settings.

ASKER

OK. I will ignore the black vs. white X from now on. I just wanted to make sure it was OK.

Let me proceed with the updates.

ASKER

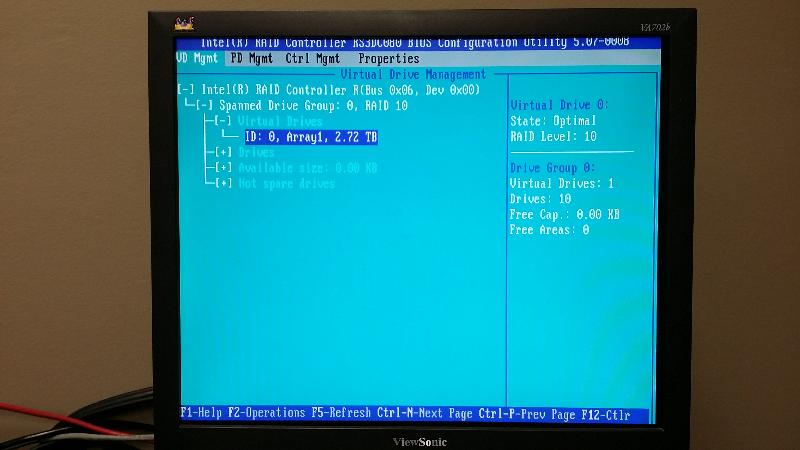

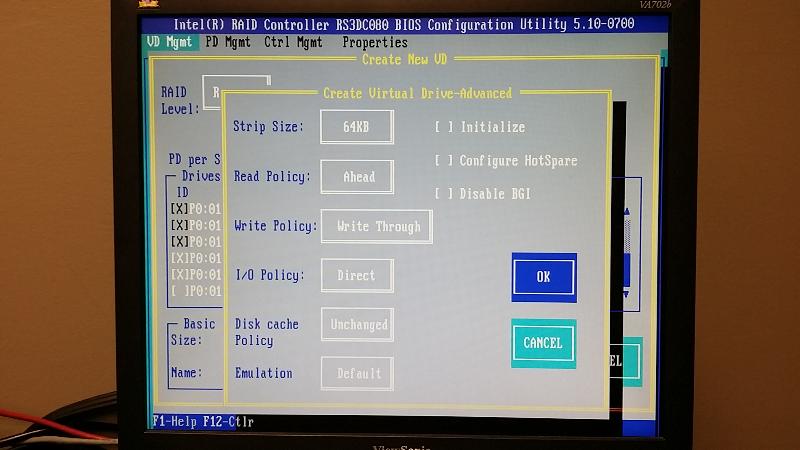

I upgraded the RAID controller BIOS and set up RAID 10 again from scratch. The white [X] marks were still there on drives 8 and 9, but I will ignore them. At least the drives show up in order after the swap.

I changed the stripe size to 64K. (I don't understand why Intel recommends a 256K stripe size in this video, though: http://www.intel.com/support/motherboards/server/sb/CS-030340.htm)

"I mean just build it as a single large logical unit rather than a 2-disk RAID1 + 8 disk RAID10, with different sizes. That will be easier to maintain and provision." --> Based on the screenshots I posted, where do I go to do what you were referring to?

Don't worry about it. You are on VMware, and it's best not to break the disks up into 2 separate VMware drives that you provision.

ASKER

Thanks for your help on this issue.

ASKER

@Philip,

I posted a picture of my rack server above.

I installed 11 drives starting from the left, in bays 1 through 11. The first 10 are for RAID 10 and the 11th is a global hot spare.

As for the RAID controller BIOS interface, I am not familiar with it. I am used to the LSI MegaRAID interface that I see when I click the link you posted. This interface came with the server, and I do not know how to change it or install the LSI MegaRAID interface.

The server looks like an R2216WT or an R2216GZ4 or their equivalent?

I'm a bit confused by the link reference. The RAID BIOS images above don't look anything like the Intel RAID BIOS pictures on either link I posted.

ASKER

Please see the order description posted above for part numbers.

I know. They look totally different. I am puzzled too.

Regarding dlethe's and the most recent comments: the card has two SAS/SATA ports.

Does the backplane you use have the option to be split, so that you connect each port to one half? That is what dlethe's reference to an I/O bottleneck means. With a single port, all I/O goes over a single channel; with the split, each set of 8 drives goes through its own channel.

Okay, I missed the build. EQUUS? Give them a call for support.

There is something not right there.

ASKER

I don't get it. What is not right?

The links to Intel's RAID BIOS setup steps do _not_ match the images shown above. We've been doing Intel Server Systems and RAID for fifteen to twenty years now. The Intel variant of LSI's RAID BIOS setup has not changed in at least 10 to 15 years of that. That's what I see on Intel's site.

For that matter, Dell's implementation of LSI's RAID BIOS hasn't changed either. The images posted here are very similar to Dell's variant.

ASKER

I agree.

I was told that Intel uses LSI cards, so I was expecting the LSI MegaRAID interface. I found it very strange. I searched YouTube and found that what I have is, as you mentioned, the Dell RAID interface.

Even Intel's website does not show this.

Go figure, the linked site is out of date. :P

http://www.intel.com/support/motherboards/server/sb/CS-022358.htm

That User Guide is up to date and shows the correct images as above.

My apologies.

Call your system builder (or Intel, if you are the system builder) and get clarification on the difference when selecting the drives. In my mind, the behaviour should be consistent across the board.

Also, we'd put 6 drives in the left bay (of 8): three in the leftmost slots, skip one, then the next three (this distributes them across the two cables for that bay). In the right bay we'd put 2 drives in the leftmost slots, skip two, and then the final three in the next set, leaving one blank at the end.

This would distribute the IO across the four cables plugged into the hot swap backplane.
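A quick way to compare bay layouts is to count how many populated bays land on each backplane cable. Here is a minimal Python sketch. It assumes bays numbered 1 to 16 from left to right and four cables, each serving 4 consecutive bays; that mapping is an assumption and should be checked against the backplane documentation. It compares filling bays 1 through 11, the 3/skip/3 + 2/skip/3 layout described above, and a 6 + 5 split across bays 1-6 and 12-16.

```python
from collections import Counter

CABLES = 4
BAYS_PER_CABLE = 4  # assumption: each cable serves 4 consecutive bays


def drives_per_cable(bays: list[int]) -> dict[int, int]:
    """Count populated bays per backplane cable (bays numbered from 1)."""
    counts = Counter((bay - 1) // BAYS_PER_CABLE for bay in bays)
    return {cable: counts.get(cable, 0) for cable in range(CABLES)}


layouts = {
    "bays 1-11 in a row": list(range(1, 12)),
    "3, skip 1, 3 | 2, skip 2, 3": [1, 2, 3, 5, 6, 7, 9, 10, 13, 14, 15],
    "bays 1-6 and 12-16": [1, 2, 3, 4, 5, 6, 12, 13, 14, 15, 16],
}
for name, bays in layouts.items():
    print(f"{name:30} -> {drives_per_cable(bays)}")
```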

ASKER

In this case, I do not think it makes a difference, as the backplane to which the drives connect is not split.

The controller has two SAS ports.

The 16-drive backplane has a pair of 8-drive blocks. Some include a break, meaning you would use both SAS ports from the Intel board and connect one to the left 8 and the other to the right 8.

I.e., the left 8 drives will be capped by the throughput of port 0 on the SAS RAID controller.

The right set of drives will likewise be capped by the throughput of port 1 on the SAS RAID controller.
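Whether one port actually becomes the bottleneck is a matter of arithmetic. Here is a back-of-envelope Python sketch under stated assumptions: 12Gb/s SAS per lane with 8b/10b encoding, and a x4 wide port per SFF-8643 cable. The per-drive rate is a hypothetical placeholder; substitute the figure from your drives' datasheet. These are estimates, not measurements.

```python
# Rough bandwidth comparison under stated assumptions (not measurements).
SAS3_LINE_RATE_GBPS = 12      # 12Gb/s SAS line rate per lane
ENCODING_EFFICIENCY = 8 / 10  # SAS 12Gb/s uses 8b/10b encoding
LANES_PER_CABLE = 4           # an SFF-8643 connector carries a x4 wide port

lane_mb_s = SAS3_LINE_RATE_GBPS * 1000 * ENCODING_EFFICIENCY / 8  # MB/s per lane
port_mb_s = lane_mb_s * LANES_PER_CABLE                            # MB/s per x4 port

HDD_SEQ_MB_S = 200  # hypothetical sustained sequential rate per HDD
drives = 10

demand_mb_s = drives * HDD_SEQ_MB_S
print(f"one x4 port: {port_mb_s:.0f} MB/s, {drives} HDDs streaming: {demand_mb_s} MB/s")
print("port is the bottleneck" if demand_mb_s > port_mb_s else "drives are the bottleneck")
```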

The issue is to determine which options you have.

If that is possible, the change would be that drives 6 through 10 might show up as

P0:02:04

P0:02:08

By the looks of it, all the drives are connected to a single SAS controller port.

ASKER

@Arnold, Philip Elder, dlethe

Let me wrap this up by asking these two questions:

(1) Given that I am building a VMware host on this server hardware, should I keep the default stripe size of 256K or change it to 64K?

(2) Based on the server spec sheet above, should I install all 11 hard drives in bays 1 through 11, or split them into two zones (6 drives in bays 1 through 6 and 5 drives in bays 12 through 16)?

1: What workloads will run? If it's all databases with high IOPS, go for the 64KB size. If it's a mixed environment where database IOPS are not high, stick with the defaults throughout the entire storage stack.

2: Our preference is what you did in your second picture, though we fill left to right in the second and third bays.

In the above example, we'd be populating each bay with 3 SAS SSDs as indicated.

ASKER

It looks to me like my RAID 10 was there in the RAID BIOS screen no matter how I arranged the drives in the hot-swap bays.

Since the experts' opinions are split on drive arrangement, and I need to get started creating virtual machines on this VMware box...

Does anyone see a problem with me going ahead and creating virtual machines now and dealing with the drive arrangement later?

Intel/LSI stores the RAID configuration on the disks themselves.

Those disks could be taken to another setup with Intel/LSI RAID installed and "Import Foreign Configuration" would be offered.

There are two paths/cables to the controller. The expander has two cables per hot-swap bay, and each cable serves four hot-swap bays. We could go so far as to split up the disks along those lines too.

Our way of doing things is offered as a suggestion.

We bench everything before selling/deploying the solution, so we find out which configuration will give us what we need for IOPS, throughput, and VM concentration.

ASKER

[IMAGE REMOVED AS IT DID NOT MATCH THE CAPTION. REMOVED BECAUSE IT INCLUDED A VEHICLE WITH PLATE NUMBER]

How is this now?

Once you start, the only time this will be revisited is during the normal upgrade cycle, when a new system replaces this one...

IMHO, since you are planning on placing a significant number of VMs on this, it is best to get it right from the get-go. It is a simple question to answer: if the backplane you have only has a single connection from the SAS RAID controller, the arrangement of the disks is of no consequence.

If it has one connection from the controller plus an interconnect bridging one half to the other, and you have two cables from the controller to the backplane, look at the specs of the backplane/controller. I.e., disconnect the bridging cable and connect the second half to the controller using the second cable.

Unfortunately, I cannot tell whose case that is, so I can't look up its specs. Is this an Intel server case as well?

Is this the expander card that you have?

http://www.intel.com/content/www/us/en/servers/raid/raid-controller-res2sv240.html

How is your controller/expander cabled to the backplane?

ASKER

Thank you. I thought I had deleted it, but it still showed up, and I no longer see a "delete" option.

The appearance on the front is fine; it is the connections on the back that matter.

As I am unfamiliar with the Intel RAID controllers, I do not know whether the BIOS shows each SAS port as unique or only lists the devices connected to either port.

Not sure how the expander comes into play in the notation.

P0:01 for port 1 0-7

P0:02 for port 2 0-7

The RAID controller feeds the expander that connects to the backplane?

ASKER

Here is the response from tech support to my question:

"This server’s RAID system is going to expander, so there are internal divide by 4 to expander. I believe the picture one will be the choice, but you can use any combination that you want. I don’t think it is really matter in this system."

I sent him two pictures. Picture 1 shows all 11 drives in bays 1 through 11.

Picture 2 shows the first 6 drives in bays 1 through 6 and the last 5 drives in bays 12 through 16.

You have 2 cables from the HBA to the backplane, right? If so, there is an easy way to verify that you are distributing the bandwidth between the two ports.

Unplug one of the internal cables. If NONE or ALL of the disks disappear, then you know only one of the two cables is being used.

Keep in mind that each cable supports 4 SAS paths.

The controller is smart enough to manage bandwidth between the two cables to the expander and on to the backplane.

ASKER

See page 63 in the Intel Server System R2000WT Integration and Service Guide.

EDIT: And, a lot more detail: Enclosure Management Cabling Guide for Intel C610 Series Chipset Server Systems with Hot-Swap Drive Enclosures. This is a direct link to the PDF.

Sglee,

So you have two SAS cables leaving the raid controller feeding the expander that connects to the backplane.

The multi-disk backplanes I've seen had a feed from the controller plus a bridging mechanism when there was no individual feed for each section: a cable from the first section interconnects to the second, which interconnects to the third.

Philip,

From your comment, whether there are four feeds or only one, all drives will be seen, i.e., the paths will converge without any issue?

@Arnold,

The drives are all there and should be seen as has already been shown by moving them about between hot-swap bays.

ASKER

I will just keep the drives as they are.

It may not have been necessary to install three at a time and skip a bay. However, having done this, at least I am not losing any performance compared to the scenario where I put all 11 drives one after another.

Thank you all!

You have two RAID 10s spanned.