mssql_v2005

asked on

Partitioning by GUID+ SQL Server 2012

Hi ALL:

I have a table which has a Uniqueidentifier Column. I would like to Partition this table using Partition function and schema in SQL Server 2012 by this Uniqueidentifier Column. I would like to partition by first 2 bytes.

I have created 16 partitions with RANGE RIGHT as below. Is my calculation analysis on Partition function and schema & below script is correct??

I have a table which has a Uniqueidentifier Column. I would like to Partition this table using Partition function and schema in SQL Server 2012 by this Uniqueidentifier Column. I would like to partition by first 2 bytes.

I have created 16 partitions with RANGE RIGHT as below. Is my calculation analysis on Partition function and schema & below script is correct??

CREATE PARTITION FUNCTION [pf_GUIDRangePartition](uniqueidentifier) AS RANGE RIGHT

FOR VALUES

(

'00000000-0000-0000-0000-000000000000',

'00000000-0000-0000-0000-100000000000',

'00000000-0000-0000-0000-200000000000',

'00000000-0000-0000-0000-300000000000',

'00000000-0000-0000-0000-400000000000',

'00000000-0000-0000-0000-500000000000',

'00000000-0000-0000-0000-600000000000',

'00000000-0000-0000-0000-700000000000',

'00000000-0000-0000-0000-800000000000',

'00000000-0000-0000-0000-900000000000',

'00000000-0000-0000-0000-A00000000000',

'00000000-0000-0000-0000-B00000000000',

'00000000-0000-0000-0000-C00000000000',

'00000000-0000-0000-0000-D00000000000',

'00000000-0000-0000-0000-E00000000000',

'00000000-0000-0000-0000-F00000000000',

'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF'

)

CREATE PARTITION SCHEME [ps_GUIDRangePartition]

AS

PARTITION [pf_GUIDRangePartition] TO

(FG_1,FG_2,FG_3,FG_4,FG_6,FG_7,FG_8,FG_9,FG_10,FG_11,FG_12,FG_13,FG_14,FG_15,FG_16,[PRIMARY])

GOASKER

I've referred below URL to create PF.

https://social.msdn.microsoft.com/Forums/sqlserver/en-US/e9960c94-4059-4d96-a680-01ac3422cb5a/how-to-partition-table-by-uniqueidentifier-column-sql-server-2005?forum=transactsql

<<Is your UNIQUEIDENTIFIER random or sequential>>

How to check do you have any sql query to check GUID order?

https://social.msdn.microsoft.com/Forums/sqlserver/en-US/e9960c94-4059-4d96-a680-01ac3422cb5a/how-to-partition-table-by-uniqueidentifier-column-sql-server-2005?forum=transactsql

<<Is your UNIQUEIDENTIFIER random or sequential>>

How to check do you have any sql query to check GUID order?

ASKER

select * from Table_Name

go

14D0D8E4-F77A-E511-88D7-00

85B2C30C-3FE4-E511-B741-00

go

14D0D8E4-F77A-E511-88D7-00

85B2C30C-3FE4-E511-B741-00

Yup. But this does not match your describtion about "first 2 bytes". Your using the GUID sort-interpretation of SQL Server. These are different things.

When you use your CreatedOn column, then

How to check do you have any sql query to check GUID order?How do you create those UIDs?

When you use your CreatedOn column, then

SELECT * FROM yourTable ORDER BY CreatedOn;and

SELECT * FROM yourTable ORDER BY primaryKeyUID;select * from Table_Name

go

14D0D8E4-F77A-E511-88D7-005056B661F4 --1st row

85B2C30C-3FE4-E511-B741-005056B661F4 --last rows

With some millions rows, this means it is random.

ASKER

I've verified based on CreatedOn column. Looks like the data is in random order.

Can you please advise me how to correct above partition function?

Can you please advise me how to correct above partition function?

ASKER

I'm herewith attaching output of below queries.

SELECT * FROM Tblname ORDER BY GUIDCol;

SELECT * FROM Tblname ORDER BY CreatedOn;

Can you please advise me how to correct above partition function?

Book1.xlsx

SELECT * FROM Tblname ORDER BY GUIDCol;

SELECT * FROM Tblname ORDER BY CreatedOn;

Can you please advise me how to correct above partition function?

Book1.xlsx

What are your candidate keys in this table?

ASKER

PFA.

PFA.txt

PFA.txt

ASKER

We have only one column as prim key in table.

Well, then you have no other choice as using that column.

But you will not gain performance from partitioning that table.

But you will not gain performance from partitioning that table.

ASKER

OK, Let me try on local or TEST server. You have advised that the above mentioned partition function is not correct. Can you share updated partition function OR let me know where is changes required.

Thanks in advance.

Thanks in advance.

SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

I got below error for above query. Can you advise how to fix below error.

Column '@Sample.GroupNo' is invalid in the select list because it is not contained in either an aggregate function or the GROUP BY clause.

Column '@Sample.GroupNo' is invalid in the select list because it is not contained in either an aggregate function or the GROUP BY clause.

ASKER

I have created below PF and while executing I got error. Can you confirm below syntax is correct?

Error: Duplicate range boundary values are not allowed in partition function boundary values list. Partition boundary values at ordinal 2 and 3 are equal.

Error: Duplicate range boundary values are not allowed in partition function boundary values list. Partition boundary values at ordinal 2 and 3 are equal.

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE LEFT FOR VALUES

(

'00000000-0000-0000-0000-000000000000', '00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-100000000000', '00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-200000000000', '00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-300000000000', '00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-400000000000', '00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-500000000000', '00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-600000000000', '00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-700000000000', '00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-800000000000', '00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-900000000000', '00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-A00000000000', '00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-B00000000000', '00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-C00000000000', '00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-D00000000000', '00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-E00000000000', '00000000-0000-0000-0000-F00000000000' ,

'00000000-0000-0000-0000-F00000000000', 'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF'

);

Best candidates for being the partition keys are dates. Partitioning by date will let you have live data in the smallest partition (the one that should be more often accessed) and cold data in historical/archive partition so you'll have a better performance.

Btw, how big is your table?

Btw, how big is your table?

ASKER

Table details:

rows reserved data index_size unused

==========================

40731880 33005256 KB 13581384 KB 19422280 KB 1592 KB

We have date column with the name of CreatedOn. As of now this column doesn't contains NULL values but in future it may contain NULL, because of this we have not marked this column as primary key.

We have another column with name of ProductID with Uniqueidentifier datatype and this is primary key and have created clustered index on this table. So we are planning to create partition on this column. We are planning to create secondary files(ndf) and distributing rows in different secondary files....

rows reserved data index_size unused

==========================

40731880 33005256 KB 13581384 KB 19422280 KB 1592 KB

We have date column with the name of CreatedOn. As of now this column doesn't contains NULL values but in future it may contain NULL, because of this we have not marked this column as primary key.

We have another column with name of ProductID with Uniqueidentifier datatype and this is primary key and have created clustered index on this table. So we are planning to create partition on this column. We are planning to create secondary files(ndf) and distributing rows in different secondary files....

The size of data might not justify a partitioning. Why do you want to partition this table?

Btw, for a 13GB data the table has 19GB of indexes. You should review your indexes for this table since I bet you've some duplicate indexes on it.

Can you post the table definition here (script table in SSMS)?

Btw, for a 13GB data the table has 19GB of indexes. You should review your indexes for this table since I bet you've some duplicate indexes on it.

Can you post the table definition here (script table in SSMS)?

ASKER

PFA file.

Table.txt

Table.txt

The table has 8 UID. What for you need 8 UIDs? Each of one occupies 16byte, so 8 by row will be 128byte. Then add the 4 nvarchar(100) fields and you'll have more than half KB by record.

Since you're using MSSQL 2012 you have datetimeoffset data type to store the time zone. The maximum size of this field is 10byte. A normal datetime is 8byte and you're adding 2 more int (2x4byte) so spending 16byte to store a datetime with the respective timezone. Plus the complexity for you to make the calculations.

Can you also script the existing indexes for this table?

Since you're using MSSQL 2012 you have datetimeoffset data type to store the time zone. The maximum size of this field is 10byte. A normal datetime is 8byte and you're adding 2 more int (2x4byte) so spending 16byte to store a datetime with the respective timezone. Plus the complexity for you to make the calculations.

Can you also script the existing indexes for this table?

ASKER

We can't change existing table structure (including UID) and this structure is recommended by MS engineer.

PFA index file.

Is it not useful if we implement table partition on UID column?

Index.txt

PFA index file.

Is it not useful if we implement table partition on UID column?

Index.txt

ASKER CERTIFIED SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

So, can you tell me/or advise which indexes we have to keep and which indexes we have to remove?

You'll need to confirm the need of the first index but I'll recommend the following:

DROP INDEX [_etel_crm_ndx_etel_productresourceBase_etel_serialnumber]

CREATE NONCLUSTERED INDEX [_etel_crm_ndx_etel_productresourceBase_etel_serialnumber] ON [dbo].[etel_productresourceBase]

(

[etel_serialnumber] ASC

)

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 80) ON [PRIMARY]

GOASKER

OK, we will check with our DBA on above index to drop/or retain in database.

We are planning to test table partition based on the column UID on our test server. while executing below PF we are getting error. Can you please review below PF and code.

Error: Duplicate range boundary values are not allowed in partition function boundary values list. Partition boundary values at ordinal 2 and 3 are equal.

We are planning to test table partition based on the column UID on our test server. while executing below PF we are getting error. Can you please review below PF and code.

Error: Duplicate range boundary values are not allowed in partition function boundary values list. Partition boundary values at ordinal 2 and 3 are equal.

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE LEFT FOR VALUES

(

'00000000-0000-0000-0000-000000000000', '00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-100000000000', '00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-200000000000', '00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-300000000000', '00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-400000000000', '00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-500000000000', '00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-600000000000', '00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-700000000000', '00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-800000000000', '00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-900000000000', '00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-A00000000000', '00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-B00000000000', '00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-C00000000000', '00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-D00000000000', '00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-E00000000000', '00000000-0000-0000-0000-F00000000000' ,

'00000000-0000-0000-0000-F00000000000', 'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF'

); DECLARE @Sample TABLE ( GroupNo INT, StartID UNIQUEIDENTIFIER, EndID UNIQUEIDENTIFIER );

INSERT INTO @Sample

VALUES

( 1, '00000000-0000-0000-0000-000000000000', '00000000-0000-0000-0000-100000000000' ),

( 2, '00000000-0000-0000-0000-100000000000', '00000000-0000-0000-0000-200000000000' ),

( 3, '00000000-0000-0000-0000-200000000000', '00000000-0000-0000-0000-300000000000' ),

( 4, '00000000-0000-0000-0000-300000000000', '00000000-0000-0000-0000-400000000000' ),

( 5, '00000000-0000-0000-0000-400000000000', '00000000-0000-0000-0000-500000000000' ),

( 6, '00000000-0000-0000-0000-500000000000', '00000000-0000-0000-0000-600000000000' ),

( 7, '00000000-0000-0000-0000-600000000000', '00000000-0000-0000-0000-700000000000' ),

( 8, '00000000-0000-0000-0000-700000000000', '00000000-0000-0000-0000-800000000000' ),

( 9, '00000000-0000-0000-0000-800000000000', '00000000-0000-0000-0000-900000000000' ),

( 10, '00000000-0000-0000-0000-900000000000', '00000000-0000-0000-0000-A00000000000' ),

( 11, '00000000-0000-0000-0000-A00000000000', '00000000-0000-0000-0000-B00000000000' ),

( 12, '00000000-0000-0000-0000-B00000000000', '00000000-0000-0000-0000-C00000000000' ),

( 13, '00000000-0000-0000-0000-C00000000000', '00000000-0000-0000-0000-D00000000000' ),

( 14, '00000000-0000-0000-0000-D00000000000', '00000000-0000-0000-0000-E00000000000' ),

( 15, '00000000-0000-0000-0000-E00000000000', '00000000-0000-0000-0000-F00000000000' ),

( 16, '00000000-0000-0000-0000-F00000000000', 'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF' );

SELECT S.GroupNo,

COUNT(*)

FROM @Sample S

INNER JOIN dbo.Table T ON T.productId >= S.StartID AND T.productId < S.EndID;

I think it's because you configured same value for end and begin of the partitions. Try this:

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE LEFT FOR VALUES

(

'00000000-0000-0000-0000-000000000000', '00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-100000000001', '00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-200000000001', '00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-300000000001', '00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-400000000001', '00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-500000000001', '00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-600000000001', '00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-700000000001', '00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-800000000001', '00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-900000000001', '00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-A00000000001', '00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-B00000000001', '00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-C00000000001', '00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-D00000000001', '00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-E00000000001', '00000000-0000-0000-0000-F00000000000' ,

'00000000-0000-0000-0000-F00000000001', 'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF'

); ASKER

@Vitor: Can you please tell me how to fix below error

"

The associated partition function 'PF_TESTDB' generates more partitions than there are file groups mentioned in the scheme 'PS_TESTDB'.

"

"

The associated partition function 'PF_TESTDB' generates more partitions than there are file groups mentioned in the scheme 'PS_TESTDB'.

"

use DBName

go

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE LEFT FOR VALUES

(

'00000000-0000-0000-0000-000000000000', '00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-100000000001', '00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-200000000001', '00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-300000000001', '00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-400000000001', '00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-500000000001', '00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-600000000001', '00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-700000000001', '00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-800000000001', '00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-900000000001', '00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-A00000000001', '00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-B00000000001', '00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-C00000000001', '00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-D00000000001', '00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-E00000000001', '00000000-0000-0000-0000-F00000000000' ,

'00000000-0000-0000-0000-F00000000001', 'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF'

);

USE DBName

GO

CREATE PARTITION SCHEME [PS_TESTDB]

AS

PARTITION [PF_TESTDB] TO

(FG_2000,FG_2001,FG_2002,FG_2003,FG_2004,FG_2005,FG_2006,FG_2007,FG_2008,FG_2009,FG_2010,FG_2011,FG_2012,FG_2013,FG_2014,FG_2015,FG_2016,[PRIMARY])

GO

Each partition need to be in a filegroup. Go to your database properties and check how many filegroups it has. You might need to create more filegroups to accomplish your partition structure.

ASKER

We have 18 FG (17 +1 primary file group). Is there any changes required on partition function or schema to fix above error?

file group

======

PRIMARY

FG_2000

FG_2001

FG_2002

FG_2003

FG_2004

FG_2005

FG_2006

FG_2007

FG_2008

FG_2009

FG_2010

FG_2011

FG_2012

FG_2013

FG_2014

FG_2015

FG_2016

file group

======

PRIMARY

FG_2000

FG_2001

FG_2002

FG_2003

FG_2004

FG_2005

FG_2006

FG_2007

FG_2008

FG_2009

FG_2010

FG_2011

FG_2012

FG_2013

FG_2014

FG_2015

FG_2016

ASKER

Do I need to create 32 file groups for above partition function?

Pls advise.

Pls advise.

Yes, this means you'll need 32 partitions. If you only want 16 you'll need to reduce it:

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE LEFT FOR VALUES

(

'00000000-0000-0000-0000-000000000000',

'00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-F00000000000'); ASKER

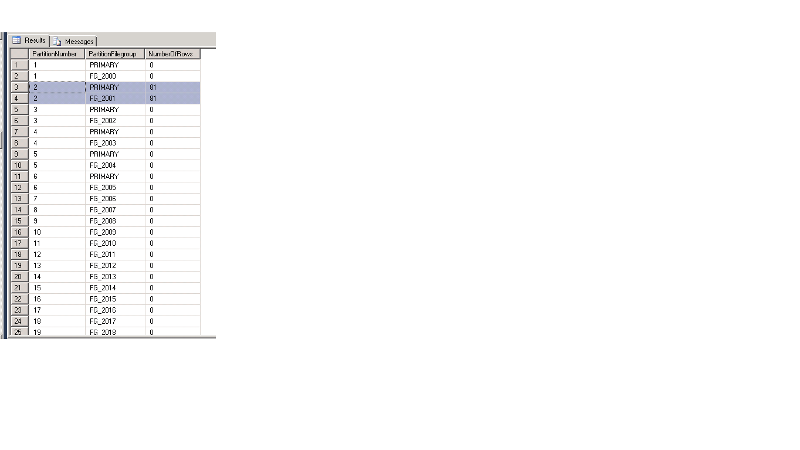

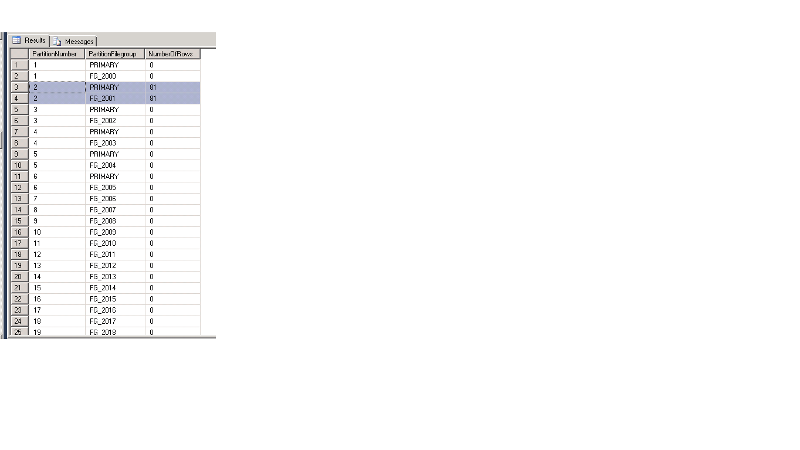

Oki. I have created 32 file groups & partitions. But when I run below command it was NOT showing 32 partitions. I think I am missing something here....

SELECT

p.partition_number AS PartitionNumber,

f.name AS PartitionFilegroup,

p.rows AS NumberOfRows

FROM sys.partitions p

JOIN sys.destination_data_spaces dds ON p.partition_number = dds.destination_id

JOIN sys.filegroups f ON dds.data_space_id = f.data_space_id

WHERE OBJECT_NAME(OBJECT_ID) = 'TableName_1'

Output:

PartitionNumber PartitionFilegroup NumberOfRows

1 PRIMARY 0

1 FG_2000 0

Try with LEFT JOIN. I guess that you don't have rows yet and might be because of that.

ASKER

I have resolved issue by drop and re-creating existing clustered index. However, we have 81 rows in table and after running below sql command I can see 81 rows onboth primary file group and FG_2002 FG. Is it correct?

My Partition schema definition:

My Partition schema definition:

My Partition function definition:

SELECT

p.partition_number AS PartitionNumber,

f.name AS PartitionFilegroup,

p.rows AS NumberOfRows

FROM sys.partitions p

JOIN sys.destination_data_spaces dds ON p.partition_number = dds.destination_id

JOIN sys.filegroups f ON dds.data_space_id = f.data_space_id

WHERE OBJECT_NAME(OBJECT_ID) = 'Tablename' My Partition schema definition:

My Partition schema definition:

CREATE PARTITION SCHEME [PS_TESTDB]

AS

PARTITION [PF_TESTDB] TO

(FG_2000,

FG_2001,

FG_2002,

FG_2003,

FG_2004,

FG_2005,

FG_2006,

FG_2007,

FG_2008,

FG_2009,

FG_2010,

FG_2011,

FG_2012,

FG_2013,

FG_2014,

FG_2015,

FG_2016,

FG_2017,

FG_2018,

FG_2019,

FG_2020,

FG_2021,

FG_2022,

FG_2023,

FG_2024,

FG_2025,

FG_2026,

FG_2027,

FG_2028,

FG_2029,

FG_2030,

FG_2031,

FG_2032,

[PRIMARY]

)

GOMy Partition function definition:

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE left FOR VALUES

(

'00000000-0000-0000-0000-000000000000', '00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-100000000001', '00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-200000000001', '00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-300000000001', '00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-400000000001', '00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-500000000001', '00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-600000000001', '00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-700000000001', '00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-800000000001', '00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-900000000001', '00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-A00000000001', '00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-B00000000001', '00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-C00000000001', '00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-D00000000001', '00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-E00000000001', '00000000-0000-0000-0000-F00000000000' ,

'00000000-0000-0000-0000-F00000000001', 'FFFFFFFF-FFFF-FFFF-FFFF-FFFFFFFFFFFF'

);

In your SELECT you missed to join with sys.indexes:

JOIN sys.indexes AS i

ON i.object_id = p.object_id

AND i.index_id = p.index_id

JOIN sys.indexes AS i

ON i.object_id = p.object_id

AND i.index_id = p.index_id

ASKER

OK, I have done changes in sql query & we are in the final step that is review the output performance results. The partition table scan count is showing very high as compared to original table (without partitioning table). Is it OK or will it cause issue?

SET STATISTICS IO, TIME ON

GO

Select * from Table_without_Part

GO

performance results:

Scan count 1, logical reads 5, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SET STATISTICS IO, TIME ON

GO

Select * from Table_with_Part

GO

performance results:

Scan count 33, logical reads 5, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SET STATISTICS IO, TIME ON

GO

Select * from Table_without_Part

GO

performance results:

Scan count 1, logical reads 5, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SET STATISTICS IO, TIME ON

GO

Select * from Table_with_Part

GO

performance results:

Scan count 33, logical reads 5, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

ASKER

I'm more concerned on high scan count on partitioned table. Is it OK or will it impact on application performance?

Well, it need to scan each filegroup plus the Primary filegroup and that's why you have the 33 scans.

Isn't bad I would say. Usually you'll see the improvement when you're returning data from one or two filegroups only (less data processed).

Btw, I've reviewed your Partition function and I think you'll be better with these initial 16 ranges. Later if you need more, then you just need to add it:

Isn't bad I would say. Usually you'll see the improvement when you're returning data from one or two filegroups only (less data processed).

Btw, I've reviewed your Partition function and I think you'll be better with these initial 16 ranges. Later if you need more, then you just need to add it:

CREATE PARTITION FUNCTION [PF_TESTDB](UNIQUEIDENTIFIER) AS

RANGE LEFT FOR VALUES

(

'00000000-0000-0000-0000-000000000000',

'00000000-0000-0000-0000-100000000000' ,

'00000000-0000-0000-0000-200000000000' ,

'00000000-0000-0000-0000-300000000000' ,

'00000000-0000-0000-0000-400000000000' ,

'00000000-0000-0000-0000-500000000000' ,

'00000000-0000-0000-0000-600000000000' ,

'00000000-0000-0000-0000-700000000000' ,

'00000000-0000-0000-0000-800000000000' ,

'00000000-0000-0000-0000-900000000000' ,

'00000000-0000-0000-0000-A00000000000' ,

'00000000-0000-0000-0000-B00000000000' ,

'00000000-0000-0000-0000-C00000000000' ,

'00000000-0000-0000-0000-D00000000000' ,

'00000000-0000-0000-0000-E00000000000' ,

'00000000-0000-0000-0000-F00000000000'); SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

@Anthony : You mean we have to add new column in table with datatype NEWSEQUENTIALID and we have to build table partition on this column using above partition function definition?

Ok, let me rephrase since my phrase wasn't clear and can mislead even it was specific for author's issue:

Each partition need to have a filegroup assigned. A filegroup can handle more than one partition.

Each partition need to have a filegroup assigned. A filegroup can handle more than one partition.

ASKER

@Anthony : You mean we have to add new column in table with datatype NEWSEQUENTIALID and we have to build table partition on this column using above partition function definition?

I would never consider using a GUID in the first place for partitioning. The purpose of partitioning is mainly for large databases. Anything greater than say 200GB is a good candidate for partitioning, provided they have a good key that is either a date or failing that you can resort to using a sequential key. Anything less than 50GB and you are probably wasting time.

ASKER

My DB Prod DB size is around 280 GB. So whats your thoughts on partitioning for large row count tables with GUID?

ASKER

For DB size 280 GB: Do we need to add new column in existing table with datatype NEWSEQUENTIALID and do we have to build table partition on this column using above partition function definition?

or is there any other techniques/or mechanism for partitioning the table with UID?

or is there any other techniques/or mechanism for partitioning the table with UID?

Why do you want to partition anything? What are your concrete reasons?

ASKER

We have few large row count tables in our DB. These DB tables growing very rapidly (we are inserting data on weekly basis). I have given one table row count table above.

To avoid performance issues in future for these large row count tables we are planning for table partition.

To avoid performance issues in future for these large row count tables we are planning for table partition.

So you don't plan to outage old data? Just let the tables grow?

OK, in this case: Partitioning will NOT avoid performanceissues in this case as long as you don't have the hardware to support it.

The only use of partitioning is to split your table over different file groups placed on different disks.

OK, in this case: Partitioning will NOT avoid performanceissues in this case as long as you don't have the hardware to support it.

The only use of partitioning is to split your table over different file groups placed on different disks.

ASKER

1. We have three disks, one for data files, second one for log & 3rd one for Backup. Can we place different file groups on single disk (that is on data file disk)?

2. We have around 256 GB RAM, we have allocated 230 GB to SQL & remaining memory to OS. (Min memory: 50 GB, max memory: 180 GB). Is this configuration is correct?

2. We have around 256 GB RAM, we have allocated 230 GB to SQL & remaining memory to OS. (Min memory: 50 GB, max memory: 180 GB). Is this configuration is correct?

1. With that few disks, the only advantage of partitioning you'll get: file groups and file group backup.

2. imho 4GB or 8GB are enough for the OS.

2. imho 4GB or 8GB are enough for the OS.

ASKER

Can you pls elaborate more on point#1

When you have different spindles for each file group, thus each FG is located on its own volume and the volumns are spread over different disks, then a parallel query gets the data faster, cause you have separate physical IO paths for each partion.

When you place all partions on the same spindles, even when they span multiple volumes, then you won't get any performance advantage.

This considerations are only valid for physical reads. According to your RAM assigned to your SQL Server instance I would guess that you have an high PLE value. So I think probable all the data you need is already in the buffer pool (hot buffers).

So even when optimizing the IO paths, I don't think that there is a way to gain performance with partions.

When I think about your table structure, you will also not gain that much from proper indexing, cause the space required for indices in your case is almost the same as your table size. So using appropriate indices will double (or even more) the disk space needed.

When you place all partions on the same spindles, even when they span multiple volumes, then you won't get any performance advantage.

This considerations are only valid for physical reads. According to your RAM assigned to your SQL Server instance I would guess that you have an high PLE value. So I think probable all the data you need is already in the buffer pool (hot buffers).

So even when optimizing the IO paths, I don't think that there is a way to gain performance with partions.

When I think about your table structure, you will also not gain that much from proper indexing, cause the space required for indices in your case is almost the same as your table size. So using appropriate indices will double (or even more) the disk space needed.

To avoid performance issues in future for these large row count tables we are planning for table partition.

I suspect you missed my comment from earlier on, even though it was in bold, so I am going to repeat it again here:

Just to correct one other fallacy: Partitioning per se does not improve performance. Partitioning is implemented to simplify maintenance, which can in turn improve performance.

I suspect you missed my comment from earlier on, even though it was in bold, so I am going to repeat it again here:

Just to correct one other fallacy: Partitioning per se does not improve performance. Partitioning is implemented to simplify maintenance, which can in turn improve performance.

Even I agree with the others Experts relatively to your current table size doesn't justify yet a partitioning solution I disagree when they say that it won't improve performance.

You'll see a performance improvement and you'll see it better when the table grows more, so if you're planning this in a proactive way then you're doing correctly.

Even if the filegroups are stored in the same disk you'll see the improvement in the performance since SQL Server engine it's intelligent enough to only read the necessary filegroups (by the partitioning function he knows where the data are stored and will avoid to read unnecessary filegroups so processing much less records).

You'll see a performance improvement and you'll see it better when the table grows more, so if you're planning this in a proactive way then you're doing correctly.

Even if the filegroups are stored in the same disk you'll see the improvement in the performance since SQL Server engine it's intelligent enough to only read the necessary filegroups (by the partitioning function he knows where the data are stored and will avoid to read unnecessary filegroups so processing much less records).

ASKER

I have learnt a lot on Table partitioning from all of you :)

Final conclusion: We won't get too much performance if we do partitioning based on UID column. Please correct me If I'm wrong.

My final & last question:

1. We have another column with the name of CreatedOn (Datetime datatype), but we are rarely using this column in our SP's and SQL scripts. Is it good idea for table partitioning based on CreatedOn column as compared to UID column?

2. The createdOn column is not primary key in the table (no clustered index). If answer is YES for above question, do we need to mark CreatedOn column as primary key in table (creating clustered index)?

Our main concern is to avoid performance issues for large row count tables in future and these tables growing very rapidly. For next 2-3 years our management is not ready to buy any new hardware due to budget issue :)

Final conclusion: We won't get too much performance if we do partitioning based on UID column. Please correct me If I'm wrong.

My final & last question:

1. We have another column with the name of CreatedOn (Datetime datatype), but we are rarely using this column in our SP's and SQL scripts. Is it good idea for table partitioning based on CreatedOn column as compared to UID column?

2. The createdOn column is not primary key in the table (no clustered index). If answer is YES for above question, do we need to mark CreatedOn column as primary key in table (creating clustered index)?

Our main concern is to avoid performance issues for large row count tables in future and these tables growing very rapidly. For next 2-3 years our management is not ready to buy any new hardware due to budget issue :)

We won't get too much performance if we do partitioning based on UID column.Correct. Is what we're being saying to you since the beginning.

Is it good idea for table partitioning based on CreatedOn column as compared to UID column?Yes. And for better performance, create a partitioned index (by using the same partition function) on that column as well.

2. The createdOn column is not primary key in the table (no clustered index). If answer is YES for above question, do we need to mark CreatedOn column as primary key in table (creating clustered index)?Doesn't matter. Isn't a demand to be PK.

ASKER

Can you please review below steps in attached file (Partitioning based on CreatedOn column) .

a. CREATE PARTITION FUNCTION

b. CREATE PARTITION SCHEME

c. CREATE TABLE (partition schema - column name at the end & index).

I have already shared existing index details above.

Please let me know if I missed any steps in script.

Final.sql

a. CREATE PARTITION FUNCTION

b. CREATE PARTITION SCHEME

c. CREATE TABLE (partition schema - column name at the end & index).

I have already shared existing index details above.

Please let me know if I missed any steps in script.

Final.sql

Steps are those ones that you posted but don't forget to create an index on CreatedOn column.

Mind that the created extra data files are growing by percentage (the default) so I recommend you to configure them to autogrow in a constant value.

You'll also need to set a process to run every to create a new partition every year.

Mind that the created extra data files are growing by percentage (the default) so I recommend you to configure them to autogrow in a constant value.

You'll also need to set a process to run every to create a new partition every year.

ASKER

Is it correct?

CREATE NONCLUSTERED INDEX [NCI_CreatedOn] ON [dbo].[Table_New]

(

[CreatedOn] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 80)

ON VisitorToFilegroup (CreatedOn)

GO

ALTER DATABASE [TEMP]

ADD FILE

( NAME = FG_2012,

FILENAME = 'D:\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\DATA_2012.ndf',FILEGROWTH = 512MB)

TO FILEGROUP FG_2012

GO

Seems ok.

CreatedOn can accept NULL? If so, those records will be in PRIMARY filegroup instead of the partitioned ones?

CreatedOn can accept NULL? If so, those records will be in PRIMARY filegroup instead of the partitioned ones?

ASKER

Yes, CreatedOn column accepts NULL values.

Ok. Just be aware of that.

ASKER

If the partitioning column (createdon) does not appear in the queries, SQL Serve will search all partitions?

That's correct. Only if your query filters by CreatedOn column the SQL engine will be able to use the partition function.

ASKER

As I said above, we are rarely using CreatedOn column in our queries. how this partition will help us to improve the performance?

You need to chose the best key. I would say that in general, a date field is the best candidate for the partitioning since when working with historical or archived data, the filter is usually by date.

Another option for your case is partitioning by Country, City or Postal Code. But this only works good if your query uses one of this field to filter records.

Another option for your case is partitioning by Country, City or Postal Code. But this only works good if your query uses one of this field to filter records.

A UNIQUEIDENTIFER, cause it is a GUID, defines a certain byte grouping. Under this common interpretation your approch to use only 2 bytes means splitting an atomic value or much worse violating 1NF.

Also your calculus is incorrect:

Open in new window

And cause it is dup: Is your UNIQUEIDENTIFIER random or sequential?