enigmasolutions

asked on

SQL Log File Filling up Rapidly

Hi,

I have a customer with a 6GB database. They process approximately 200 sales transactions per day. After 24 hours they run out of storage space because the log file has gone from 0MB to 100GB. So every day I set the Logging method to SIMPLE. I then shrink the log file. I then set logging back to FULL. I then take a full backup and a log backup for good measure - both execute happily. Now the log file is 0MB. 24 hours later log file is 100GB again. There are no open transactions at any stage (I checked before and after). When I select log_reuse_wait_desc from sys.databases I get log_reuse_wait_desc=LOG_BA

What is causing the log file to go crazy and fill up the drive?

I have a customer with a 6GB database. They process approximately 200 sales transactions per day. After 24 hours they run out of storage space because the log file has gone from 0MB to 100GB. So every day I set the Logging method to SIMPLE. I then shrink the log file. I then set logging back to FULL. I then take a full backup and a log backup for good measure - both execute happily. Now the log file is 0MB. 24 hours later log file is 100GB again. There are no open transactions at any stage (I checked before and after). When I select log_reuse_wait_desc from sys.databases I get log_reuse_wait_desc=LOG_BA

What is causing the log file to go crazy and fill up the drive?

SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

The database size is not as important as the activity. If it grows that large, a single transaction backup every twenty four hours for transaction log backup is not enough. Start with a transaction log vackup every four hours. Then look at the transaction backup file size.

Keep an eye on the space where the backups are stored.

Backups should be done automatically.

Keep an eye on the space where the backups are stored.

Backups should be done automatically.

ASKER

OK will do.

I would love to know how 200 transactions result in 100GB of log activity?

I wish there was some way to analyse how this can happen?

I would love to know how 200 transactions result in 100GB of log activity?

I wish there was some way to analyse how this can happen?

Indeed it's not unusual to schedula log backups every 15 minutes. The choice of this frequency actually is the choice of the timeframe of data you accept as worst case loss, if the drive with data and log file fails.

Bye, Olaf.

Bye, Olaf.

Look at the transaction, what is it doing.

If you are doing en mass update/s that might account to log growth, but yours is a rather rapid growth.

Is this a custom app of comercial origin or it is an internally created application.

You could use Dbcc to see the transaction in different detail levels.

Dbcc log

Before you change the recovery model from full to simple, do you stop any service?

Did you run Dbcc opentran(database)

Monitor the backups to see when the transaction log backup grows.

Set the size of the log to a fixed size. Note that when it reaches the limit, errors pointing to transaction log running out of space will be generated.

If you are doing en mass update/s that might account to log growth, but yours is a rather rapid growth.

Is this a custom app of comercial origin or it is an internally created application.

You could use Dbcc to see the transaction in different detail levels.

Dbcc log

Before you change the recovery model from full to simple, do you stop any service?

Did you run Dbcc opentran(database)

Monitor the backups to see when the transaction log backup grows.

Set the size of the log to a fixed size. Note that when it reaches the limit, errors pointing to transaction log running out of space will be generated.

Which sql server version are you using?

See if dbcc sqlperf referenced in https://msdn.microsoft.com/en-us/library/ms189768(v=sql.105).aspx is available to you?

See if dbcc sqlperf referenced in https://msdn.microsoft.com/en-us/library/ms189768(v=sql.105).aspx is available to you?

there can be several reasons behind filling up rapidly of a SQL log file as mentioned in above comments. Check if it is helpful for you: https://support.microsoft.com/en-in/kb/317375

ASKER

Hi Jason, actually I read that article prior to submitting this question. It was quite informative.

Anyway I think I may know what was causing the problem... I found that someone had set TCP Port 1433 open to the public. And of course there were lots of attempted logins in the SQL log from hackers. I suspect this was the cause of the log file issue. I just hope no one cracked the sa password (which was very long). Fortunately there is no financial data.

I saw this issue once before, but on that occasion the effect was a hit on their in-house bandwidth to the point of almost denial of service. On that occasion the hackers probably didn't crack it.

Does someone know if hackers could cause log file growth by just login attempts?

Anyway I think I may know what was causing the problem... I found that someone had set TCP Port 1433 open to the public. And of course there were lots of attempted logins in the SQL log from hackers. I suspect this was the cause of the log file issue. I just hope no one cracked the sa password (which was very long). Fortunately there is no financial data.

I saw this issue once before, but on that occasion the effect was a hit on their in-house bandwidth to the point of almost denial of service. On that occasion the hackers probably didn't crack it.

Does someone know if hackers could cause log file growth by just login attempts?

Since you dumped the log, it is now academic.

Usually, a transaction log will only have transaction/Db updates.....

Double check security on the database to which this log belongs, they might not have cracked the sa password, but this db might not have needed it. I.e. Has admin and no password as the dbo user.

Double check your code to make sure it is not suseptible to SQL injection issues.

Usually, a transaction log will only have transaction/Db updates.....

Double check security on the database to which this log belongs, they might not have cracked the sa password, but this db might not have needed it. I.e. Has admin and no password as the dbo user.

Double check your code to make sure it is not suseptible to SQL injection issues.

ASKER

Even though I has wiping out the log and then doing a full backup...

I suspect the issue was that I was never doing a log backup.

Although this doesn't make a lot of sense to me.

Perhaps it was a combination of both.

Anyway today the log file is tiny.

I will check it again tomorrow.

I suspect the issue was that I was never doing a log backup.

Although this doesn't make a lot of sense to me.

Perhaps it was a combination of both.

Anyway today the log file is tiny.

I will check it again tomorrow.

you should not set the log to auto-shrink. transaction log growing and shrinking puts an undue/unnecessary burden on the Disk I/O.

Your Log file should be around 1GB if you backup the transaction log regularly. While the size on the filesystem will be 1Gb, everytime the transaction log is backed up, all the inactive transactions are effectively "deleted" from the log.

Your Log file should be around 1GB if you backup the transaction log regularly. While the size on the filesystem will be 1Gb, everytime the transaction log is backed up, all the inactive transactions are effectively "deleted" from the log.

ASKER

PLEASE HELP...

Both the Log file and the Log Backup files are still growing exponentially.

I setup both a Log backup and Full Backup to run nightly. I don't really need hourly transaction log backups. The transaction activity is tiny!!! But the log files are still growing out of control.

This is crazy - there is very little activity in this database.

In the last 24 hours there were just 224 invoice transactions posted to the database.

But the log file has jumped by 3GB ???

Both the Log file and the Log Backup files are still growing exponentially.

I setup both a Log backup and Full Backup to run nightly. I don't really need hourly transaction log backups. The transaction activity is tiny!!! But the log files are still growing out of control.

This is crazy - there is very little activity in this database.

In the last 24 hours there were just 224 invoice transactions posted to the database.

But the log file has jumped by 3GB ???

ASKER CERTIFIED SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

FYI - the ap is our own accounting software. But there is a website www.housegarden.com.au that reads data from the same database. There is no updating of data to our database by this website.

Arnold - in response to your questions:

* a 10Gb transaction log in a 24 hour period might be what your system has.

> I guess so

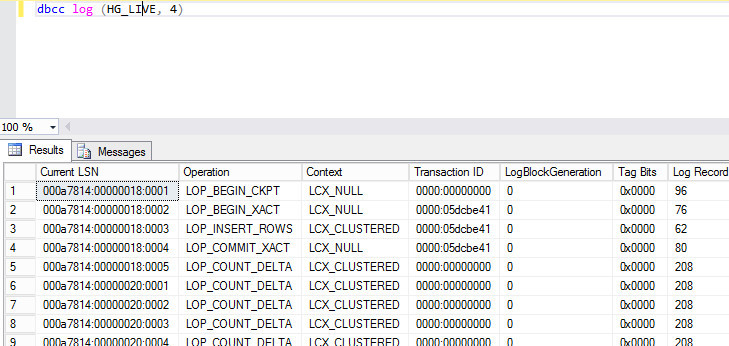

* have you had a chance to look at the dbcc log to look at what the transaction are?

> How do I use/interpret DBCC Log (I did look at it briefly - it was hard to read)

> Do your DB transaction include deletes as well as additions?

* No

> Do you have your settings such that both DBs and Transaction logs are set to auto-shrink?

* Yes - basically use default settings

> Do you have transaction for reports, etc. (data reads only)

* Yes - queries are executed to retrieve data from the database (eg in our Ap user may lookup a list of stock codes / and on the website web users might retrieve a list of stock codes under a category).

* as note mid day, run dbcc log(HG_LIVE, 4) and see what the transactions that are in the DB.

> Unfortunately I decided to completely clear the Log (as per the process at top of this post). We are heading into the weekend and I didn't want phone calls.

> Anyway I just ran this - but hard to interpret - see screen shot below - what does it all mean

* If you do not need to have a recovery model for a point in time, such that you are ok with recovering with a potential loss of work from 24 hours, change the recovery model to simple and .......

> Yes I know I can do this. But I like to be able to do point in time restore. More importantly I want to understand why my log file is going mad.

* Another option, is use the server profiler and capture a 10-60 minute during peak and see what is being run against the DB.

> Not a bad idea - I will investigate

* Usually, you should not schedule the transaction log backup at the same time that you are performing a full or other backup, it unnecessarily tasks the DB and the DIsks.

Move transaction log up by 30 minutes or postpone the full backup by 45 minutes.

> Sure. Although it is not really at the same time since the Log Backup runs first and when it is finished then the Full Backup Runs. I don't think this is the issue. But point noted.

What is the log file growth rate is set to? What is the maximum size of the transaction log allowed? (DB properties, files)

The Log File is set to Grow by 10% with Unlimited Size

Arnold - in response to your questions:

* a 10Gb transaction log in a 24 hour period might be what your system has.

> I guess so

* have you had a chance to look at the dbcc log to look at what the transaction are?

> How do I use/interpret DBCC Log (I did look at it briefly - it was hard to read)

> Do your DB transaction include deletes as well as additions?

* No

> Do you have your settings such that both DBs and Transaction logs are set to auto-shrink?

* Yes - basically use default settings

> Do you have transaction for reports, etc. (data reads only)

* Yes - queries are executed to retrieve data from the database (eg in our Ap user may lookup a list of stock codes / and on the website web users might retrieve a list of stock codes under a category).

* as note mid day, run dbcc log(HG_LIVE, 4) and see what the transactions that are in the DB.

> Unfortunately I decided to completely clear the Log (as per the process at top of this post). We are heading into the weekend and I didn't want phone calls.

> Anyway I just ran this - but hard to interpret - see screen shot below - what does it all mean

* If you do not need to have a recovery model for a point in time, such that you are ok with recovering with a potential loss of work from 24 hours, change the recovery model to simple and .......

> Yes I know I can do this. But I like to be able to do point in time restore. More importantly I want to understand why my log file is going mad.

* Another option, is use the server profiler and capture a 10-60 minute during peak and see what is being run against the DB.

> Not a bad idea - I will investigate

* Usually, you should not schedule the transaction log backup at the same time that you are performing a full or other backup, it unnecessarily tasks the DB and the DIsks.

Move transaction log up by 30 minutes or postpone the full backup by 45 minutes.

> Sure. Although it is not really at the same time since the Log Backup runs first and when it is finished then the Full Backup Runs. I don't think this is the issue. But point noted.

What is the log file growth rate is set to? What is the maximum size of the transaction log allowed? (DB properties, files)

The Log File is set to Grow by 10% with Unlimited Size

When your log grows larger than the db there have to be lots of either deletes and inserts - or lots of updates. Any combination of operations not letting data grow, but the log.

Bye, Olaf.

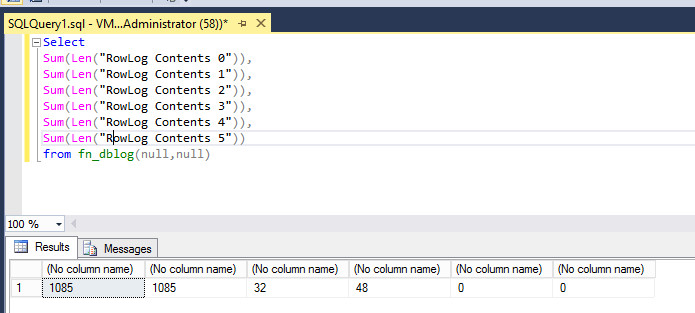

Select

Sum(Len("RowLog Contents 0")),

Sum(Len("RowLog Contents 1")),

Sum(Len("RowLog Contents 2")),

Sum(Len("RowLog Contents 3")),

Sum(Len("RowLog Contents 4")),

Sum(Len("RowLog Contents 5"))

from fn_dblog(null,null) Bye, Olaf.

ASKER

Well, those columns contain the net changes of bytes in binary form. If you CAST("RowLog Contents 0" as varchar(max)) you might see something familiar from text fields.

For now you have very low changes in your data, about 2KB.

At some point at the day this is going through the roof, as you experienced. Then the records with long content or the sum of long content will point nearer towards the time it happens or happened.

Bye, Olaf.

For now you have very low changes in your data, about 2KB.

At some point at the day this is going through the roof, as you experienced. Then the records with long content or the sum of long content will point nearer towards the time it happens or happened.

Bye, Olaf.

Does your internal accounting system when generating reports creates temporary tables/views, for the purpose of facilitating/aggregating data for report presentations?

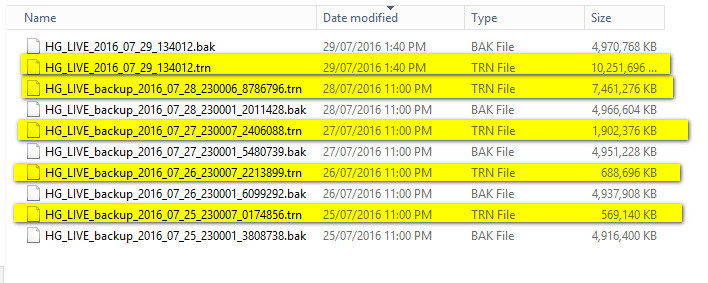

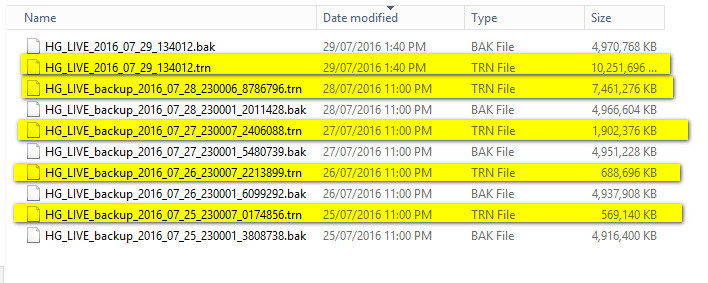

Try scheduling the transaction log back to run hourly and see where tge .trn backup file jumps in size.

Then try to capture SQL queries during that time frame.

Double check you code to make sure you are not directly passing user input into queries being sent to the server.

I.e. You have an input_field from form

Where you have select * from table where column=$data['input_field'

Try scheduling the transaction log back to run hourly and see where tge .trn backup file jumps in size.

Then try to capture SQL queries during that time frame.

Double check you code to make sure you are not directly passing user input into queries being sent to the server.

I.e. You have an input_field from form

Where you have select * from table where column=$data['input_field'

ASKER

Aha, awesome!

Thanks to your help Arnold... I worked it out.

To find the issue I did this:

* First I set Log Backups to run every 30 minutes

* And at the same time I set SQL Profiler running

I could tell from the Log backups when the issue occurred. And by saving the Profiler results back into a table - then by querying this table I discovered what was causing the problem.

I discovered that one of my programmers was running a script to optimize indexes at 1:30am in the morning. The script called "IndexOptimize" came from this website: https://ola.hallengren.com/. So for now I disabled it.

FYI - he was also running sp_UpdateStatus nightly at 1:30am - which has resulted in huge performance boosts for our customers (so I kept this bit).

Thanks to your help Arnold... I worked it out.

To find the issue I did this:

* First I set Log Backups to run every 30 minutes

* And at the same time I set SQL Profiler running

I could tell from the Log backups when the issue occurred. And by saving the Profiler results back into a table - then by querying this table I discovered what was causing the problem.

I discovered that one of my programmers was running a script to optimize indexes at 1:30am in the morning. The script called "IndexOptimize" came from this website: https://ola.hallengren.com/. So for now I disabled it.

FYI - he was also running sp_UpdateStatus nightly at 1:30am - which has resulted in huge performance boosts for our customers (so I kept this bit).

ASKER

Using frequent Log Backups to find the point in time + using profiler to analyse the activity was the answer.

You should not disable the index optimization/rebuilding task, but schedule it to run every other monthly or every month basis not a daily.

Double check which options are set, it might be the person set the fragmentation too low where by everytime, the indexes are getting rebuilt versus optimized.

Double check which options are set, it might be the person set the fragmentation too low where by everytime, the indexes are getting rebuilt versus optimized.

ASKER

Awesome. Thanks for the tip.

I haven't the time right now to get into it... (Not sure what too low is).

But guess the default fragmentation setting will do for a relatively small database.

When I get a few minutes I am sure I can work it out.

Or perhaps you can recommend some good websites on this?

I haven't the time right now to get into it... (Not sure what too low is).

But guess the default fragmentation setting will do for a relatively small database.

When I get a few minutes I am sure I can work it out.

Or perhaps you can recommend some good websites on this?

I've not had a chance to recheck Ola's site.

Double check what the task is supposed to do.

I think thresholds I use if fragmentation in a table is less than 40% reorganize otherwise rebuild.

Ola's a powerful tool, double check which options/things are run.

It includes backups, etc.

Double check what the task is supposed to do.

I think thresholds I use if fragmentation in a table is less than 40% reorganize otherwise rebuild.

Ola's a powerful tool, double check which options/things are run.

It includes backups, etc.

ASKER

Thanks Arnold

ASKER

I am still wondering how a log file can jump from 0MB to 100GB in 24 hours, in a small database.

Anyway, I was effectively doing the backup and log backup manually.

Well, in truth yesterday I only did the database backup.

But today I have done both a database backup and a log backup.

And at 11pm tonight there will be another backup of database and log.

If this fixes the problem, then I would expect the log file to remain small by tomorrow. We shall see...