Storage vMotion limit between two different datastores?

Hi All,

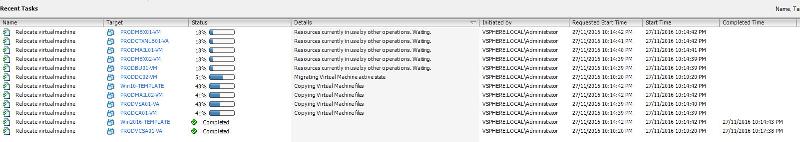

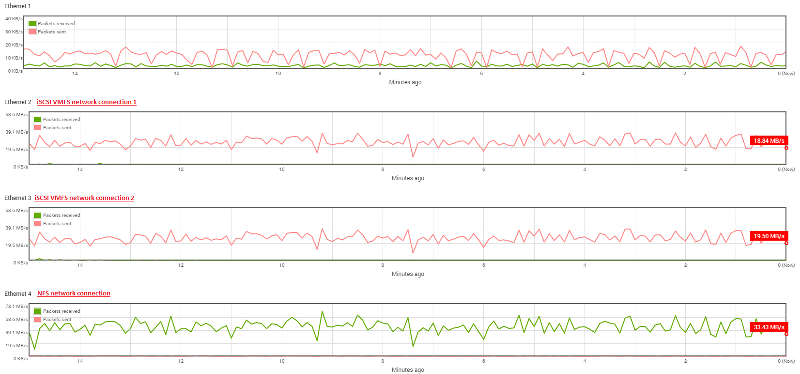

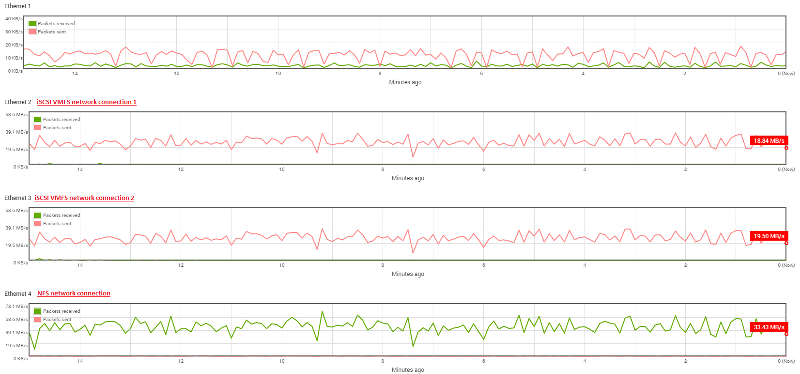

Is there any reason why Storage vMotion from my iSCSI VMFS datastore to my NFS datastore is limited to just 4 VMs at the same time?

Is this caused by an Ethernet MPIO bandwidth limitation, a NAS IOPS performance limitation, or an ESXi 6 limitation?

How can I increase it to its maximum value?

See the screenshots above of the QNAP network traffic and each datastore's network performance.

No problems.
