Pkafkas


VMware and SAN Design

I am preparing to install a SAN in our IT infrastructure, but I think I misunderstood the advice from a question I posted to Experts Exchange a few weeks ago: https://www.experts-exchange.com/questions/29082500/VMware-6-0-Update-3-Vmotion-configuration-High-Availability-configuration-for-new-SAN.html

Specifically, I am unsure about what was described to me as:

- A functional design

- A good design

- A bad design.

Allow me to describe our current VMware setup, and then how I plan to design the new VMware system with the SAN and vMotion enabled. Then please tell me whether my plan is functional/good or whether I need to change it.

Current VMware Setup:

3 x ESXi hosts with local storage. We have a VMware cluster running version 6.0 Update 3. We are licensed with VMware vSphere 6 Essentials Plus (licensed for 2 physical CPUs, unlimited cores per CPU).

Our Company LAN currently consists of 2 fully routable IP-Based VLans:

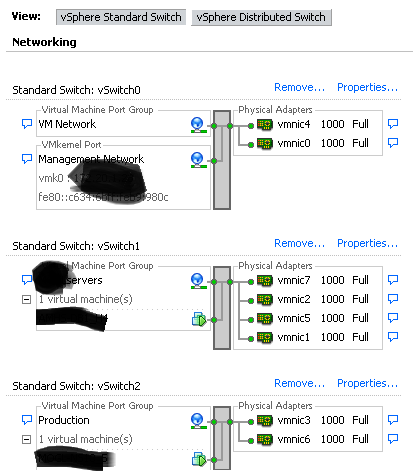

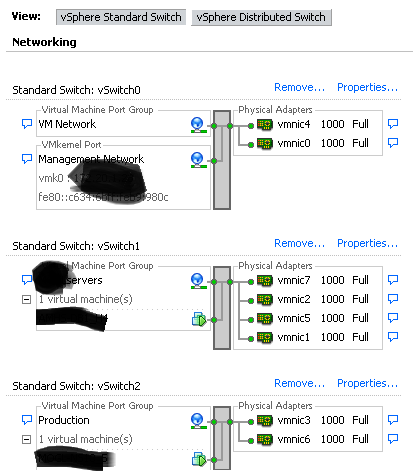

The ESXi hosts currently have 3 vSwitches:

1. Mgmt vSwitch, without any virtual servers connected.

   a. Connected to VLan-1.

2. Production vSwitch, for non-server virtual devices.

   a. Connected to VLan-1.

3. Server vSwitch, connected to VLan-10.

   a. This vSwitch is on a different VLAN from everything else.

My plan is to keep the 3-vSwitch structure, but to make sure that the Mgmt vSwitch has no virtual devices connected to it and that it has at least 2 vmnics.
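The two rules in this plan (the Mgmt vSwitch carries no virtual devices, and it has at least 2 vmnics) are easy to state as a check. A minimal Python sketch, assuming hypothetical vmnic numbers and port-group names, since the thread does not list them:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VSwitch:
    name: str
    vlan: str
    uplinks: List[str]                                       # physical vmnics (hypothetical names)
    vm_port_groups: List[str] = field(default_factory=list)  # VM-facing port groups


def check_mgmt_rules(switches: List[VSwitch], mgmt_name: str = "Mgmt") -> List[str]:
    """Check the two rules stated for the management vSwitch; return any violations."""
    mgmt = next(sw for sw in switches if sw.name == mgmt_name)
    problems = []
    if mgmt.vm_port_groups:
        problems.append(f"{mgmt.name} has virtual devices: {mgmt.vm_port_groups}")
    if len(mgmt.uplinks) < 2:
        problems.append(f"{mgmt.name} has {len(mgmt.uplinks)} vmnic(s); plan requires at least 2")
    return problems


# Planned 3-vSwitch layout (vmnic and port-group names are hypothetical).
plan = [
    VSwitch("Mgmt", "VLan-1", ["vmnic0", "vmnic1"]),
    VSwitch("Production", "VLan-1", ["vmnic2", "vmnic3"], ["Production-PG"]),
    VSwitch("Server", "VLan-10", ["vmnic4", "vmnic5"], ["Server-PG"]),
]

print(check_mgmt_rules(plan))  # → []
```

Note that neither rule says anything about where vMotion traffic will travel.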

Keep in mind that this is not a huge datacenter with hundreds of virtual servers. My questions are:

1. Will this design with 3 vSwitches work for vMotion and High Availability between the 3 ESXi hosts?

2. Is there anything wrong with the above design?

3. In a perfect world, for a small company that has 22 virtual servers (3 ESXi hosts), how can I best design this virtual environment?

I am not talking about vMotion, just setup and design right now.


ASKER

Mr. Hancock, thank you for the feedback. I am glad you are here in this question. Can you elaborate more on your answer to #1?

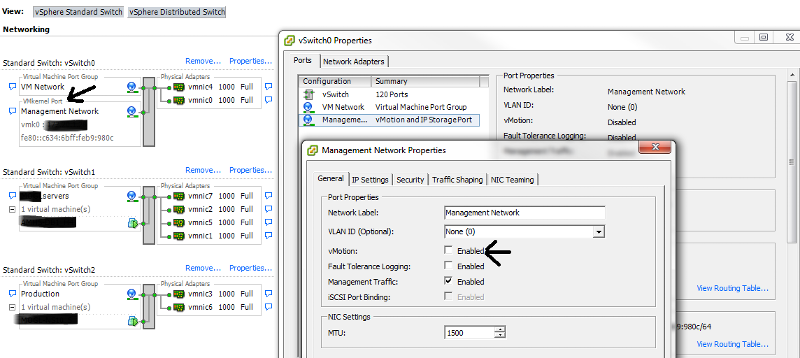

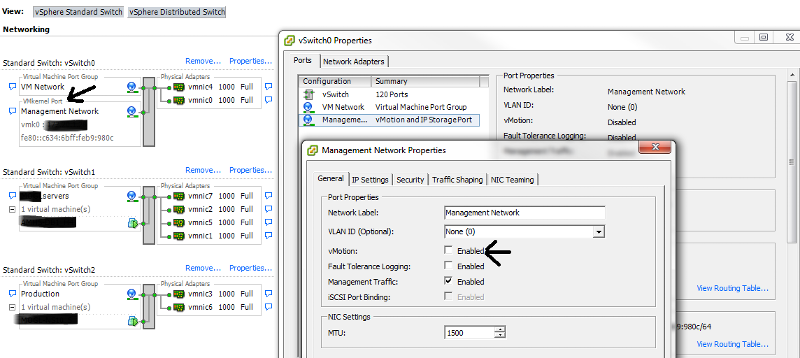

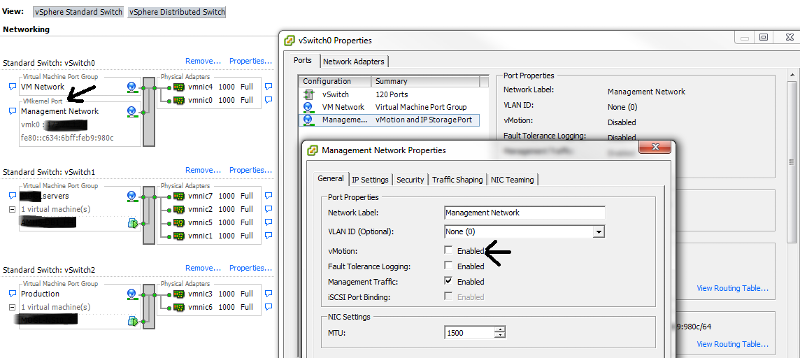

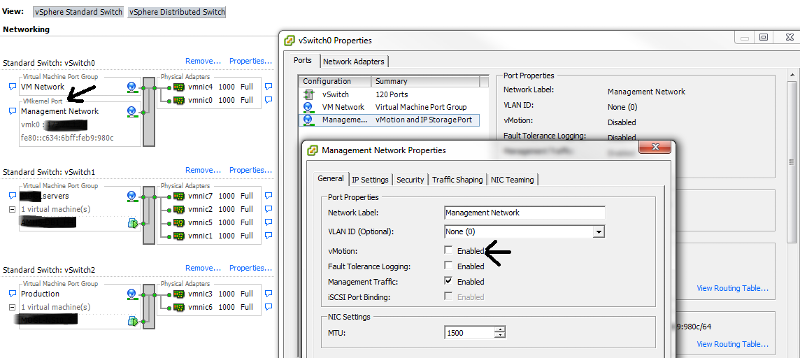

You said that my plan may work, as long as I enable vMotion on the VMkernel port group. Do you mean checking the box to enable vMotion for vSwitch0?

I would have to do this on all of the ESXi hosts where the VMkernel port group is located. The fact that it is on VLan-1 should not prevent vMotion from working correctly, right?

Equally important, I can keep using our current VMware cluster, correct? I hope I do not have to delete the current cluster and create a new one to enable vMotion.


If you ignore VMware best practice and just enable vMotion on any existing VMkernel port group, you are not following VMware's recommendation, which is to create a dedicated, standalone, isolated network for vMotion.

Ignore it at your peril!

You said that my plan may work, as long as I enable vMotion on the VMkernel port group. Do you mean checking the box to enable vMotion for vSwitch0?

Yes
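On the command line, the vMotion tick box corresponds (as far as I know, on ESXi 6.x) to tagging the VMkernel interface with the `VMotion` service via `esxcli network ip interface tag add`. The Python sketch below only builds the per-host command strings and runs nothing. The host names are hypothetical, and `vmk0` is an assumption: it is usually the Management Network VMkernel port on vSwitch0, but confirm with `esxcli network ip interface list` on each host.

```python
from typing import Dict, List


def vmotion_tag_commands(hosts: List[str], vmk: str = "vmk0") -> Dict[str, str]:
    """Build the esxcli command that enables vMotion on `vmk`, one entry per host.

    The strings are only printed here; each would be run in that host's ESXi shell.
    `vmk0` is an assumed default, not taken from the thread.
    """
    cmd = f"esxcli network ip interface tag add -i {vmk} -t VMotion"
    return {host: cmd for host in hosts}


# Hypothetical host names for the three-host cluster.
for host, cmd in vmotion_tag_commands(["esxi01", "esxi02", "esxi03"]).items():
    print(f"{host}: {cmd}")
```

The same change has to be made on every host in the cluster, not just one.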

I would have to do this on all of the ESXi hosts where the VMkernel port group is located. The fact that it is on VLan-1 should not prevent vMotion from working correctly, right?

Correct, all hosts need to be enabled for vMotion. All hosts need to be able to communicate with each other across the vMotion network, or vMotions cannot happen.
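One quick sanity check for "all hosts need to be able to communicate across the vMotion network" is that every host's vMotion VMkernel address falls in the same IP subnet (actual reachability still has to be tested, e.g. with `vmkping` from each host). A sketch using Python's standard `ipaddress` module; the addresses and the /24 prefix are hypothetical.

```python
import ipaddress
from typing import Dict


def all_in_one_subnet(vmk_ips: Dict[str, str], prefix_len: int) -> bool:
    """True if every host's vMotion address lies in the same IPv4/IPv6 network."""
    networks = {
        ipaddress.ip_interface(f"{ip}/{prefix_len}").network for ip in vmk_ips.values()
    }
    return len(networks) == 1


# Hypothetical vMotion VMkernel addresses, one per host.
hosts = {"esxi01": "192.168.50.11", "esxi02": "192.168.50.12", "esxi03": "192.168.50.13"}
print(all_in_one_subnet(hosts, 24))  # → True
```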

Equally important, I can keep using our current VMware cluster, correct? I hope I do not have to delete the current cluster and create a new one to enable vMotion.

Correct.

However, we would strongly advise following VMware best practice, which recommends creating a dedicated, standalone, isolated network for vMotion rather than just enabling vMotion on an existing VMkernel port group.

vMotions could fail!

You mention the word DESIGN, but you have not DESIGNED anything; you have just ticked a box! Yes, it will likely work, but it is not a smart design, nor a recommended one.

If you find you have issues with vMotion in the future, you have the answer above.

ASKER

OK.

I believe you have answered questions 1-2, and now I would like to elaborate on question 3, which is about a better design.

Questions:

1. Will this design with 3 vSwitches work for vMotion and High Availability between the 3 ESXi hosts?

Answer: Yes, but it is not VMware best practice.

2. Is there anything wrong with the above design?

Answer: It is not VMware best practice; move forward at your own risk.

3. In a perfect world, for a small company that has 22 virtual servers (3 ESXi hosts), how can I best design this virtual environment?

If I understand your suggestions correctly, best practice is to do the following:

- Create a separate, fully routable VLAN with its own IP address scheme. For example, VLan-2 with 10.50.50.XX/24.

- Create a separate vSwitch and move vmnics around so there are 2 vmnics on each vSwitch:

* 2 x vmnics on vSwitch0 "VM Network" (VLan-1)

* 2 x vmnics on vSwitch1 "Production" (VLan-1)

* 2 x vmnics on vSwitch2 "Servers" (VLan-10)

* 2 x vmnics on vSwitch3 "Management Network" (VLan-2)

- Move the "Management Network" port group from vSwitch0 to the new vSwitch3.

- Then enable (tick) the vMotion check box.

- Then make the same VMware networking configuration changes on all of the ESXi hosts.

- Then test, test and test some more during a maintenance window.
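The proposed layout above can be tallied to see what it costs in physical ports and how the VLANs end up distributed. A small Python sketch; the only assumption beyond the list is that every vmnic is cabled to its own physical switch port.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# The proposed layout from the list above: vSwitch -> (VLAN, number of vmnics).
proposed: Dict[str, Tuple[str, int]] = {
    'vSwitch0 "VM Network"': ("VLan-1", 2),
    'vSwitch1 "Production"': ("VLan-1", 2),
    'vSwitch2 "Servers"': ("VLan-10", 2),
    'vSwitch3 "Management Network"': ("VLan-2", 2),
}
HOSTS = 3

vmnics_per_host = sum(n for _, n in proposed.values())
# Assumption: every vmnic is cabled to its own physical switch port.
switch_ports_needed = vmnics_per_host * HOSTS

# VLANs that end up spread over more than one vSwitch.
by_vlan: Dict[str, List[str]] = defaultdict(list)
for name, (vlan, _) in proposed.items():
    by_vlan[vlan].append(name)
split_vlans = {vlan: names for vlan, names in by_vlan.items() if len(names) > 1}

print(vmnics_per_host, switch_ports_needed)  # → 8 24
print(split_vlans)
```

With the vMotion box ticked on the Management Network port on vSwitch3, vMotion traffic would still share that vSwitch and its two vmnics with management traffic.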

I want to make sure I understand your suggestions and what is considered best practice. If this is not it, I would like to know where I misunderstood.


We would not do any of the above.

We would define at least:

- Management Network vSwitch: 2 NICs (whether you VLAN it is entirely up to you)

- Virtual Machine vSwitch: 4 NICs, TRUNK carrying all the VLANs you need (VLAN 1 and VLAN 10)

- vMotion vSwitch: 2 NICs, with a non-routable IP address

Of course, the above could be split using VLANs.

All traffic on the vMotion vSwitch is just that, vMotion, and no other traffic! With your plan you are still not creating an isolated network for vMotion.
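The suggested minimum layout can be written down as data and checked for the property being stressed here: the vSwitch carrying vMotion carries nothing else. A Python sketch; the vSwitch and port-group names are hypothetical, the NIC counts and VLANs come from the list above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PortGroup:
    name: str
    role: str                   # "management", "vm" or "vmotion"
    vlan: Optional[int] = None  # None = no VLAN recorded for this port group


@dataclass
class VSwitch:
    name: str
    vmnics: int
    port_groups: List[PortGroup]


# The suggested minimum layout; vSwitch and port-group names are hypothetical.
design = [
    VSwitch("vSwitch0", 2, [PortGroup("Management Network", "management")]),
    VSwitch("vSwitch1", 4, [PortGroup("VLAN1-VMs", "vm", 1), PortGroup("VLAN10-VMs", "vm", 10)]),
    VSwitch("vSwitch2", 2, [PortGroup("vMotion", "vmotion")]),
]


def vmotion_isolated(switches: List[VSwitch]) -> bool:
    """True if every vSwitch that carries vMotion carries nothing else."""
    for sw in switches:
        roles = {pg.role for pg in sw.port_groups}
        if "vmotion" in roles and roles != {"vmotion"}:
            return False
    return True


def trunked_vm_vlans(switches: List[VSwitch]) -> List[int]:
    """VLAN IDs carried for virtual machines across all vSwitches."""
    return sorted(pg.vlan for sw in switches for pg in sw.port_groups
                  if pg.role == "vm" and pg.vlan is not None)


print(sum(sw.vmnics for sw in design))  # → 8
print(vmotion_isolated(design))          # → True
print(trunked_vm_vlans(design))          # → [1, 10]
```

The total is the same 8 vmnics per host as in the four-vSwitch proposal; the difference is how they are allocated.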

How is your SAN connected here?


ASKER

Regarding the new SAN: we just ordered it and it has not arrived yet, but according to the invoice it will use 10GBase-T iSCSI ports. It also has 4 x 16Gb FC (Fibre Channel) ports (for future expansion).

So, getting back to the question: I do not need to create a new IP-based VLAN on our Ethernet backbone to use vMotion correctly. But the connections used for vMotion should be on their own dedicated vSwitch, and somehow connected to the other ESXi hosts through a vSwitch that is likewise configured and used only for vMotion. Correct?
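Whichever way vMotion ends up isolated (a dedicated vSwitch and physical switch, or its own VLAN), it is generally advisable that its subnet does not overlap the existing routed subnets, since a host with two VMkernel interfaces in overlapping subnets may send traffic out of the wrong one. The real VLan-1 and VLan-10 addresses are masked in the thread, so the subnets below are hypothetical stand-ins.

```python
import ipaddress
from typing import List


def overlapping(candidate: str, existing: List[str]) -> List[str]:
    """Return the existing subnets that overlap the candidate vMotion subnet."""
    cand = ipaddress.ip_network(candidate)
    return [net for net in existing if cand.overlaps(ipaddress.ip_network(net))]


# Hypothetical stand-ins for VLan-1 and VLan-10 (the real addresses are masked as XXX).
existing_vlans = ["172.16.1.0/24", "10.10.10.0/24"]

print(overlapping("192.168.50.0/24", existing_vlans))  # → []
print(overlapping("10.10.0.0/16", existing_vlans))     # → ['10.10.10.0/24']
```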

The Management Network should be on a vSwitch separate from the one used for vMotion, and this management vSwitch should not have any virtual servers connected to it either. Also, the management vSwitch should have a minimum of 2 vmnics.

The other vSwitch(es) may have as many virtual servers on them as we want; again, make sure each of the other vSwitches has at least 2 vmnics. Am I getting closer to understanding?


ASKER CERTIFIED SOLUTION


ASKER

The SAN vendor/reseller is responsible for installing the SAN and configuring how it will connect to the ESXi hosts and the existing network. I will check with them to make sure everyone is on the same page, but it is good to have these specifics understood and discussed before the installation date.

We have ordered 8 x 10GBase-T iSCSI ports. It sounds as if we have enough for 3 separate ESXi hosts and for the dual controllers on the SAN, but I will check with the vendor.
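Whether 8 ports is "enough" depends on which end they sit on, which the invoice wording leaves open. A small arithmetic sketch; the 2 iSCSI ports per host and the per-controller counts are assumptions chosen for redundancy, not figures from the thread.

```python
from typing import Tuple


def iscsi_ports(hosts: int, per_host: int, controllers: int, per_controller: int) -> Tuple[int, int]:
    """Return (host-side ports, SAN-side ports) needed for a given layout."""
    return hosts * per_host, controllers * per_controller


# Scenario A (assumed): the 8 ordered ports are on the SAN, 4 per controller.
host_side, san_side = iscsi_ports(hosts=3, per_host=2, controllers=2, per_controller=4)
print(host_side, san_side)  # → 6 8  (SAN side covered; hosts still need 6 ports of their own)

# Scenario B (assumed): the same 8 ports must cover both ends, 2 per host and 2 per controller.
host_side, san_side = iscsi_ports(hosts=3, per_host=2, controllers=2, per_controller=2)
print(host_side + san_side, "needed vs 8 ordered")  # → 10 needed vs 8 ordered
```

If the hosts and the SAN meet through a physical switch rather than directly, the switch ports need counting too.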

It sounds as if the iSCSI connections will be used to connect the ESXi hosts to the SAN, not the vmnics, and the Ethernet connections (vmnics) may be used for the management network. What do you think? What would be a good design for the iSCSI connections between the SAN and the ESXi hosts?
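As I understand ESXi iSCSI, the host side of those connections is still a vmnic: a software or dependent hardware iSCSI adapter reaches the SAN through VMkernel ports on a vSwitch. When iSCSI port binding is used, VMware requires each bound VMkernel port to have exactly one active uplink and no standby uplinks. A minimal Python check of that rule, with hypothetical vmk/vmnic names; the "separate vmnic per path" check is a design choice for redundancy, not a binding requirement.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IscsiVmk:
    name: str
    active: List[str]                                  # active uplinks (vmnics)
    standby: List[str] = field(default_factory=list)   # standby uplinks


def port_binding_problems(vmks: List[IscsiVmk]) -> List[str]:
    problems = []
    for vmk in vmks:
        if len(vmk.active) != 1:
            problems.append(f"{vmk.name}: {len(vmk.active)} active uplinks, port binding needs exactly 1")
        if vmk.standby:
            problems.append(f"{vmk.name}: standby uplinks {vmk.standby} should be set to unused")
    # Design choice (not a binding rule): give each iSCSI path its own vmnic.
    actives = [u for vmk in vmks for u in vmk.active]
    if len(actives) != len(set(actives)):
        problems.append("two iSCSI VMkernel ports share an active vmnic")
    return problems


# Two iSCSI paths per host, each pinned to its own 10GbE vmnic (names hypothetical).
good = [IscsiVmk("vmk2", ["vmnic6"]), IscsiVmk("vmk3", ["vmnic7"])]
bad = [IscsiVmk("vmk2", ["vmnic6"], ["vmnic7"]), IscsiVmk("vmk3", ["vmnic6"])]
print(port_binding_problems(good))  # → []
print(port_binding_problems(bad))
```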


SOLUTION


ASKER

At my previous workplace we used a Fibre Channel switch and fibre connections for the 2 x ESXi hosts and the SAN controllers.

The ESXi hosts were connected to the network with one 1 Gb Ethernet connection each, so the setup was different. Thanks for the background information.


It may work as is; otherwise, you have to enable vMotion on a VMkernel port group.

See 1 above.

Re-design vSwitch1 and vSwitch2: the 6 network interfaces for virtual machines can be reduced to 4, leaving 2 network interfaces for vMotion, which is recommended to be on its own (isolated) network.

Or embrace VLANs, and use VLANs for everything, including a new VLAN for vMotion.
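With the "VLANs for everything" option, separation moves from vSwitches to VLAN IDs on port groups, so the key property becomes that no other port group shares the vMotion VLAN. A Python sketch; VLAN 20 for vMotion is a hypothetical choice, and the other IDs follow the thread's VLan-1 and VLan-10.

```python
from typing import Dict

# Port group -> VLAN ID on one trunked vSwitch (vMotion VLAN 20 is hypothetical).
port_groups: Dict[str, int] = {
    "Management Network": 1,
    "Production": 1,
    "Servers": 10,
    "vMotion": 20,
}


def vmotion_vlan_dedicated(pgs: Dict[str, int], vmotion: str = "vMotion") -> bool:
    """True if no other port group uses the vMotion port group's VLAN ID."""
    vid = pgs[vmotion]
    return all(v != vid for name, v in pgs.items() if name != vmotion)


def ids_in_range(pgs: Dict[str, int]) -> bool:
    """802.1Q VLAN IDs usable for tagging are 1-4094."""
    return all(1 <= v <= 4094 for v in pgs.values())


print(vmotion_vlan_dedicated(port_groups), ids_in_range(port_groups))  # → True True
```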

And then there is the SAN: does it require networking?

What network is going to be used for the SAN?