PHP


The problem that I'm having is that I can't seem to download the entire site in one sitting. Every time I try to download the site to my local machine via FTP (using FileZilla), it doesn't index all of the files, and what I end up with is a directory that's only about 15GB in size.

Note that I've also tried creating a full backup archive of the site via the hosting account's cPanel, but that isn't working at all. I've tried starting the backup archive in the early evening, and by the next morning the ZIP file that I'm expecting to find in the site root is nowhere to be found.

I'm reluctant to experiment or waste time with any of those WordPress "Backup" or "Duplicator" plugins, as they all work in much the same way: they attempt to create giant ZIP archives, which I'm nearly certain will fail and will also hog all of the server's CPU resources in the process.

I've already contacted technical support about this problem, and they're annoyingly clueless as to why this is happening. It's almost like they're holding the site hostage or something, and this is quickly turning into an emergency situation. We need to get this site off of the shared hosting environment and set up on a VPS of some kind on another host ASAP.

Why is this happening? And what alternatives do I have besides FTP to get these files onto my local machine, or transferred directly to the other host?

Thanks,

- Yvan


Have you tried a WordPress backup plugin? Take a look at VaultPress (https://vaultpress.com).

It looks good and costs only $3.50/month. Here's how to migrate your site to a new host with it:

https://help.vaultpress.com/migrate-to-a-new-host/

Best Regards,


You could do this by SSH'ing into one of the servers and using Rsync, to sync the data from one to the other.

https://en.wikipedia.org/wiki/Rsync

...and you'll be able to preserve all the file permissions, directory structures, etc. It's really the "right" way to do this. There are even rsync GUI clients on the market if you're not comfortable with a shell prompt: https://acrosync.com/home.html
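If the old host does allow SSH and has rsync installed (an assumption; many shared plans don't), the pull can be started from the new VPS. The sketch below only assembles the rsync command in Python so that each flag can be commented; the user name, host name and both paths are hypothetical placeholders. Because rsync skips files whose size and timestamp already match, and `--partial` keeps half-finished files, simply re-running the same command after an interruption should continue where it left off, which matters when the copy can't be done "in one sitting".

```python
import shlex


def build_rsync_pull(user, host, remote_dir, local_dir, ssh_port=22,
                     dry_run=True, bwlimit_kbps=None):
    """Build an rsync command that pulls remote_dir from the old host into local_dir.

    Meant to be run on the new VPS. Nothing is executed here; the caller
    decides whether to print or run the returned argument list.
    """
    cmd = [
        "rsync",
        "-a",            # archive mode: recurse, keep permissions, timestamps, symlinks
        "-z",            # compress data in transit
        "--partial",     # keep partly transferred files so a re-run can resume them
        "--progress",    # show per-file progress
        "-e", f"ssh -p {ssh_port}",  # use SSH as the transport
    ]
    if bwlimit_kbps is not None:
        cmd.append(f"--bwlimit={bwlimit_kbps}")  # throttle bandwidth, in KiB/s
    if dry_run:
        cmd.append("--dry-run")  # list what would be copied, copy nothing
    # The trailing slash on the source copies the directory's contents,
    # not the directory itself, into local_dir.
    cmd += [f"{user}@{host}:{remote_dir.rstrip('/')}/", local_dir]
    return cmd


if __name__ == "__main__":
    # Hypothetical account, host and paths; replace with your own.
    cmd = build_rsync_pull("siteuser", "old-host.example.com",
                           "public_html/wp-content/uploads",
                           "/var/www/html/wp-content/uploads")
    print(shlex.join(cmd))
    # To actually run it: import subprocess; subprocess.run(cmd, check=True)
```

Running it first with the default `dry_run=True` shows what would be transferred; pass `dry_run=False` for the real copy. The optional `bwlimit_kbps` maps to rsync's `--bwlimit` and may help if the shared host complains about load.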

Alternatively you could use an FTP client that supports FXP (assuming both hosts also support it):

https://en.wikipedia.org/wiki/File_eXchange_Protocol

...however FXP has been known to be a security issue, so there's a chance your host doesn't allow it.


To avoid a single oversized ZIP file that fails to build, you could copy your website in chunks, then restore them all at the other host.

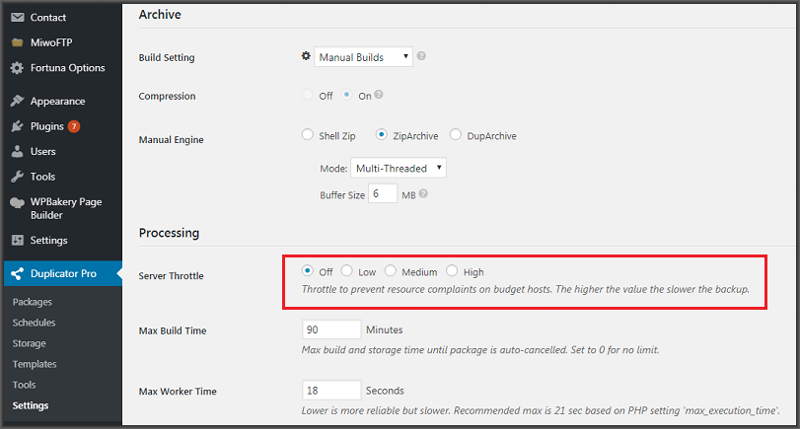
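As a do-it-yourself version of the same chunking idea (this is not Duplicator Pro's code, just an illustration), a script could split the site into several modest tar files instead of one giant ZIP. This sketch assumes you can run Python 3 on the old host, for example over SSH, which may not be possible on every shared plan. It writes uncompressed tar files to keep CPU use down. `plan_chunks` and `write_chunks` are hypothetical helper names, and `out_dir` should be kept outside `root` so a later run doesn't archive earlier output.

```python
import os
import tarfile


def plan_chunks(root, max_chunk_bytes):
    """Group files under root into chunks whose total size stays under max_chunk_bytes.

    A single file larger than the limit gets a chunk of its own.
    Returns a list of lists of paths relative to root.
    Symlinked directories are not followed.
    """
    chunks, current, current_size = [], [], 0
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # walk subdirectories in a stable order
        for name in sorted(filenames):
            full = os.path.join(dirpath, name)
            size = os.lstat(full).st_size  # lstat: don't follow (possibly broken) symlinks
            if current and current_size + size > max_chunk_bytes:
                chunks.append(current)  # close the current chunk before it overflows
                current, current_size = [], 0
            current.append(os.path.relpath(full, root))
            current_size += size
    if current:
        chunks.append(current)
    return chunks


def write_chunks(root, chunks, out_dir, prefix="site-part"):
    """Write each chunk as an uncompressed .tar file; returns the archive paths."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for i, chunk in enumerate(chunks, start=1):
        path = os.path.join(out_dir, f"{prefix}-{i:04d}.tar")
        with tarfile.open(path, "w") as tar:  # "w" = plain tar, no gzip
            for rel in chunk:
                tar.add(os.path.join(root, rel), arcname=rel)
        paths.append(path)
    return paths
```

For example, `write_chunks("public_html", plan_chunks("public_html", 2 * 1024**3), "/home/siteuser/parts")` would aim for parts of roughly 2 GiB each (the paths are placeholders). Each part can then be downloaded, and retried, independently, and unpacked with `tar -xf` on the new host.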

As for this problem: "and will also hog all of the server's CPU's resources in the process"

It can be avoided by using Duplicator Pro's throttling option to limit how much of the server's resources it uses.

Hope that's helpful.

Regards, Andrew

It seems like you have already tried everything.

I used to have a very big website, then I checked why it was so big...

You probably don't need all 40 GB of files.

If I were you, I would list all my files by size and delete the unnecessary ones. You may be surprised how many of the old files you don't actually need to keep.

Also, try resizing the image files with PHP.

I would also recommend downloading all the big video files and putting them on your own disk or another server, so that you can delete them before the backup and lighten the backup process.
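A quick way to do that size audit, assuming Python 3 is available wherever the files live (the old host over SSH, or a partial local copy): walk the tree, record each file's size and keep the largest. The optional `extensions` filter covers the video-file suggestion; `largest_files` and `human` are hypothetical helper names, and the extensions in the docstring are only examples.

```python
import heapq
import os
import sys


def largest_files(root, top_n=20, extensions=None):
    """Return up to top_n (size_bytes, path) tuples for the biggest files under root.

    extensions: optional tuple such as (".mp4", ".mov") to restrict the search,
    e.g. to video files. Symlinks are skipped so nothing is counted twice.
    """
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if extensions and not name.lower().endswith(extensions):
                continue
            full = os.path.join(dirpath, name)
            if os.path.islink(full):
                continue
            try:
                found.append((os.path.getsize(full), full))
            except OSError:
                continue  # file vanished or is unreadable; skip it
    return heapq.nlargest(top_n, found)  # biggest first


def human(n):
    """Format a byte count using 1024-based units."""
    for unit in ("B", "KB", "MB", "GB"):
        if n < 1024:
            return f"{n:.1f} {unit}"
        n /= 1024
    return f"{n:.1f} TB"


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for size, path in largest_files(root):
        print(f"{human(size):>10}  {path}")
```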

-- Yvan


I think what I'm going to need to do is set up a VPS on another host and somehow use its shell access to download the data from the current shared hosting environment onto it, preferably without having to first create one or more ZIP files.

I believe I can use "wget" on the command line to do this, although I'm not sure of the specific syntax to retrieve an entire directory tree with all of its subfolders and contents. For example, what's the correct way to do something like this?

wget https://mydomain.com/wp-content/uploads/*

(Where "*" represents all folders, subfolders and files)
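On the wget question: wget only expands wildcards for FTP URLs. Over HTTP the `*` is not expanded, and recursion only works by following links the web server returns (e.g. when directory listings are enabled). Over FTP, wget can list directories itself, so `--mirror` can walk the whole tree. The sketch below builds such a command in Python with each flag commented. The host, user and path are hypothetical, and it assumes the same FTP account FileZilla uses can also be reached from the VPS. Since `--mirror` turns on timestamping, re-running after an interruption should skip files that are already up to date.

```python
import shlex


def build_wget_ftp_mirror(host, user, remote_dir, cut_dirs=0):
    """Build a wget command that recursively mirrors remote_dir over FTP.

    remote_dir is relative to the FTP account's login directory,
    e.g. "public_html/wp-content/uploads".
    """
    url = f"ftp://{host}/{remote_dir.strip('/')}/"
    return [
        "wget",
        "--mirror",               # same as -r -N -l inf --no-remove-listing
        "--no-parent",            # never climb above remote_dir
        "--no-host-directories",  # don't create a top-level folder named after the host
        f"--cut-dirs={cut_dirs}", # drop this many leading path components locally
        f"--ftp-user={user}",
        "--ask-password",         # prompt for the password instead of putting it on the command line
        url,
    ]


if __name__ == "__main__":
    cmd = build_wget_ftp_mirror("ftp.example.com", "siteuser",
                                "public_html/wp-content/uploads", cut_dirs=2)
    print(shlex.join(cmd))
```

With `cut_dirs=2` and that `remote_dir`, the `public_html/wp-content` prefix is dropped, so the files should land under `./uploads/` in the directory where wget is run.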

Thanks,

- Yvan


Lucas, I really wish it were that simple. I've already asked them about this, and they've made it very clear that they refuse to cooperate in this regard. So as much as I agree with you, it simply isn't an option.

Shaun, I saw no indication that I'd be able to stream the data directly from one server to the other. It appears that I'd first need to create multiple 400GB zip files, and only once those files have been created would I be able to transfer them anywhere. What's more, I was recently forced to permanently disable CRON in my wp-config.php file, using "define('DISABLE_WP_CRON',

As best as I can tell, I'm going to have to ask the new web hosting provider for technical assistance in retrieving the contents of our uploads folder and transferring them to the new VPS, using whatever means necessary.

Thanks again for your help.

- Yvan
