Hyper-V Manager Console Slow to Connect to VMs

Hello,

I recently deployed a new server running Windows Server 2016 with the Hyper-V role installed and 6 virtual machines. The issue I am noticing occurs when connecting to a VM through the console in Hyper-V Manager. When I first try to connect to any VM, it usually takes about 10 seconds to connect and display the screen, but any subsequent attempts to connect to any virtual machine, including the first one that had the delay, are almost instant. It actually stays this way for a while, and every attempt to connect to any of the VMs is very fast. I basically have to go around 10-20 minutes without connecting to any of them before the delay appears again, and again only on the first attempt to connect to the console. It behaves as if it has to cache certain things before it loads completely.

As for troubleshooting, I have disabled VMQs (Virtual Machine Queues) and Large Send Offload on all network adapters, which did seem to reduce the delay a bit, but did not fix the initial console connection delay. RDP sessions do not experience this problem, and network ping times and data transfers are completely fine as well.

In all honesty, the delay isn't horrendous, but I am curious as to why it would do it only when I first connect to a VM.

Any information is appreciated.

Thank you

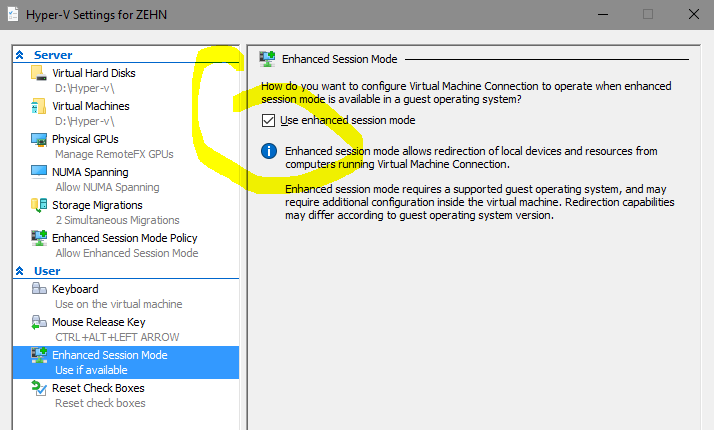

This is a Hyper-V internal problem. There have been connection problems here and there with the Hyper-V console for as long as I have known Windows 10. Enhanced session mode is the culprit; I have never seen any problems without it. See whether it still happens when you turn off enhanced session mode.

->Uncheck the depicted box.

ASKER

I just disabled Enhanced Session Mode but unfortunately the same delay behavior was noticed as before. That setting didn't seem to make a difference.

OK... do you have a clean 2016 Hyper-V host, and does the same thing happen there? Maybe it is just a problem with this single server. It should be investigated a little further.

ASKER

I don't have another server with a similar setup to test unfortunately. I do have an SMTP gateway that is running Hyper-V Server 2016 that doesn't experience the issue, but it's really not a great comparison considering they are drastically different setups/hardware. I'll definitely have to troubleshoot other areas it seems.

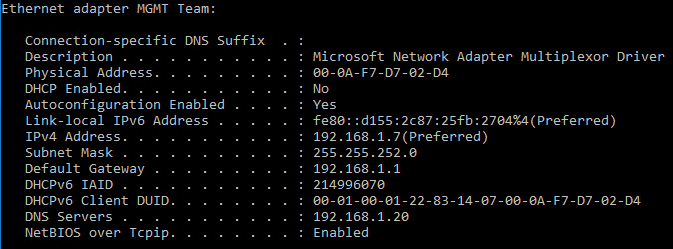

A slow console start can indicate either a network issue or a DNS issue. Where does DNS0/1 on the Hyper-V host point? Please post the results of:

IPConfig /ALL

ASKER

I only have one DC/DNS server on the network and all static settings are defined on the Hyper-V host. And as I mentioned before, the delay only happens on the very first attempt to connect to a VM. Any subsequent attempts will never experience the issue until I have closed all console sessions and waited for a while, around 10-20 minutes or so.

Is the .20 IP address shown for DNS0 correct?

If so, then check DNS Management on the DC to make sure that the IP addresses assigned for the host and guests are correct.

The initial pause to me indicates a DNS issue and once the first resolution happens the rest are quick until the local cache expires.
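The DNS-cache theory can be tested from the Hyper-V host itself by timing several consecutive name lookups. Below is a minimal Python sketch, assuming Python is available on the host; `getaddrinfo` goes through the operating system's resolver, so any OS-level DNS cache should apply to it.

```python
import socket
import time


def time_lookups(hostname, attempts=3):
    """Time `attempts` consecutive name lookups; return seconds per attempt."""
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            # Resolves through the OS resolver, so the local DNS cache is used.
            socket.getaddrinfo(hostname, None)
        except socket.gaierror:
            # A failed lookup still tells us how long resolution took.
            pass
        timings.append(time.perf_counter() - start)
    return timings
```

For example, run `ipconfig /flushdns` first, then call `time_lookups("hv-guest01")`, replacing the hypothetical `hv-guest01` with a real host or guest name. A first timing of several seconds followed by near-zero timings would support the DNS explanation; uniformly fast timings point elsewhere.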

Please post the following from the DC:

IPConfig /All

ASKER

Thank you for the suggestion, Philip. However, I believe I may have stumbled upon something that may have been the culprit. This particular server has onboard Intel NICs as well as a Broadcom network card. When I first set up the virtual switches, I teamed port 1 on both the Intel and Broadcom NICs for the management network, and then port 2 on both NICs for the virtual machine network, in an attempt to get further redundancy. I realized this may not have been the best approach since they are entirely different chipsets, and maybe that was causing the odd delays. As a test, I changed the virtual switches so the Intel NIC handles only the management traffic and the Broadcom NIC handles only the virtual machine traffic. Ever since making that change, I have not noticed the delay, even after waiting over an hour before connecting via the console. Usually the issue would show itself after just 10-20 minutes, so I am hopeful this was the issue.

I will continue to monitor this for a little while and report back once I am confident it fixed the issue.

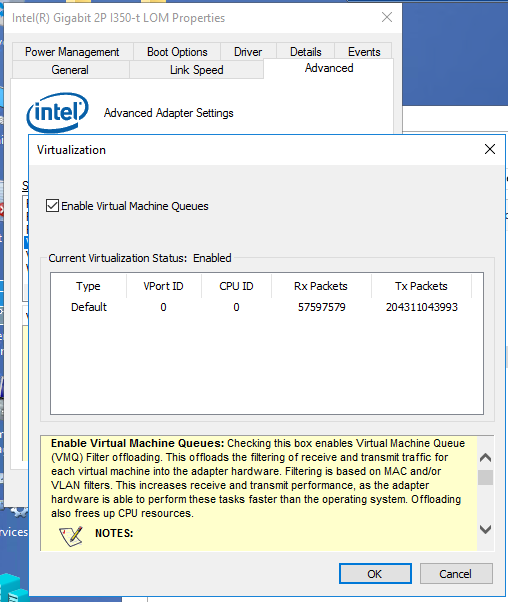

Update the Broadcom driver set, then make sure to turn off Virtual Machine Queues for each physical port on the Broadcom NIC. There should not be a problem with having different NIC vendors' products in a Net LBFO team. That was the whole purpose behind native teaming.

I have two very thorough EE articles on all things Hyper-V:

Some Hyper-V Hardware and Software Best Practices

Practical Hyper-V Performance Expectations

ASKER

I will try that and see what happens. Do you also suggest turning off Large Send Offload on the physical ports as well?

We normally don't touch any of the extraneous settings associated with network adapters. In the early days we needed to because the whole setup was just plain buggy. But, we've not run into any network related issues short of Broadcom's belligerence around not following the RFCs regarding VMQ and Gigabit.

ASKER

Philip, what are your thoughts on the VMQ setting under the virtual team interface? Should VMQ be disabled there as well since a physical Broadcom port is assigned to it or should it only be disabled under each physical port?

We only disable at the physical port/driver level. Nothing in the host OS gets touched.

ASKER

Good to know. What about VMQ on the Intel ports? Since an Intel port and Broadcom port are assigned to the same virtual interface should VMQ be disabled on the Intel ports as well?

Intel respects the RFCs. VMQ is never enabled on their Gigabit NIC ports.

ASKER

That's interesting because under the Intel NIC properties on this server it looks like VMQ's are indeed enabled by default. I haven't touched any of these settings myself so it would have been enabled from the factory. Would it be best to disable this on the Intel NIC's so both the Broadcom and Intel ports all have it disabled?

ASKER

Just wanted to give an update on this. After updating both the Broadcom and Intel drivers to the latest version and then disabling VMQs on all ports, the issue is still present. On the first console connection to a virtual machine it takes around 10 seconds to display, and any subsequent connections are very quick. I have set all static DNS settings to the correct IP addresses, so I know it's not a DNS issue. At this time I am still not sure what is causing the initial delay.

Take some old hardware, install 2016 Hyper-V and test with one test machine. That is much quicker than speculation.

It could be that you are not doing anything wrong.

ASKER CERTIFIED SOLUTION

ASKER

After monitoring this, I can confirm that when you use VM Connect on Hyper-V, it tries to contact a Microsoft server on the internet the first time you connect. If the Hyper-V server has a live internet connection, the console connects immediately, but if internet access is blocked, there is a delay of about 15 seconds on the first connection.

Another thing I have noticed is that even if you give the server internet access and let VM Connect reach Microsoft online, it seems to cache the certificate data for a while, even days, but it will eventually go back to causing a delay once internet access is removed.

I have not tried creating a self-signed certificate, as the article suggests, to see if that solves the problem, but I'm not that concerned with the delay now that I know what is causing it. I may just leave it alone unless I find a need to go down that road in the future.

Thanks again for the suggestions in this case.

Your research results are a bit weird, since it doesn't happen on my Server 2016 Hyper-V hosts (two of them), and you can be sure they don't have internet access.

ASKER

All I can say is that this is the behavior I have observed for the last few days. I have even sniffed the outbound packets to the internet, and when the delay happens you can see it trying to communicate with Microsoft public IPs (131.253.61.80 or 131.253.61.100 the last time I tried). I am also running Server 2016 Standard with the Hyper-V role installed. This issue is only observed when using VM Connect directly on the server itself, not through MMC.
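To check whether outbound traffic to those addresses is being dropped or refused, you can time a plain TCP connection attempt from the host. Below is a minimal Python sketch; the two addresses come from the packet capture above, while port 80 and the 5-second timeout are assumptions, not details from the capture.

```python
import socket
import time

# Addresses reported in the packet capture; port 80 is an assumption.
OBSERVED_HOSTS = ("131.253.61.80", "131.253.61.100")


def probe(host, port=80, timeout=5.0):
    """Attempt one TCP connection; return (reachable, seconds_elapsed)."""
    start = time.perf_counter()
    try:
        # create_connection raises OSError (timeouts included) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            reachable = True
    except OSError:
        reachable = False
    return reachable, time.perf_counter() - start


def probe_all(hosts=OBSERVED_HOSTS, port=80, timeout=5.0):
    """Probe each host in turn and return {host: (reachable, seconds)}."""
    return {host: probe(host, port, timeout) for host in hosts}
```

A `False` result that takes roughly the full timeout suggests a firewall is silently dropping the packets, which would fit a fixed wait on the first VM Connect launch; a fast `False` means the connection is being actively rejected.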

Ah, that is a difference, after all. I use mmc (RSAT) on my client to manage and connect to the VMs.

ASKER

Out of curiosity, are you running Hyper-V Server 2016 (free hypervisor), or Server 2016 Standard with the Hyper-V role?

Both are the free hypervisor, but I doubt that makes a difference.

ASKER

There were great suggestions as to what would be causing the issue but in the end I figured out the fix myself by researching other Microsoft blogs.