Server 2012 R2 Pagefile.sys Size

We have a Windows Server 2012 R2 system that keeps running out of hard drive space on C:. This is preventing users from printing, and it can also cause other issues, such as updates failing to install.

I noticed that pagefile.sys was just over 24 GB in size. The server has 24 GB of RAM installed. Is that pagefile extra large, or is that fairly normal? It was set to "System managed size", and I just manually changed it to a maximum of 22 GB and restarted.

Windows picked that size because it matches the amount of memory you have. That is normal practice, although you can likely get by with a smaller pagefile. We use System Managed Size, as that works fine on our servers.

Running out of space on C: on a Windows server is not uncommon. It's typically the result of not allowing enough space on C: when initially setting up the server. You'll find lots of guides online about how to minimize the use of C: if you search around.

"This is preventing users from printing": this is a good place to start. Stop the print spooler service, then go to Devices and Printers. Click on Server Properties (you may have to click on a network printer for that to show up), then the Advanced tab. You'll find the setting in there for the location of the print spool files. Create a folder on another drive (one that has plenty of space) and point the setting to it. Restart the print spooler.

I'd also run TreeSize (free version from jam-software.com) to see what else is taking up space on C:. Quite often you'll find things that can be moved or deleted.

In answer to your question about the swap file, I'd look at how much is actually being used. You may well be able to reduce it further without significant penalty.
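
To make the "how much is actually being used" check concrete, here is a minimal Python sketch. It assumes you have already read the allocated size and the peak usage of the pagefile in MB (for example from the `AllocatedBaseSize` and `PeakUsage` properties of the `Win32_PageFileUsage` WMI class); the function name and the sample numbers are hypothetical, not measurements from this server.

```python
def pagefile_headroom(allocated_mb, peak_usage_mb):
    """Return (unused_mb, peak_fraction) for a pagefile.

    allocated_mb  -- current size of the pagefile in MB
    peak_usage_mb -- highest usage observed since boot, in MB
    """
    if allocated_mb <= 0:
        raise ValueError("allocated_mb must be positive")
    if peak_usage_mb < 0:
        raise ValueError("peak_usage_mb cannot be negative")
    unused_mb = allocated_mb - peak_usage_mb
    peak_fraction = peak_usage_mb / allocated_mb
    return unused_mb, peak_fraction


# Hypothetical example: a 24 GB pagefile that peaked at 6 GB of use.
unused, fraction = pagefile_headroom(24 * 1024, 6 * 1024)
print(f"Peak use was {fraction:.0%} of the pagefile; {unused} MB was never touched.")
```

If the peak stays low over a representative period (including month-end jobs, backups and so on), that is a reasonable hint that a smaller fixed size would not hurt, but it is only a hint.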

ASKER

I forgot to add that I did run WinDirStat, and the biggest item was the pagefile; the second biggest was the WinSxS folder. I tried running some DISM commands to clean up the component store but got error 112, which indicates a lack of disk space. My main question was about the pagefile. I figured it defaulted to that size since it equaled the RAM, but I've got to say Windows is doing a crap job of managing the size if it unnecessarily fills up the whole drive.

You might also run Disk Cleanup (Admin tools) and select the option to Clean up System Files.

How much disk space is allocated to drive C:? On a machine with lots of total disk space, we normally allocate 300 GB for the OS to avoid issues in the future.

Follow up: WinSxS should not be managed or cleaned up manually. It is needed for Windows operation.

Use this software to find out which files or folders are consuming the space. If the large files are not system files, you can move them to the D: drive or another drive.

You can clear temporary files as well.

https://www.jam-software.com/treesize_free/
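
If you can't install a tool on the server, a rough scripted equivalent of what TreeSize or WinDirStat report can be sketched in Python. This is only an illustration using the standard library; the function names are my own, and the totals will be approximate (unreadable files are skipped, and hard-linked files, which WinSxS is full of, get counted once per link, so that folder looks bigger than it really is).

```python
import os


def tree_size(path):
    """Sum the sizes of all files below path, skipping anything unreadable."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            try:
                total += os.lstat(os.path.join(dirpath, name)).st_size
            except OSError:
                pass  # locked or permission-denied file: ignore it
    return total


def largest_entries(root, count=10):
    """Return the `count` largest immediate children of root as (name, bytes)."""
    sizes = []
    with os.scandir(root) as entries:
        for entry in entries:
            try:
                if entry.is_dir(follow_symlinks=False):
                    sizes.append((entry.name, tree_size(entry.path)))
                else:
                    size = entry.stat(follow_symlinks=False).st_size
                    sizes.append((entry.name, size))
            except OSError:
                continue  # entry vanished or cannot be read
    sizes.sort(key=lambda item: item[1], reverse=True)
    return sizes[:count]
```

Calling `largest_entries("C:\\")` from an elevated prompt should list the biggest top-level folders and files; run it again on whichever folder comes out on top to drill down.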

**shakes fist at the sky**

Damn you, WinSxS folder!

I had the same problem on my home machine. As much as it sucks to keep all that space tied up, I have to agree with John in that my research at the time determined that it was unwise to manually clean up space in that folder.

If any services are stopped, you can compress the C: drive (which is not recommended). I did it to reduce the service outage/downtime.

When you get enough space you can uncompress.

FYI. Compression will reduce system performance.

ASKER

Right now, C: has 128 GB allocated to it, which I know is the bare minimum. For the record, I didn't set this up!

Yes, I know better than to delete stuff from WinSxS.

I will try moving the print spool location and see if that helps.

Traditional wisdom in Windows was to always have 1 to 1 1/2 times the size of RAM dedicated to the page file. The reason for this was that in the event of a system crash, Windows could write a memory dump that you could use after the fact for troubleshooting. You can make the call as to whether or not running with a smaller-than-RAM pagefile is worth the risk to you. I personally don't run a pagefile on my home computer because I'm not planning on debugging my computer if it crashes. But I would think that it would be more important in a server environment.
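
As a back-of-the-envelope check on the sizing rule above, the arithmetic can be sketched in Python. The 257 MB overhead for a complete memory dump (1 MB of header plus up to 256 MB of secondary driver data) is the figure I recall from Microsoft's page file guidance, so treat it as an assumption to verify against the current documentation; the function names are hypothetical.

```python
# Assumed overhead for a complete memory dump: 1 MB header + 256 MB driver data.
COMPLETE_DUMP_OVERHEAD_MB = 257


def complete_dump_pagefile_mb(ram_mb):
    """Minimum pagefile (in MB) able to back a complete memory dump."""
    return ram_mb + COMPLETE_DUMP_OVERHEAD_MB


def traditional_range_mb(ram_mb):
    """The old rule of thumb: 1x to 1.5x RAM, returned as (low_mb, high_mb)."""
    return ram_mb, ram_mb * 3 // 2


ram_mb = 24 * 1024  # the 24 GB server from the question
print(complete_dump_pagefile_mb(ram_mb))  # 24833
print(traditional_range_mb(ram_mb))       # (24576, 36864)
```

On a 128 GB C: drive, either figure is a large share of the volume, which is why the dump setting is worth deciding on before picking a pagefile size.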

Agree with John. You can try switching off paging on the C: drive and enabling it on D: or E:, wherever you have space.

John makes an excellent point that I should have mentioned. The page file itself can be moved.

Depending upon the Windows Server version (2008 R2 minimum), you can run Disk Cleanup, but you must install the Windows Desktop Experience feature first.

It will purge all the old patch install files.

TL;DR

You don't need a pagefile if you purchased enough RAM for your server or allocate enough RAM for your VM.

"Traditional wisdom in Windows was to always have 1 to 1 1/2 times the size of RAM dedicated to the page file. The reason for this was that in the event of a system crash, Windows could write a memory dump that you could use after the fact for troubleshooting."

That's bunk. That's an excuse made years later. You don't need a pagefile to dump memory. When you turn off the pagefile, Windows can still dump RAM into a separate dump file if you really need it. There's a registry key you can toggle. I ran many servers without a pagefile for many years. Turn off your pagefile. You're just a sysadmin; you have no need of it if you sized your RAM correctly. You're never going to debug that dump.

/*rant about pagefile and RAM*/

That original requirement was made when Windows rode on top of DOS. RAM was expensive and Windows needed much more memory to run than DOS. 1 MB (not even GB yet) was not quite enough; 2-3 MB was needed. The real reason for the 1 to 1-1/2 times RAM rule was that Windows needed more RAM to run than a typical user had in their system. Before Windows came along, pagefiles weren't really used, because DOS originally fit in RAM. A very few early programs needed more RAM, and they ran their own swap. Windows was the reason for the need for a pagefile, and it wasn't really needed for a memory dump. It was needed because Windows needed more of the expensive RAM than most people had bought for their original DOS systems. Windows also kept growing in size, and the 1 to 1-1/2 times RAM requirement stayed for nearly a decade. <-- That's where the stupid "wisdom" came from. It wasn't about the dump file. RAM is cheap now.

Just look up the Windows system requirements of the time and compare it to typical RAM of systems of that era.

https://www.technologytips.com/windows-system-requirements/

That requirement held true until we hit around 2GB - 4GB. By this time, I kept the pagefile under 2GB, because the system did slow down appreciably when I made it 4GB or 8GB; I eventually removed it completely when 8 GB became standard. I ran a 32-bit Windows 2008 system without a swap on just 4GB RAM. My system never really got above 3GB usage unless a program was behaving badly. I know that there's an article where someone tested pagefiles and said there was no difference in performance, but he tested much later, when there was more RAM, and he wasn't as thorough in comparing multiple systems at the same time. I tested during the cusp of the transition from 1GB to 8GB RAM. During the era of 1-8GB RAM, reducing the pagefile to 1-2GB made the system faster than using 1 to 1.5 times RAM. Setting a fixed size also made it faster than letting Windows randomly fragment the disk.

I'm not a dev who had time to waste on debugging a dump file. My servers didn't run out of memory and crash. Once in a blue moon I might get an out-of-memory error, and I just diagnosed the app that was running away and killed it. By preventing disk writes, my system remained responsive enough to close the app, compared to leaving it to Windows to fill the entire disk on behalf of a runaway program. I've seen that happen; it required a hard reboot, and I saw the huge pagefile later. While a pagefile doesn't hurt during normal operation, it does kill performance if you have a runaway process.

RAM is relatively cheap now. I've run many Windows servers without a pagefile because I purchased enough RAM for the physical server or allocated enough RAM to the VM. If this is a physical server with enough RAM, you really have no need of a swap. You end up rebooting once a month anyway because of the kernel-related fixes in their monolithic, cumulative patches now. RAM is random access. It doesn't matter if programs are loaded in piecemeal and not in contiguous RAM. I save my disk space for data. I also never let Windows decide on the size of the pagefile, because it fragments the disk and slows things down. When I had to set it, I made it a fixed size, and that made the system more responsive than when Windows decided to randomly grow the pagefile.

RAM is random access. It doesn't matter if it gets fragmented. It's just like SSDs. It takes the same time to access any part of RAM or an SSD. You only care about fragmentation on a spinning disk, because of seek times from the arm movement and disk rotation.

The pagefile isn't a 1 for 1 copy of your RAM. It's stuff that becomes "unused". It's swapped out to make room for new additional program data that you need to work on now. If your swapfile is 24 GB, you either don't have enough RAM, or you have some runaway process, or you have a program that's set incorrectly to believe you have more than what you have in physical RAM. On old Linux systems, you can compile the kernel to fit in RAM and never swap. I had a system running in 32MB of RAM that had, after bootup, a disk disconnected for months and it ran just fine using only RAM. Windows can't quite do that, but you can still optimize programs to fit in RAM to reduce disk access.

/*end rant*/

"That's bunk. That's an excuse made years later." I'm not going to dispute the historical claim, as I wasn't as into computing back in the days of early Windows, but I did say "traditional wisdom" and not "best practice".

I don't think I've claimed that the pagefile is solely for the purpose of doing a memory dump; rather, I was qualifying why the size is typically suggested to be 1.5X. It's kind of hard to argue that it's not common understanding when MS' own documentation says as much:

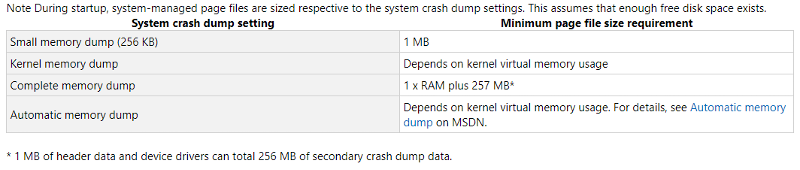

Crash dump setting: If you want a crash dump file to be created during a system crash, a page file or a dedicated dump file must exist and be large enough to back the system crash dump setting. Otherwise, a system memory dump file is not created.

I do see that their documentation says that a dedicated file can be used as well, so no contention there.

= )

Sorry, I wasn't trying to attack you in any way. I just want that completely stupid "wisdom" about 1-1.5 times RAM to completely go away. That "wisdom" is bunk. It's obsolete and was only there because of expensive RAM and bloated Windows OS that rode on top of DOS. It had nothing to do with saving dump files initially. That became an "oh, cool. we can save that for debugging later" side effect. Hardly anyone ever uses it. The dump files are generally much smaller than actual RAM.

I have not met any sysadmin yet that debugs that dump. It's only devs that might do it, and even then, only a very small segment of them might, and I don't know any of those people. Most people just delete that dump file when they find that they need more disk space. Buy enough RAM and turn off the pagefile. It saves wear and tear on your disk or SSD. It's a stupid artifact of the days of expensive RAM and isn't needed. You're just wasting disk space and prematurely wearing out your storage device with constant writes.

I'd like to add something about the memory dump files. Some of us DO use them, but not the full dumps. You can set Windows to make small dumps (64k?) that seem to have all the information that almost anyone would use for debugging.

ASKER

Thanks for all the input!

You are very welcome. Good luck with moving the pagefile.