Ahmmede yehiaabdolhameed


Need to calculate Shannon entropy

I calculated it using the formula, but I'm not sure if it's right.

(a) The random variable X that takes the values 0, 1, 2, each with probability 1/3.

(b) The random variable X that takes the values 1, 2, 3, 4, each with probability 1/4.

(c) The random variable X that takes the values in {1, . . . , 1000} uniformly at random.

(d) The random variable X that takes the values in {0, . . . , 1023} uniformly at random.
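The formula images are not reproduced here, so this assumes the standard Shannon entropy H(X) = -Σ p_i log_2 p_i, measured in bits. A minimal Python sketch that applies it to the four distributions; the function name `shannon_entropy` is hypothetical.

```python
import math

def shannon_entropy(probs):
    """Return H = -sum(p * log2(p)) in bits, skipping zero-probability outcomes."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Uniform distributions from (a)-(d): each of the N values has probability 1/N.
cases = {"a": 3, "b": 4, "c": 1000, "d": 1024}
for label, n in cases.items():
    h = shannon_entropy([1 / n] * n)
    print(f"({label}) N={n}: H = {h:.3f} bits")
```

It prints 1.585, 2.000, 9.966 and 10.000 bits for (a) to (d).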

>> using the formula

I don't see the formula you are using. Post it. Where did you get this formula?

>> but I'm not sure if its right.

What are you not sure about: the formula, or your computation when you use the formula? Clarify and post whatever you have.

ASKER

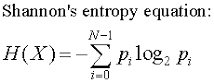

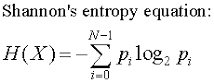

Yes, sorry, it's the Shannon entropy formula.

My answer for (a) is 1.59.

My answer for (b) is 2.

For (c) and (d), I don't know how to calculate it.

Thanks for the additional information.

Information Entropy is very different from Thermodynamic Entropy.

When all N values of X are equally likely, the formula you need reduces to log_2(N), the base-2 logarithm of the number of values.

You can calculate it using either log(N)/log(2) or ln(N)/ln(2), where log is base 10 and ln is base e.

So for (c), log(1000)/log(2) ≈ 9.966, and for (d), log(1024)/log(2) = 10.
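The change-of-base calculation can be checked in Python. A minimal sketch; the helper name `log2_change_of_base` is hypothetical, while `math.log10`, `math.log` and `math.log2` are standard library functions.

```python
import math

def log2_change_of_base(n):
    """Compute log2(n) two ways: via base-10 logs and via natural logs."""
    via_log10 = math.log10(n) / math.log10(2)
    via_ln = math.log(n) / math.log(2)
    return via_log10, via_ln

for n in (1000, 1024):
    via_log10, via_ln = log2_change_of_base(n)
    # math.log2 computes the base-2 logarithm directly, for comparison.
    print(n, round(via_log10, 3), round(via_ln, 3), round(math.log2(n), 3))
```

Both routes give about 9.966 for N = 1000 and 10 for N = 1024.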

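Note: An intuitive reading of Shannon entropy is the average number of bits needed to encode the variable. The entropy itself is not rounded, but a fixed-length code must use a whole number of bits per value, so there you round up: (a) and (b) both need 2 bits per value, and (c) and (d) both need 10.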
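Rounding up applies to the length of a fixed-length binary code, not to the entropy itself. A minimal Python sketch contrasting the two for the uniform cases (a) to (d); both function names are hypothetical.

```python
import math

def entropy_uniform(n):
    """Shannon entropy in bits of a uniform distribution over n values: log2(n)."""
    return math.log2(n)

def fixed_length_bits(n):
    """Smallest whole number of bits b with 2**b >= n, i.e. ceil(log2(n)).

    (n - 1).bit_length() computes this with exact integer arithmetic,
    avoiding floating-point rounding issues near powers of two.
    """
    return (n - 1).bit_length()

for label, n in {"a": 3, "b": 4, "c": 1000, "d": 1024}.items():
    print(f"({label}) entropy = {entropy_uniform(n):.3f} bits, "
          f"fixed-length code = {fixed_length_bits(n)} bits")
```

The entropy differs between (a) and (b), and between (c) and (d), while the fixed-length code sizes match pairwise (2 and 10 bits).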

Could you show us how you calculated the results that you said are correct?

Is this question resolved?

Do you see how to simplify the formula when the N values of X are uniformly distributed? That means that all p_i = 1/N.

Here are some log formulas that will help you.

Log Rules:

1) log(mn) = log(m) + log(n)

2) log(m/n) = log(m) – log(n)

3) log(m^n) = n · log(m)

ASKER

The first two are easy, but I can't do the 3rd and 4th.

https://ibb.co/pz4gQ9x

>> but I cant do it for the 3rd and forth

Post what you have done and tell us where you are having trouble.

Note that in (a) you have three identical terms, and in (b) you have four.

In (c) and (d), you will have 1000 and 1024 identical terms, respectively.

You should be able to simplify each of these expressions to a single -log(1/N) term.
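Assuming the formula in the images is the standard H(X) = -Σ p_i log_2 p_i, the simplification for p_i = 1/N can be written out as follows. Collapsing the N identical terms uses N · (1/N) = 1, and the last step uses log_2(1/N) = log_2(1) - log_2(N) = -log_2(N).

```latex
H(X) = -\sum_{i=1}^{N} p_i \log_2 p_i
     = -\sum_{i=1}^{N} \frac{1}{N} \log_2 \frac{1}{N}
     = -N \cdot \frac{1}{N} \log_2 \frac{1}{N}
     = -\log_2 \frac{1}{N}
     = \log_2 N
```

For (c) this gives log_2(1000) ≈ 9.966 bits, and for (d) log_2(1024) = 10 bits.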

Apply the log formulas posted above, and post your work.
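Applying the log rules to the uniform case can be sanity-checked numerically. This Python sketch uses arbitrary sample values and base 2 (the rules hold in any base); the helper name `check_log_rules` is hypothetical. It also checks the consequence that matters here: N identical terms (1/N) · log(1/N) add up to log(1/N), which is -log(N).

```python
import math

def check_log_rules(m, n):
    """Return True if the three log rules hold (base 2) for positive m, n."""
    rule1 = math.isclose(math.log2(m * n), math.log2(m) + math.log2(n))
    rule2 = math.isclose(math.log2(m / n), math.log2(m) - math.log2(n))
    rule3 = math.isclose(math.log2(m ** n), n * math.log2(m))
    return rule1 and rule2 and rule3

print(check_log_rules(8.0, 5.0))  # arbitrary sample values

# Rule 3 with exponent -1: log(1/N) = log(N ** -1) = -log(N).
# So N identical terms (1/N) * log(1/N) add up to log(1/N), i.e. -log(N).
for N in (3, 4, 1000, 1024):
    total = sum((1 / N) * math.log2(1 / N) for _ in range(N))
    print(N, math.isclose(total, -math.log2(N)))
```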

You have four different definitions of X.

Nothing here sounds anything like entropy.

Are you taking a course or reading a textbook? If so, what is the title?

Please also give a little more information on the calculation and formula that you mentioned.