Determine which record has more than 32767 characters

Hi,

I am trying to link a CSV file to my Access database file and it gives me the attached error.

How can I determine which record/s are causing this?

Thanks

Untitled.png

I am trying to link a CSV file to my Access database file and it gives me the attached error.

How can I determine which record/s are causing this?

Thanks

Untitled.png

ASKER

Hi,

See attached sample of what file looks like.

Untitled.png

Thanks

Have you checked it with a text editor like NotePad++Guess i need instructions/tips how to get to the large record as we are talking of a file with 7.5K records.

See attached sample of what file looks like.

Untitled.png

Thanks

I reckon is that the issue is "not" the length of lines but the enormous amount of columns needed to display your data.

So use my suggestion : Read Text file line by line

Get an idea what your data should be mapped and do the necessary mapping

Split function would be your invaluable assistant....as you will be able to do

strlineData(0) - strlineData(10) table 01 columns

strlineData(11) - strlineData(18) table 02 columns

................

strlineData(n-x) - strlineData(n) table nn columns

So use my suggestion : Read Text file line by line

Get an idea what your data should be mapped and do the necessary mapping

Split function would be your invaluable assistant....as you will be able to do

strlineData(0) - strlineData(10) table 01 columns

strlineData(11) - strlineData(18) table 02 columns

................

strlineData(n-x) - strlineData(n) table nn columns

Comments found on the internet regarding the same problem:

"I'm having the same problem exporting to csv file. I can import fine, but it won't let me export. I need all these columns as to duplicate a csv file for importing to another program. I'm working on developing an electronic timesheet system and I'm trying to automate as much of it as possible. If I could get access to export this file it would be ideal for the approach I'm testing right now. Nobody has come up with a way of working around this issue yet??"

Ok this is what I did to get around the issue. I went into the table design view, and for the fields that were always empty, but necessary to preserve for csv formatting reasons, I just changed the field size from 255 to 2. Until it worked fine. I would have made all empty fields this (maybe 0 would be a better idea, I never tried it. Seems that it would cause problems lol) but there were so many fields to go through I didn't bother.

Hope that might help anyone else having this issue.

I tried to change the field size to 0, but that didn't work. Changing it to 2 worked for me.

What an annoying error!

Workaround with exporting to excel, saving as csv and changing extension to .txt also worked, but then I still had to change the delimiter, because I needed | as delimiter, not ;.

"I'm having the same problem exporting to csv file. I can import fine, but it won't let me export. I need all these columns as to duplicate a csv file for importing to another program. I'm working on developing an electronic timesheet system and I'm trying to automate as much of it as possible. If I could get access to export this file it would be ideal for the approach I'm testing right now. Nobody has come up with a way of working around this issue yet??"

Ok this is what I did to get around the issue. I went into the table design view, and for the fields that were always empty, but necessary to preserve for csv formatting reasons, I just changed the field size from 255 to 2. Until it worked fine. I would have made all empty fields this (maybe 0 would be a better idea, I never tried it. Seems that it would cause problems lol) but there were so many fields to go through I didn't bother.

Hope that might help anyone else having this issue.

I tried to change the field size to 0, but that didn't work. Changing it to 2 worked for me.

What an annoying error!

Workaround with exporting to excel, saving as csv and changing extension to .txt also worked, but then I still had to change the delimiter, because I needed | as delimiter, not ;.

ASKER

The story is as follows...

We have an application designed and hosted by Caspio.com, we export data on a scheduled bases to MS Access and do some reporting from from there.

Now the export utility is failing due to one record.

By now I was able to figure out which record that is.

However I still need help determine what is wrong with that record in order to Manually fix it, as nothing unusual seems to be there.

Thanks

We have an application designed and hosted by Caspio.com, we export data on a scheduled bases to MS Access and do some reporting from from there.

Now the export utility is failing due to one record.

By now I was able to figure out which record that is.

However I still need help determine what is wrong with that record in order to Manually fix it, as nothing unusual seems to be there.

Thanks

That isn't a CSV file, it is JSON

This issue was once discussed on this site. Perhaps the solution will work for you:

The Field "XXXXX" Contains a Start Position of '32896', The Maximum Start Position allowed is 32767

Paul

The Field "XXXXX" Contains a Start Position of '32896', The Maximum Start Position allowed is 32767

Paul

ASKER

That isn't a CSV file, it is JSONWhat makes you think so, it is CSV.

Perhaps the solution will work for you:Not looking for solutions suggesting to re-fracture db for now as this is happening for one record only.

Again I'm looking for a way to figure out what is wrong with a particular record.

Thanks

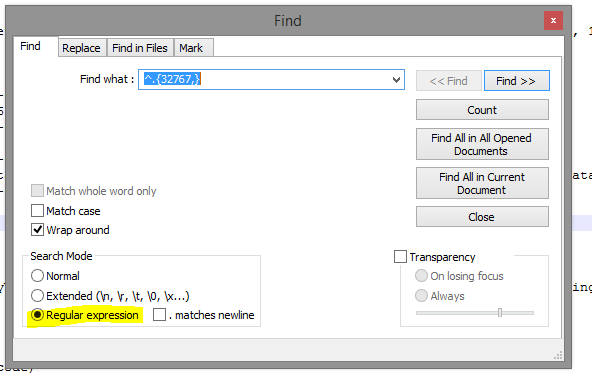

When it is a length problem, then you can use notepad++ and search by using a regular expression:

p.s. your files screenshot looks like it could be indeed JSON. All those curly brackets and quotes and key-value pairs.. You may test it by formatting it as JSON using the JSON viewer plugin.

^.{32767,}p.s. your files screenshot looks like it could be indeed JSON. All those curly brackets and quotes and key-value pairs.. You may test it by formatting it as JSON using the JSON viewer plugin.

As others mentioned its probably is a JSON file (it would be almost crazy to have a .CSV with such length)

So do use this code : https://dymeng.com/parsing-json-with-vba/

to read it and post back on your findings.

Even if you did linked the CSV there isn't an object in Access to hold 32767 individual elements (in the concept of table - fields as Access has a hard limit of 255 columns)

So do use this code : https://dymeng.com/parsing-json-with-vba/

to read it and post back on your findings.

Even if you did linked the CSV there isn't an object in Access to hold 32767 individual elements (in the concept of table - fields as Access has a hard limit of 255 columns)

What makes you think so, it is CSV.The structure of the data:

{"name":value}

is the structure of JSON objects.

I'm concerned that it isn't a well formed JSON file, since you have pairs of quote characters around the name. {""lx"":25,""ly"":55,""mx"

There should only be single quote characters.

{"lx":25,"ly":55,"mx":28,"

ASKER

will match lines with 32767 characters or more.Tested it, it didn't show the right record/s, we are looking for a record exceeding the limit not a line.

Even if you did linked the CSV there isn't an object in Access to hold 32767 individual elementsThere are no 32767 elements, there are 32767 characters in this record excluding the memo fields.

The structure of the data:Actually you right about that as there is one field displayed in JSON format, and it happened to be the the longest and therefore its showing up on that pic.

{"name":value}

is the structure of JSON objects.

However the file is CSV as Caspio dont offer exports in JSON formats (Except for that single field).

Now the story is as follows, if I first export to CSV then I'm able to link it to Access, but CSV is not an option as it causes different issues.

At the moment I'm basically looking to figure out what is wrong with that record so I can.

1- Fix it manually.

2- Know which field contains the largest amount of characters so I can convert that field to Text(6400) in Caspio, which in that case its being handled differently and will not cause such problem.(Had this in the past long time ago and after converting field it fixed the problem).

Thanks

You can define that particular field as a memo data type.

ASKER

You can define that particular field as a memo data type.How do i know which field (Table has close to a hundred...topic for itself..)?

just some ideas to share, probably you can do a "reverse engineering"?

1. try verify the data stored in caspio and what's the data type and length

2. compare it with the Access target table's field data type and length

3. do you really want to import all fields to MS Access? the original error seems like a limitation error in MS Access.

We probably need to test it out for the fields before field: ScheduleID. You may first import the data into the "staging" table (with field's data type set to Memo), and then you could have another step for your data cleansing phase.

1. try verify the data stored in caspio and what's the data type and length

2. compare it with the Access target table's field data type and length

3. do you really want to import all fields to MS Access? the original error seems like a limitation error in MS Access.

You can define that particular field as a memo data type.>>How do i know which field (Table has close to a hundred...topic for itself..)?

We probably need to test it out for the fields before field: ScheduleID. You may first import the data into the "staging" table (with field's data type set to Memo), and then you could have another step for your data cleansing phase.

ASKER

We probably need to test it out for the fields before field: ScheduleID.At the moment somehow things changed as mentioned, I'm not getting that error with CSV, and in Access the error is as follows.

So basically I'm looking for a shortcut instead of having to change field types field by field, export examine the error etc...

One or more records has reached the maximum data limit for Microsoft Access MDB files. To export this view, please remove some characters and try again.

Thanks

So basically I'm looking for a shortcut instead of having to change field types field by field, export examine the error etc...

Yup, that's true. But as mentioned that we are exploring the possibility of using memo fields in a staging table not the original table, that would be helpful so that at least data can be imported, and then we can validate the length of data when it's necessary.

you could replicate the original table to staging table, and then modify the data type to memo for the testing.

hope this is practical to you.

Ben

Please post a representative sample of the data that has at least one of those too-long records. I have an idea.

Please post a representative sample of the data that has at least one of those too-long records. I have an idea.

ASKER

@Aikimark,

Since data has PHI, I cannot post it.

Can you suggest something to obfuscate the data but should remain valid for testing?

Thanks

Since data has PHI, I cannot post it.

Can you suggest something to obfuscate the data but should remain valid for testing?

Thanks

bring it into Notepad++ and do some find/replace operations. I only need enough records to see both normal and too-long records.

Also, you only need to clean the PII from one record. You can copy/paste the clean PII to other records.

ASKER

@Aikimark,

I did some find/replace obfuscation, however to be on safe side will send you file via private message.

Thanks

I did some find/replace obfuscation, however to be on safe side will send you file via private message.

Thanks

ASKER CERTIFIED SOLUTION

membership

This solution is only available to members.

To access this solution, you must be a member of Experts Exchange.

ASKER

Hi,

Can you give me instructions how to run this.

Tried saving as .ps1 under my download folder, then right click/run with powershell script, nothing happened.

Thanks

Can you give me instructions how to run this.

Tried saving as .ps1 under my download folder, then right click/run with powershell script, nothing happened.

Thanks

Did you change the path of the CSV on the first line?

if you are at a command line prompt, type this:

It should get you close.

if you are at a command line prompt, type this:

c:\users\bfuchs\downloads\> Powershell -file nameofscript.ps1It should get you close.

ASKER

See attached.Untitled.png

ASKER

The c:\users\bfuchs\downloads\

Since you are already in Powershell, do the following:

Since you are already in Powershell, do the following:

cd \users\mf\downloads

& .\Aikimark.ps1ASKER

What am I missing here?

Untitled.png

Untitled.png

Probably the second downloads in the path could be wrong..

Curious is it a .csv ? after all

Probably the second downloads in the path could be wrong..

What am I missing here?Where did you save the PS script?

Confirm that you named it "aikimark.ps1"

Confirm that you changed the path and file name on the first line of the script.

ASKER

Hi,

Both files are under download folder, see attached.

Can you give me exact steps how to run this

thanks

Untitled.png

Both files are under download folder, see attached.

Can you give me exact steps how to run this

thanks

Untitled.png

I posted the two steps in my earlier comment. Did you try that?

Did you alter the first line of the PS script to reflect the path and name of your CSV file?

Did you alter the first line of the PS script to reflect the path and name of your CSV file?

ASKER

Okay got this to work, will test now.

yaaay!

Keep us apprised of your progress.

Keep us apprised of your progress.

ASKER

Hi,

Okay created a file containing those two record and when running with this script I get the attached.

How do I proceed now?

ThanksUntitled.png

Okay created a file containing those two record and when running with this script I get the attached.

How do I proceed now?

ThanksUntitled.png

what does your script look like?

It looks like you ran the script once and got the expected output.

It looks like you're missing a leading "& " from your launching of the script

It looks like you ran the script once and got the expected output.

It looks like you're missing a leading "& " from your launching of the script

ASKER

1. Change the last line to eliminate duplicates in the output

2. Change the Access table definition so that the listed columns are data type memo instead of text

} | sort -Property fieldname -Unique | ft fieldname2. Change the Access table definition so that the listed columns are data type memo instead of text

ASKER

1. Change the last line to eliminate duplicates in the outputthis removes the character count, see attached.

Thanks

Untitled.png

You don't need the character count. You only need to know which columns are longer than 255 characters. Only these columns need to be memo data type.

ASKER

Thank you!

You don't need the character count. You only need to know which columns are longer than 255 characters. Only these columns need to be memo data type.When I need an general importer, then I use always Memo for all columns in the staging table. Then a query evaluates the data type, thus also length, of the data in all columns. So you can produce a better error reporting, when the data is incorrect.

All these issues are handled nicely if you open the text file and read it line by line...full control