Lagging speeds between servers

Overview:

1 NAS for backups

1 Hyper-V Gen 1 file server (Windows Server 2019)

1 Hyper-V Gen 2 file server (Windows Server 2019)

Each is on a separate Dell host.

Those Dell hosts are connected to a Dell SAN which holds all the data, including the VHD files for the file servers.

When transferring data between the file servers, the speed varies between 100 KB/s and 2-3 MB/s.

When backing up data to our backup NAS, we are getting a consistent 2-3 MB/s.

It would take a week to fully back up at this rate, and I cannot seem to figure out what's going on. Everything runs fine apart from this speed issue.
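To put the 2-3 MB/s figure in perspective, here is a rough back-of-the-envelope calculation in Python. The 1.5 TB data size and the ~110 MB/s rate (roughly what a healthy 1 Gbit link tends to deliver in practice) are assumptions for illustration only; neither number is stated in this thread.

```python
SECONDS_PER_DAY = 86_400


def size_moved_tb(rate_mb_s: float, days: float) -> float:
    """Decimal terabytes moved in `days` at a steady `rate_mb_s` (decimal MB/s)."""
    return rate_mb_s * days * SECONDS_PER_DAY / 1_000_000


def transfer_days(size_tb: float, rate_mb_s: float) -> float:
    """Days needed to move `size_tb` (decimal TB) at `rate_mb_s` (decimal MB/s)."""
    return size_tb * 1_000_000 / rate_mb_s / SECONDS_PER_DAY


if __name__ == "__main__":
    # How much data does "a week at 2.5 MB/s" actually correspond to?
    print(f"{size_moved_tb(2.5, 7):.2f} TB moved in one week at 2.5 MB/s")
    # Assumed comparison point: the same (assumed) 1.5 TB at ~110 MB/s.
    print(f"{transfer_days(1.5, 110) * 24:.1f} hours for 1.5 TB at 110 MB/s")
```

If the real data set is anywhere near that size, the observed rate is far below what the link should manage, which points at a bottleneck rather than a normal limit.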

You may also have to run a lightweight speed test continuously for 24 hours to determine whether IOPS greatly increase on some schedule, for example because of a background job running on some machine that accesses your SAN.
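One way to approximate such a long-running lightweight test is a small Python script that writes and reads back a small file at a fixed interval and logs the throughput with a timestamp. This is only a sketch: the file name `speed_probe.tmp`, the 16 MB probe size, and the 5-minute interval are arbitrary assumptions, and it measures throughput dips as a symptom of competing load rather than measuring IOPS on the SAN directly.

```python
import csv
import os
import time
from datetime import datetime

CHUNK = 1024 * 1024  # 1 MiB


def probe_once(target_dir: str, size_mb: int = 16) -> tuple[float, float]:
    """Write, then read back, a small file. Returns (write MiB/s, read MiB/s)."""
    path = os.path.join(target_dir, "speed_probe.tmp")  # hypothetical file name
    payload = os.urandom(CHUNK)  # incompressible data

    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(size_mb):
            f.write(payload)
        f.flush()
        os.fsync(f.fileno())  # push the data out of the OS write cache
    write_s = max(time.perf_counter() - start, 1e-9)

    # Note: the read may be served from the OS cache, so treat it as an upper bound.
    start = time.perf_counter()
    with open(path, "rb") as f:
        while f.read(CHUNK):
            pass
    read_s = max(time.perf_counter() - start, 1e-9)

    os.remove(path)
    return size_mb / write_s, size_mb / read_s


def run(target_dir: str, log_path: str,
        interval_s: int = 300, duration_s: int = 24 * 3600) -> None:
    """Append one timestamped sample to a CSV log every `interval_s` seconds."""
    end = time.monotonic() + duration_s
    with open(log_path, "a", newline="") as log:
        writer = csv.writer(log)
        while time.monotonic() < end:
            write_rate, read_rate = probe_once(target_dir)
            stamp = datetime.now().isoformat(timespec="seconds")
            writer.writerow([stamp, f"{write_rate:.1f}", f"{read_rate:.1f}"])
            log.flush()
            time.sleep(interval_s)
```

Calling something like `run(r"D:\probe", r"C:\temp\probe_log.csv")` (both paths are placeholders) and then looking for times of day where the logged rates collapse would show whether the slowdown follows a schedule.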

Lots of components to consider: physical disk read speed, disk write speed, caching, disk configuration, VM config, controller hardware, cabling, storage network, data network, and competing workloads.

You just have to work your way through each component and identify the choke point.

Provide more details for more specific advice.

ASKER

Great. I'll begin working on those Monday and start posting more details and information.

ASKER

@michael -

I attempted to do this, but it's missing a few steps, or I am just a complete idiot at this (I have never done this one before):

test disk speed to SAN is ok, i.e. using DiskSpd, see https://bobcares.com/blog/measure-storage-performance-and-iops-on-windows/

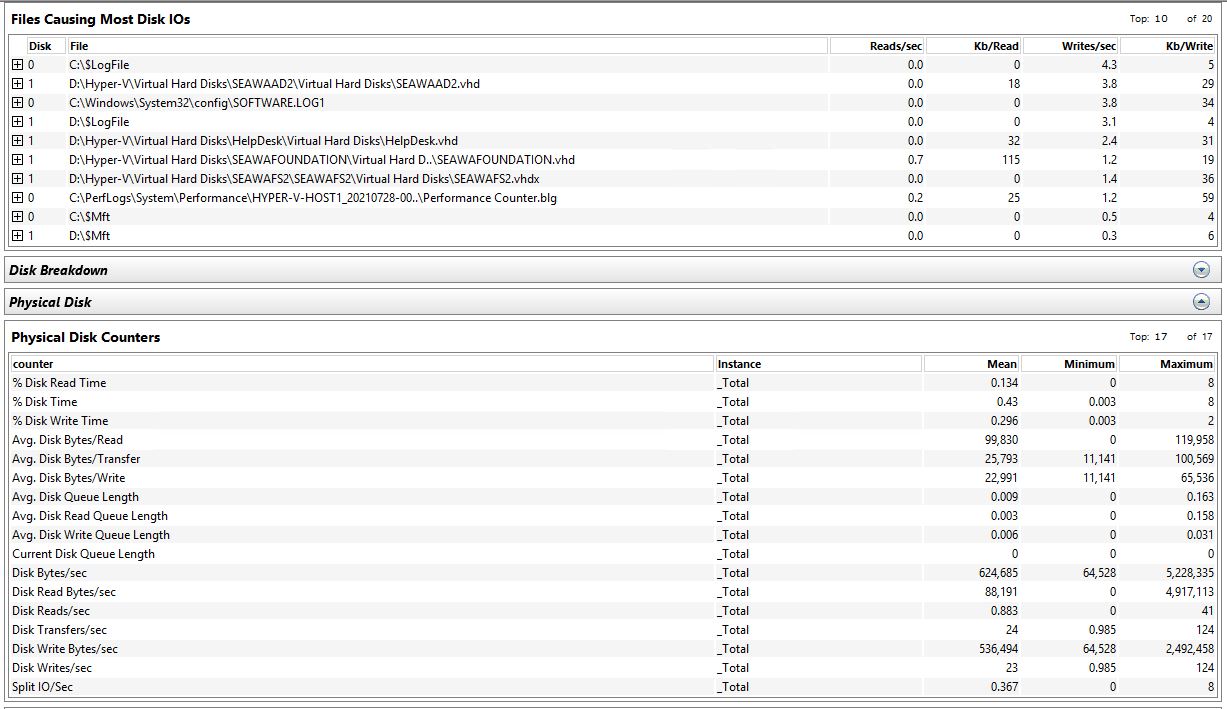

I have attached screenshots of what I think I got from that. Drive D is the SAN volume.

For iperf3, I can get that on the Windows server, but I'm not sure how to run it on the SAN.

Network components seem fine. I had a cabling issue I resolved, but no change in anything else.

General actions you can do:

- make sure all your systems' firmware (BIOS, RAID controller, FC controller, network switch etc.) is up-to-date

Completed, and Dell verified current versions.

- make sure all your systems' drivers (esp. network controller, FC controller) are up-to-date

Completed, and Dell verified current versions.

- check the cabling for bad cables

Completed - replaced one bad one.

- if you're using SFP/GBICs, make sure they are on the device manufacturer's compatibility list.

We are not utilizing this :(

ASKER

@david

Do you have a good software to use?

ASKER

@Gary

I am including specs in this along with a few screen shots and a lot more details now :)

Dell Server Host

Dell SAN - PowerVault ME4024

5 SSDs and 8 SAS drives at 10k RPM

Network ports - everything is VLAN, but Dell set up an iSCSI 1/2 for them (we have 2 hosts, but I am only utilizing one at this point)

Pool health shows ok

Disk Performance: Group A = SSD

Group B = SAS

No errors in event log

The yellow entries are events showing disk scrubs completed with no errors found.

The ONLY thing I can see that is suspicious is the Host Ports: I get info on Host Ports A,

but on Host Ports B, I get nothing.

- So each Dell host is directly connected to the SAN network? No network switch in between?

- Is this 10 Gbit copper?

- the "file servers" are both VMs on the same host?

- check the network card statistics on each Hyper-V host for correct network speed and no collisions/retransmits on both NICs for iSCSI and LAN.

- I don't know the Dell SAN but if there is a way to get the same information from the iSCSI ports check those too

- In case there is a switch in between, check the switch port config and statistics/network speed too.

iperf3: you can test between the 2 hosts and between the 2 guests/file server
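A minimal sketch of scripting that iperf3 test from Python, assuming `iperf3 -s` is already running on the other host or guest and that `iperf3` is on the PATH of the machine running the script. The server name in the usage note is a placeholder, and the JSON field names reflect what iperf3's `-J` output looks like for a TCP test as far as I know, so verify them against your own output.

```python
import json
import subprocess


def build_client_cmd(server: str, seconds: int = 10) -> list[str]:
    """iperf3 client command: -c <server>, -t <seconds>, -J for JSON output."""
    return ["iperf3", "-c", server, "-t", str(seconds), "-J"]


def received_mbit_per_s(report: dict) -> float:
    """Pull the receiver-side throughput (Mbit/s) out of a parsed iperf3 JSON report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def sender_retransmits(report: dict):
    """TCP retransmit count if the sender reports it, otherwise None."""
    return report["end"].get("sum_sent", {}).get("retransmits")


def measure(server: str, seconds: int = 10) -> float:
    """Run the iperf3 client against `server` and return Mbit/s received."""
    result = subprocess.run(build_client_cmd(server, seconds),
                            capture_output=True, text=True, check=True)
    return received_mbit_per_s(json.loads(result.stdout))
```

For example, `measure("hyperv-host-2")` (a made-up host name) run host-to-host and then guest-to-guest gives two numbers to compare: if the hosts reach near line rate but the guests do not, the virtual switch or VM configuration is the more likely suspect.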

You asked, "Do you have a good software to use?"

I only run server side Linux, so for me there are many options.

Since you're running Windows, I'd start by searching for Linux tools that give you the info required, then search for a Windows alternative to each Linux tool.

Like nethogs or htop.

- test disk speed to SAN is ok, i.e. using DiskSpd, see https://bobcares.com/blog/measure-storage-performance-and-iops-on-windows/

- test network speed between systems, i.e. using iperf3, https://iperf.fr/

- check the network components' error counters for packet loss

General actions you can do:

- make sure all your systems' firmware (BIOS, RAID controller, FC controller, network switch etc.) is up-to-date

- make sure all your systems' drivers (esp. network controller, FC controller) are up-to-date

- check the cabling for bad cables

- if you're using SFP/GBICs, make sure they are on the device manufacturer's compatibility list.