Google tries to index http when it's all https

I was wondering why Google Search Console is not indexing a site and finally noticed that it keeps trying to index http, but the site is https.

This is confusing and I'm not sure how to fix it, because I can clearly see that Google only knows the site as https, yet it keeps trying http and so fails.

A sitemap was submitted, which is itself an https URL, and the links in the sitemap are https as well.

https://www.iogadgets.com/sitemap_index.xml

The web server is set to forward http to https and Google doesn't seem to recognize that it's being forwarded to https.
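One way to see what a crawler gets from the http to https forward is to walk the redirect chain by hand, one request at a time. Below is a minimal Python sketch, not anything from the original setup: `fetch_head` and `redirect_chain` are names made up for this example, the `redirect-check/0.1` user agent is a placeholder, and the code assumes the server answers HEAD requests the same way it answers GET.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = (301, 302, 303, 307, 308)


def fetch_head(url, timeout=10):
    """Send one HEAD request without following redirects.

    Returns (status_code, Location header or None).
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        # Placeholder user agent (an assumption for this sketch).
        conn.request("HEAD", path, headers={"User-Agent": "redirect-check/0.1"})
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def redirect_chain(url, fetch=fetch_head, max_hops=10):
    """Follow redirects manually and return a list of (url, status) hops."""
    chain = []
    for _ in range(max_hops):
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECT_CODES or not location:
            break
        # Location may be relative, so resolve it against the current URL.
        url = urljoin(url, location)
    return chain
```

Calling `redirect_chain("http://www.iogadgets.com/")` and printing each hop should show a single 301 followed by a 200 on the https URL if the forward is behaving. A loop, a long chain, or a final non-200 status would be worth a closer look.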

Using Tor and browsers from other networks, there's nothing wrong with this site.

There must be something really obvious that I'm not seeing at this point.

How can this be fixed?

ASKER

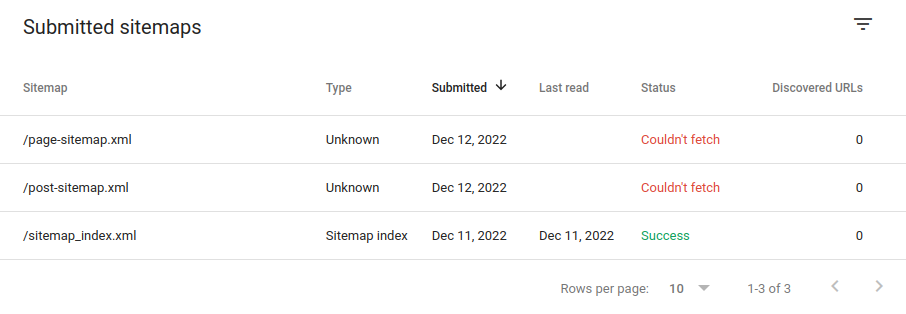

I thought I'd try adding the domain instead of using the URL method but it's doing the same thing.

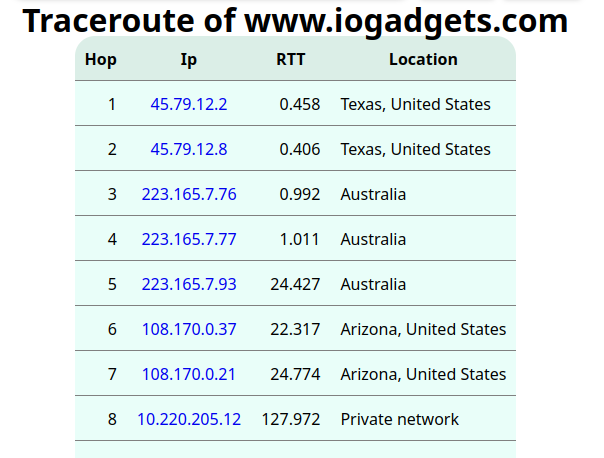

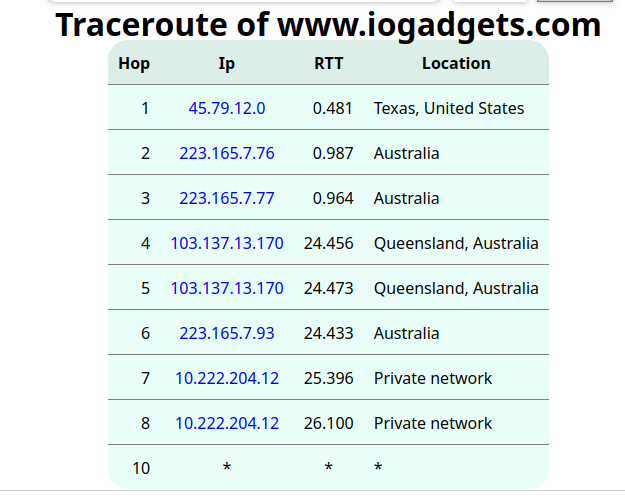

I ran the traceroute again and this time it seems to go to the correct data center where before, it seemed to end in AU.

It's starting to look like whatever DNS those services are using is out of date or something. What's weird is that it's still trying to index an http site, but at the correct IP, since I can see that traffic in the log.
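A quick way to compare what a given machine resolves against the address the site should be on is sketched below in Python. It only reflects the resolver of the machine it runs on, not whatever DNS those online tools use, and the addresses in the usage note are documentation placeholders, not the real server IP. `resolve_ipv4` and `unexpected_addresses` are names invented for this example.

```python
import socket


def resolve_ipv4(hostname):
    """Return the sorted, de-duplicated IPv4 addresses the local resolver gives."""
    infos = socket.getaddrinfo(
        hostname, 443, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


def unexpected_addresses(resolved, expected):
    """Return resolved addresses that are not in the expected list."""
    return sorted(set(resolved) - set(expected))
```

For example, `unexpected_addresses(resolve_ipv4("www.iogadgets.com"), ["203.0.113.10"])` returns an empty list when everything resolved matches the expected address (203.0.113.10 stands in for the real one here). Running it from machines on different networks would show whether stale records are still being served somewhere.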

Some company should hire me as someone who seems to have an ability to discover a lot of weird stuff.

ASKER

I think I can confirm this. I removed the site and added it again then ran the live url test. I get nothing.

I then submitted the sitemap again and it pulled up a bunch of sites that aren't mine.

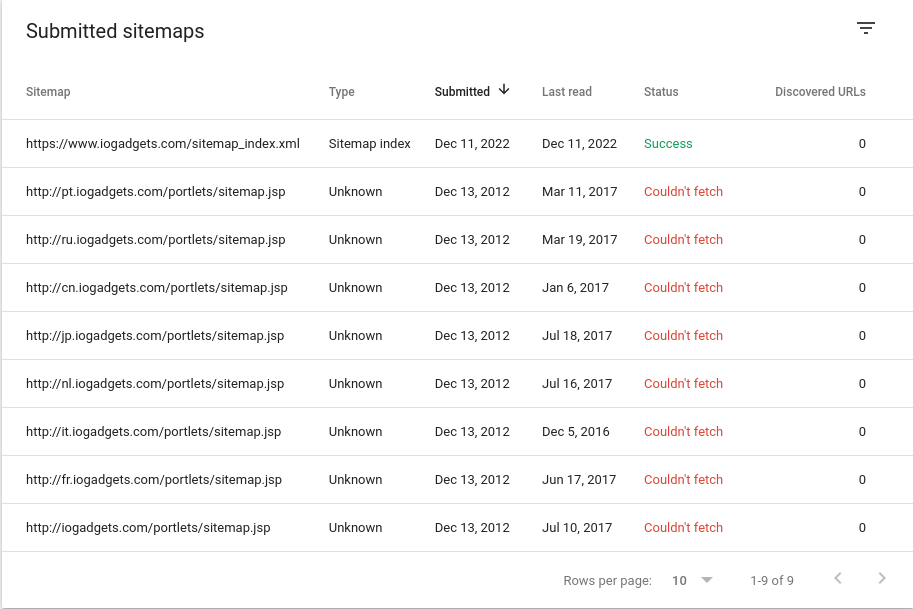

Since it says that the first one was a success, maybe it's the others that have been preventing the indexing. I'll remove those and try again. Maybe I'll be forced to add all those sub domains into my DNS records but it doesn't answer why GoDaddy's DNS records haven't propagated.

It also doesn't answer why Google only tries the www site over http when the only site submitted to Google is the https one.

BTW, the previous owner seems to have been an online magazine and likely had all kinds of DNS info out on the net.

https://web.archive.org/web/20160119060544/http://iogadgets.com/

Not sure if using this domain will be a plus when this settles or if it will hurt the site.

I'll also re-submit using url again since using domain ends up trying to use all those old sub-domains.

ASKER

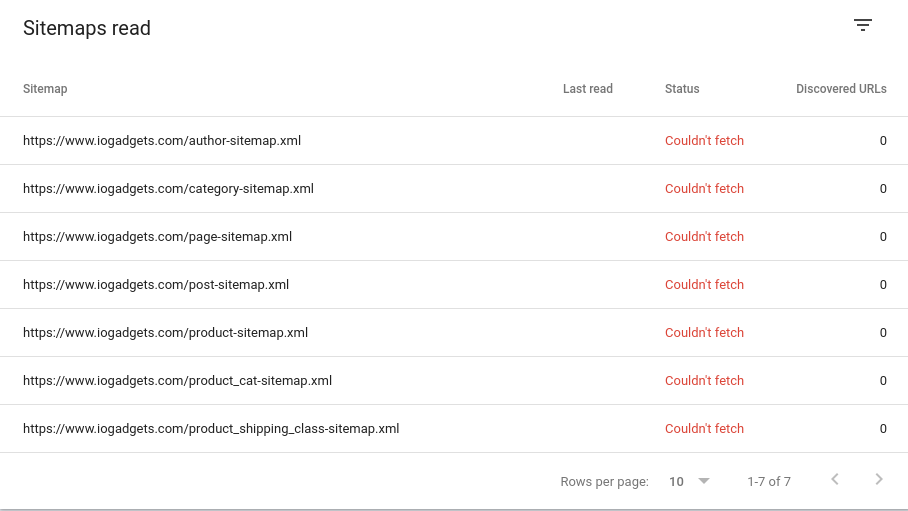

Well how crazy is this. I removed all the other sitemap links leaving only the one which Google says succeeded.

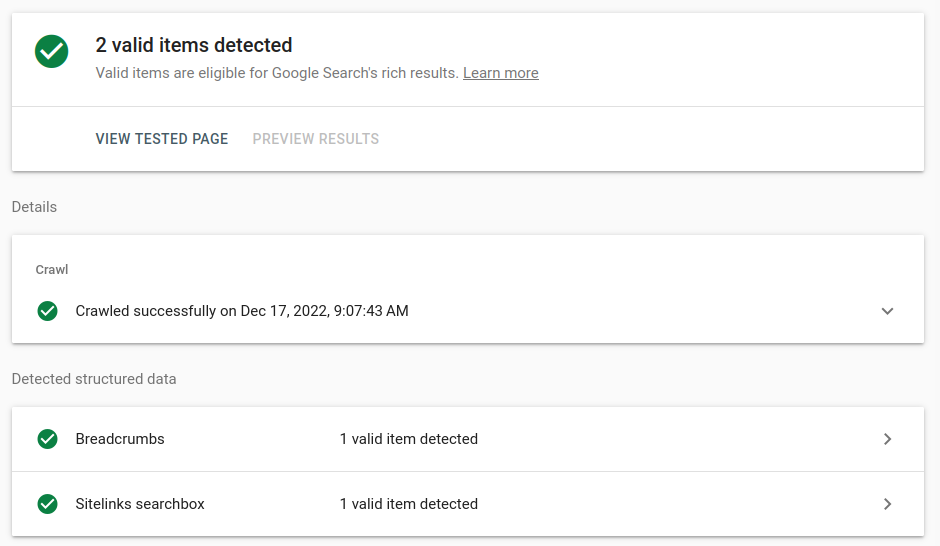

I then looked at the results and see this.

When I try to open sitemap links above from Google, each opens to the correct page.

Yet above, Google shows it could not fetch.

Not sure there's anywhere else to go with this question but I hope all the info I'm sharing will help someone some day. Or I've made some silly goof somewhere and I just can't see it.

ASKER

I added all those sub-domains, 301 to www and now I'm watching a lot of traffic hitting that web server.

Guess I should try taking advantage of that :).

I then submitted the sitemap again and it pulled up a bunch of sites that aren't mine.

I wonder if your site has been infected and if there is something hidden sending traffic to those links that are not yours. Make sure to disavow those links. But your site may need to be cleaned up.

ASKER

It's brand new, doesn't yet see traffic other than what I've done above so far.

I'm not sure where I'd start looking for an infection. I did check all of the top level pages and they are all fine.

I don't see any plugin I didn't install, nothing obvious at least in the rest. I don't install many plugins and most of them are paid and well maintained.

The http link isn't in any file or in the database, so whatever is going on, it's external.

As shown above, there seem to be conflicting DNS records across the Internet since they had sites in several countries. Now that I've added my DNS records, I would imagine it's only a matter of time before things settle.

That still doesn't answer why Google won't see the https site of course. All those sub domains are now pointed to the main www one.

I would just keep post and page site maps since the rest will conflict and potentially create duplicate content.

ASKER

Why are there .jsp files? Were those from you?

ASKER

Nope, I never submitted those but it's what Google showed once I entered the main sitemap.

No idea where it's getting those from, they don't exist on this site.

I told you this was weird :).

I think what's happening is that Google is somehow stuck on some old records and can't see that the site is renewed along with those sub-domains being gone.

ASKER

As time permits, I keep getting back to this but I noticed something that was missed.

Those http hits are all from petalbot and not from Google.

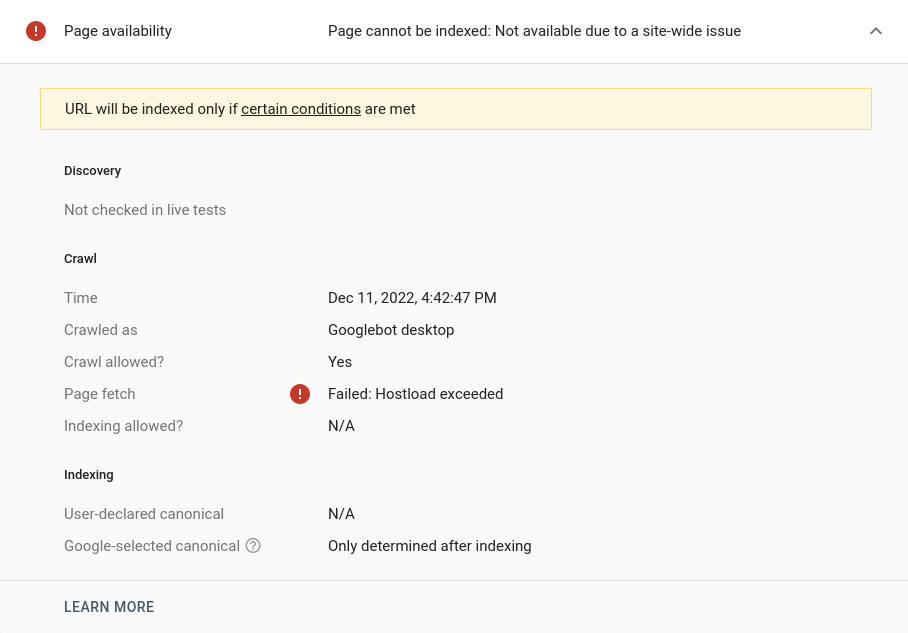

That said, Google is getting a 301 when visiting the site yet it's submitted as an asset in the search console and ownership is confirmed.

www.iogadgets.com 66.249.72.139 - - [14/Dec/2022:09:50:35 -0700] "GET /0720764.html HTTP/1.1" 301 242 582 - "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

www.iogadgets.com 66.249.72.140 - - [14/Dec/2022:09:50:36 -0700] "GET /0720764.html HTTP/1.1" 301 20 720222 719844 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
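To separate what Googlebot is doing from what PetalBot is doing, the log can be tallied by bot and status code. The following is a rough Python sketch that assumes every line has the same layout as the two entries above (virtual host, client IP, timestamp, request, status, some counters, referer, user agent). The regular expression and the function names are made up for this example and would need adjusting for a different Apache LogFormat.

```python
import re
from collections import Counter

# Assumed layout, based only on the two sample lines shown in this thread.
LOG_LINE = re.compile(
    r'^(?P<vhost>\S+) (?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

KNOWN_BOTS = ("Googlebot", "PetalBot")


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_LINE.match(line.strip())
    return match.groupdict() if match else None


def bot_name(agent):
    """Classify a user-agent string by case-insensitive substring match."""
    lowered = agent.lower()
    for bot in KNOWN_BOTS:
        if bot.lower() in lowered:
            return bot
    return "other"


def summarize(lines):
    """Count requests per (bot, status code) pair."""
    counts = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry:
            counts[(bot_name(entry["agent"]), int(entry["status"]))] += 1
    return counts


SAMPLE = [
    'www.iogadgets.com 66.249.72.139 - - [14/Dec/2022:09:50:35 -0700] '
    '"GET /0720764.html HTTP/1.1" 301 242 582 - "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    'www.iogadgets.com 66.249.72.140 - - [14/Dec/2022:09:50:36 -0700] '
    '"GET /0720764.html HTTP/1.1" 301 20 720222 719844 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
```

`summarize(SAMPLE)` counts both entries under `("Googlebot", 301)`. The user agent is whatever the client claims, so a tally like this shows who says they are Googlebot rather than proving it.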

I've since added not only 301's for dead links but also 410 to try and remove some of those.

I have checked the Apache config over and over and see nothing strange, and I can reach the site using Tor from any proxy it's used to date.

All I can think of is that Google is stuck on the previous owner's account or something along those lines and not fully considering the new one as the new owner.

I have now posted a question in the Google community so maybe I can update this to be of use to someone else some day if I can find a solution.

I think you said you disavowed those links in the search console but they came back. That means something is pointing Google to those links. Deep dive in the search console, make sure there is not some setting or link that you missed.

And like before, for the site maps, you should only submit pages and posts. The others for WordPress are just cross posting.

ASKER

Without being able to communicate with a real person at Google, it's been impossible to get anything fixed.

I've tried 410 on some of the links and allowing 404's so that Google can learn from that but it's an incredibly slow process simply waiting for the bot to come again.

Petalbot never seems to learn and continues to constantly hit the site looking for dead links.

I've yet to find a nice simple way of blocking at the firewall level so all sites can be free of that one.
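This is not a firewall rule, but since PetalBot reportedly honours robots.txt, a per-bot block there may be worth a try first. The Python sketch below only checks how a draft set of rules would be read, using the standard library's `urllib.robotparser`. The rules in `DRAFT_RULES` are an assumption written for this example, not the site's actual robots.txt, and a real crawler may interpret edge cases differently from Python's parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules: shut out PetalBot, leave everyone else alone.
DRAFT_RULES = """\
User-agent: PetalBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(rules_text, user_agent, url):
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(rules_text.splitlines())
    return parser.can_fetch(user_agent, url)
```

With these draft rules, `allowed(DRAFT_RULES, "PetalBot", "https://www.iogadgets.com/")` is False while the same call for `"Googlebot"` is True. Whether the bot actually backs off can only be confirmed by watching the logs afterwards.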

Yes, I've added, removed and modified various links trying to see a difference, but nothing seems to work so far and the site remains not indexed by Google, the most important one. Since it hasn't indexed the site, there's not much info in the console.

If it helps, this is what Yoast told me. No change to date and no replies in the community post yet.

We have checked the sitemap https://www.iogadgets.com/sitemap_index.xml, and it's working as expected. We would like to let you know that when submitting sitemap to search engines like Google, you have to submit the sitemap_index file https://www.iogadgets.com/sitemap_index.xml.

Resubmit sitemap

We recommend resubmitting your sitemap to your Google Search Console. Please try the following:

1. Clear all your caching from your theme, plugin, server, and CDN like Cloudflare or browser. If you are not sure how to clear the cache from theme/plugin please speak to those authors. If you want to clear the cache from the server, please speak to your host provider. To clear the cache from a browser, use this guide: https://yoast.com/help/how-to-clear-my-browsers-cache/.

2. Delete your sitemaps that are already within the Google Search Console. Doing so will not hurt the SEO of the site. You can follow the help article to remove and resubmit the sitemap.

3. Then submit the sitemap again. Be sure to submit only https://www.iogadgets.com/sitemap_index.xml and nothing else. This guide explains more: https://yoast.com/help/submit-sitemap-search-engines/.
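Before resubmitting, it can help to confirm that every child sitemap listed in the index really uses https. Here is a small Python sketch using the standard sitemap namespace. The XML in `SAMPLE_INDEX` is made up for illustration (example.com, with one deliberately wrong http entry) and is not the real content of the site's index, and the function names are invented for this example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def child_sitemaps(index_xml):
    """Return the <loc> URLs of every <sitemap> entry in a sitemap index."""
    root = ET.fromstring(index_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def non_https(urls):
    """Return the URLs that do not start with https://."""
    return [url for url in urls if not url.lower().startswith("https://")]


# Made-up sample with one http entry to show what a problem looks like.
SAMPLE_INDEX = """\
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://www.example.com/post-sitemap.xml</loc></sitemap>
  <sitemap><loc>http://www.example.com/page-sitemap.xml</loc></sitemap>
</sitemapindex>
"""
```

`non_https(child_sitemaps(SAMPLE_INDEX))` flags only the http entry. Feeding in the downloaded contents of the real `sitemap_index.xml` instead of the sample would show whether anything in the index still points at http.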

It's a small site, so your other option is to delete the site map altogether and let Google find its way through the navigation. That will work too. Something is intercepting what you have right now.

ASKER

All good ideas and I've tried that as well.

I've added sitemaps, removed them, added 301's for old ones it keeps hitting, you name it.

I also added, removed robots.txt, tried 410 responses etc.

I have both domain and url to see if one works while the other might not.

Day in and day out, it is trying to index content that used to be tied to this domain, getting a 404 and hopefully learning, but it still has not indexed the site. There seems to have been a lot of content on that site and I can't take advantage of it since Google prefers 404 over 301 from what I've read.

I've been filtering the logs watching what google alone is doing.

I also posted in the google community three days ago with no replies from anyone so far.

ASKER

Google finally started indexing the site today but it only found the top page, not even the single blog article that's on there right now.

Did you remove the site maps? Just let the navigation links work; Google will find the pages based on the links.

ASKER

Yes, that's what I was saying above. I've tried with and without maps and all the other things above.

I was watching the logs all this time to see if any one thing worked. This morning, I finally got an email from Google saying it started indexing the site on the 17th.

For the domain, it sees only the top page, it didn't find the blog article and shows links that aren't even on the site that all have 301/404. For the www.domain, it sees only one page, the top page.

If this is Google AI, we should all be scared :).

I picked your first comment as a solution since this might be a rare problem and most people looking for an answer would need to do what I did in the other question.

Thanks for helping Scott.

ASKER

Hi Scott,

I did add and remove the links and it's not those that's causing the problem.

Even trying to get Google to do a live url check fails.

I do a live url test of https://www.iogadgets.com/sitemap_index.xml which exists and is https but I get;

I see just one connection in the logs and immediately after, Google trying as usual.

Their help is useless and searching for this leads to mostly information about Google no longer indexing http pages.

Then trying the live url test tool using just the domain, I always get this useless error.

I've wondered if maybe Google is going somewhere else, because it's not hitting the site when I run the above.

I used a couple of traceroute tools on the net and one showed the correct IP for the site, but this other one shows a totally different IP.