Desperately need to do an in place upgrade from sql 2014 to 2019 or ??

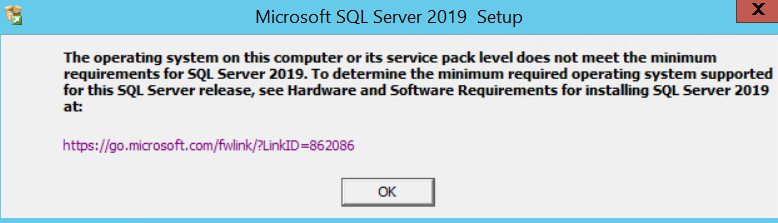

I'm in a pickle. We are on SQL 2014 / SSRS. We have a data warehouse that was set up by our vendor. We get a backup copy of the DB every night then I update the data warehouse from there. Two nights ago they upgraded our sql database to 2019 which means I can no longer update our data warehouse. I'm looking for the quickest solution until we can move it to a new server. I've found several MS articles that say an in place upgrade can be done from 2014 to 2019. When I try and run the install I get this error:

And here is one of the links that says you can do an in place upgrade:

And here is one of the links that says you can do an in place upgrade:

Any thoughts on getting the in place upgrade done or another suggestion that we can get this working quickly while planning a permanent move? The last time I moved our data warehouse it was a night mare and took weeks to get the bugs out.

You must be running "Windows Server 2016 or greater" as per: https://learn.microsoft.com/en-us/sql/sql-server/install/hardware-and-software-requirements-for-installing-sql-server-2019?view=sql-server-ver16

ASKER

Unfortunately 2012 R2. That also needs to be upgraded! But I want to get past this immediate issue first and then tackle the full conversion to a new server.

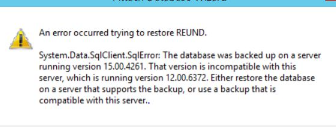

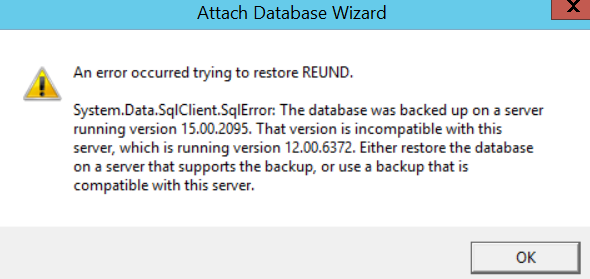

You get an error when executing the Restore on SQL 2014: could you tell us more about the error?

That error is alread known. I asked more details about the error you get when you Restore.

ASKER

We know you are backing up a DB hosted on SQL 2019. Which Compatibility Level is this DB? It might be 2019 but I guess it doesn't necessarily need to be 2019. It might run as well with a Compatibility Level of 2014. If you could downgrade the Compatibility Level from 2019 to 2014 you should be able to run the Restore on SQL 2014 successfully.

ASKER

That backup comes from our vendor and they refuse to change anything. But that is something I can try on our end - restore it as a 2019 database and resave it? Not quite sure how to do it, but it sounds like that might be easier...

you could do a temporary os and sql installation into a virtual machine import the database and then change the compatibility level to the lower version (hopefully no errors) then export the database and then import onto the old os and sql server

You can Restore, change Compatibility Level, Backup, Restore on SQL 2014.

Note that you can use W10 for installing a temporary MSSQL instance. That might be easier than having to create a server VM.

ASKER

We have 2 databases in our data warehouse. It turns out that our vendor only upgraded one so I can't mass change that server. I have the sql 2019 DB on another server so now I'm trying to see if I can use our ETL package to update the 2014 DB from the 2019 DB. I'm not sure if that is possible.

I see comments that I can restore it and change the compatibility level. I need to do this nightly - not sure how long that would take each time and how to do it. If it was something quick I'd sure try it for the short term. Does anyone have instructions for doing this?

Thanks for the answers!

The restore duration depends on media size and hardware. Switching compatibility level is instant, and can be done by an alter database statement easily.

ASKER

Thank you - will try this as soon as I get back to my office today.

ASKER

Sadly the backup compatibility outcome is what I've expected. So your choices left are

- to keep it a 2019 DB on the temporary server and work with that,

- to restore to 2019, and run a Copy Database Wizard task recreating all objects.

With the Copy Database Wiizard you can create a DTSX package runnable by SQL Agent (or Scheduled Tasks, if you like that more). Copying a database however will last much longer than a restore.

ASKER

There are so many steps involved in moving SSRS the data warehouse to a new server. Based on past experience it would take more time than I feel I have. I am going to try the copy database wizard and see how that goes. But I thought I'd toss out this to see if anyone feels like it might work (ie should I waste my time trying or not).

Is it possible to modify my ETL packages that update the data warehouse so that it is pulling data from the sql 2019 server while updating the sql 2014 data? I don't know if I'd have the same compatibility issue or not. I'll try that if you think it is possible.

If your database is small, export/import should work fine.

If your database is not small, bcp is a good option since it is simple to use and fast to move data from one server to another.

ASKER

I was reading up on the copy database wizard and according to this article it can't be used to go to a lower level of sql: https://learn.microsoft.com/en-us/sql/relational-databases/databases/use-the-copy-database-wizard?view=sql-server-ver15

I guess that option is out.

It is the Generate Scripts Wizard, please use https://www.mssqltips.com/sqlservertip/2810/how-to-migrate-a-sql-server-database-to-a-lower-version/

as a reference.

Also this easy to follow Youtube video, https://www.youtube.com/watch?v=evgZYa7DTsk

ASKER

Thanks Wayne. I am running through that right now. It turns out our DB is 59 gb so I'm not sure how quickly it will generate the script or update the files! I'll be anxiously watching it. Here's hoping!

59GB is not small. I'm afraid it is too big for SSMS to handle.

ASKER

Is there anything else I can try?

I'd propose to use bcp as its named implied to handle bulk data fairly quick.

Here is the basic steps I think

1) create a share between SQL 2014 and the temp server which has the restored SQL 2019 database

This step is not mandatory but handy for transferring data.

2) for each table in the database on the temp server

do

bcp out data to a file on the share

done

3) for each table in the database in SQL 2014

a) disable constraints

b) empty all tables

c) bcp in data from the file on the share

d) enable constraints

Also FYI: https://stackoverflow.com/questions/155246/how-do-you-truncate-all-tables-in-a-database-using-tsql

Purely just another thought (I am not sure if it fits your case, just FYI)

Can you use linked server feature pointing SQL 2014 to the temp SQL 2019?

A linked server could indeed be used to access the temporary 2019 instance. You would create synonyms for each object to access (view, table), using a linked server target for each. This could introduce concurrency or performance issues, though, as the optimizer is very limited in choices.

If you do not expect structural changes (adding or dropping columns aso.), scripted truncate table plus insert into with linked server source for each table might work for you, though it's hard to tell how good that works with that lot of data.

This, btw, is how I perform Oracle to MSSQL migration. 59GB would probably not get transferred within 24 hours.

ASKER

There are hundreds of tables in this database. I was trying to find a script that gave me a count, but either it didn't work or there are thousands! I know the vendor has a ton of temp files out there so I'm not sure if my count was correct. If that is the case, it would think it would be tough to use the bcp?

I'm not sure if the linked server would work but will check it out. I was trying to go through and change all the ETL packages that update the data warehouse to point to sql 2019, but maybe a better option would be to try the linked server.

bcp is not difficult when dealing with large number tables.

You can use T-SQL to generate those commands.

Please take a look for an example for "bcp out" commands for all tables:

1> use oneGBDB

2> go

Changed database context to 'oneGBdb'.

1> select 'bcp '+table_name+' out c:\temp\'+table_name+'.csv -c -T -S localhost' from information_schema.tables where table_type='BASE TABLE';

2> go

-

bcp NATION out c:\temp\NATION.csv -c -T -S localhost

bcp REGION out c:\temp\REGION.csv -c -T -S localhost

bcp PART out c:\temp\PART.csv -c -T -S localhost

bcp SUPPLIER out c:\temp\SUPPLIER.csv -c -T -S localhost

bcp PARTSUPP out c:\temp\PARTSUPP.csv -c -T -S localhost

bcp CUSTOMER out c:\temp\CUSTOMER.csv -c -T -S localhost

bcp ORDERS out c:\temp\ORDERS.csv -c -T -S localhost

bcp LINEITEM out c:\temp\LINEITEM.csv -c -T -S localhost

(8 rows affected)For DW system, typically you have a large FACT table so that we can run one bcp session for it, and run another session for rest of tables at the same time.

ASKER

Thank you - will be trying that a bit later today. Appreciate the continued help. I mostly work with creating SSRS reports - not very familiar with SQL at all.

The database already exists on the sql 2014 server. Will I detach that and will the bulk copy recreate the tables? Or should I be leaving the tables and it will replace data? I'll keep researching this, but thought I'd ask these questions so I have an idea.

Understand. It will be very helpful if you can allocate a database resouces to help you if you decide to go with bcp.

bcp deals with data only, therefore it assumes that your SQL 2014 database should have same meta data structure as the temporary SQL 2019 database.

Just for FYI, I'd like also provide a ballpark number on bcp speed which I did a while back on my laptop.

You can see below the import speed is 122K rows/second

bcp lineitem in lineitem.csv -c -S . -d onegbdb -b 1000000 -U sa -P <password> -t ','

Starting copy...

1000000 rows sent to SQL Server. Total sent: 1000000

1000000 rows sent to SQL Server. Total sent: 2000000

1000000 rows sent to SQL Server. Total sent: 3000000

1000000 rows sent to SQL Server. Total sent: 4000000

1000000 rows sent to SQL Server. Total sent: 5000000

1000000 rows sent to SQL Server. Total sent: 6000000

6001215 rows copied.

Network packet size (bytes): 4096

Clock Time (ms.) Total : 49069 Average : (122301.6 rows per sec.)The bcp export is faster as well, and it is 191K rows/second.

1000 rows successfully bulk-copied to host-file. Total received: 5996000

1000 rows successfully bulk-copied to host-file. Total received: 5997000

1000 rows successfully bulk-copied to host-file. Total received: 5998000

1000 rows successfully bulk-copied to host-file. Total received: 5999000

1000 rows successfully bulk-copied to host-file. Total received: 6000000

1000 rows successfully bulk-copied to host-file. Total received: 6001000

6001215 rows copied.

Network packet size (bytes): 4096

Clock Time (ms.) Total : 31354 Average : (191401.9 rows per sec.)ASKER

Oh boy... I created the bcp code with all the lines for that DB. It came up with 5728 tables and many of those rows are static_recs along with the table names I'm most familar with. It is still doing the copy. I used your example above and am creating csv files.

I'm still confused on what I do with the import. Here are my questions:

* As I mentioned the DB already exists in the sql 2014 server. Right now it has data in the tables. I've truncated tables before, but is there a quick way to truncate 5728 tables? I read you have to disable all constraints before you can delete the data and then enable the constraints? Does that sound right? (Not sure how it knows what constraints to enable again!)

* And would I then do a statement like this for each table?

bcp RE7_21559..AppealCampaign in "C:\bcp\AppealCampaign.csv" -c -T

* do I actually copy the folder with the backups created in the out to the sql 2014 server and run it there?

* and one final question... I realized I had used the original name of the DB in sql 2019. The name of the DB is slight different in 2014. I'm assuming I can then just do the following (changed from above) bcp REUND..AppealCampaign in "C:\bcp\AppealCampaign.csv" -c -T

I feel so confused! I sincerely appreciate your help!

ASKER

Wayne you are fantastic! Thank you.

ASKER

Thank you to everyone who responded with suggestions. While some of them didn't work, all were appreciated. A special thank you to Wayne who continued to assist all day.

Thank you for refreshing our knowledge and learning something new.

Lots of useful stuff revealed:

OS compatibility for in place upgrade,

Downgrade from higher to lower version,

Generate Scripts Wizard's limitation.

Your afford is much appreciated.

Happy Holidays.

Which OS are you running SQL 2014 on?